Google Autocomplete Suggestions are one of the quickest ways to get keyword ideas in bulk.

In this post, we will learn how to scrape Google Autocomplete suggestions using Node.js.

Requirements for Scraping Autocomplete

User Agents

The User-Agent header identifies the application, operating system, vendor, and version of the client making the request. Sending a browser-like User-Agent helps our requests look like visits from a real user rather than from a script.

You can also rotate User-Agents so that consecutive requests don't all carry the same signature.
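A minimal sketch of rotation, assuming you keep your own pool of User-Agent strings and pick one at random for each request. The first string is the one used later in this post; the other two are illustrative examples you should replace with current browser strings. The helper name pickUserAgent is hypothetical.

```javascript
// Illustrative pool of browser User-Agent strings (replace with current ones)
const USER_AGENTS = [
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36",
  "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36",
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/115.0",
];

// Hypothetical helper: return a random entry from the pool.
// Math.random() is in [0, 1), so the index never reaches pool.length.
function pickUserAgent(pool = USER_AGENTS, random = Math.random) {
  return pool[Math.floor(random() * pool.length)];
}
```

In the Unirest request shown later, you would pass .headers({ "User-Agent": pickUserAgent() }) instead of a fixed string.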

If you want to further protect your IP from being blocked by Google, try these tips to avoid getting blocked while scraping Google.
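One common precaution is not to fire requests in a tight loop. A minimal sketch, assuming you scrape several keywords one after another: wait a random interval between requests. The helper names and the default 1 to 3 second range are hypothetical choices, not values published by Google.

```javascript
// Hypothetical helper: a random whole number of milliseconds in [minMs, maxMs)
function randomDelayMs(minMs, maxMs, random = Math.random) {
  return minMs + Math.floor(random() * (maxMs - minMs));
}

// Resolve after the given number of milliseconds
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run an async task for each item in order, pausing a random time between items
async function runWithPauses(items, task, minMs = 1000, maxMs = 3000) {
  const results = [];
  for (let i = 0; i < items.length; i++) {
    if (i > 0) await sleep(randomDelayMs(minMs, maxMs));
    results.push(await task(items[i]));
  }
  return results;
}
```

The task argument would be your own request function, for example one that fetches suggestions for a single keyword.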

Install Libraries

To scrape Google Autocomplete, we need an HTTP client from npm.

Before starting, make sure you have set up a Node.js project and installed the Unirest JS library.

You can install it by running this command in your terminal:

```bash
npm i unirest
```

Process

We will use Unirest JS to make a GET request to our target URL and retrieve the raw response data.

We will target this URL:

https://www.google.com/complete/search?&hl=en&q=coffee&gl=us&client=chrome
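The query parameters in this URL are hl (interface language), q (the search term), gl (country), and client (set to chrome here, which returns the JSON format this post works with). If you build the URL in code, letting URLSearchParams encode the values keeps multi-word queries such as "coffee maker" valid. A minimal sketch; buildAutocompleteUrl is a hypothetical helper whose defaults simply mirror the URL above.

```javascript
// Hypothetical helper: build the autocomplete URL with properly encoded parameters
function buildAutocompleteUrl(query, { hl = "en", gl = "us", client = "chrome" } = {}) {
  // URLSearchParams keeps insertion order and encodes spaces as "+"
  const params = new URLSearchParams({ hl, q: query, gl, client });
  return `https://www.google.com/complete/search?${params}`;
}
```

For example, buildAutocompleteUrl("coffee maker", { gl: "gb" }) produces a URL ending in q=coffee+maker&gl=gb&client=chrome.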

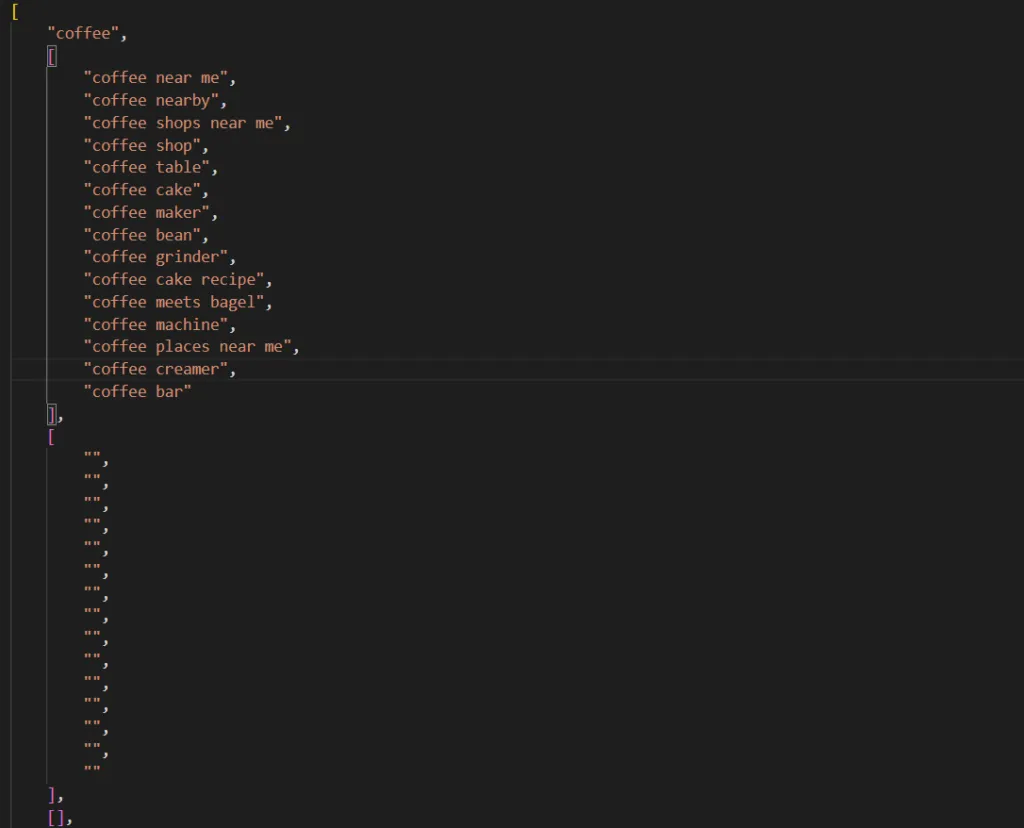

Paste this URL into your browser and press Enter. A text file will download to your device. Open it in your code editor as JSON.

Take a moment to understand the structure of this data; we will rely on it shortly to extract the information we need.
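Judging by the fields the scraping code in this post reads, the response is a JSON array in which index 1 holds the suggestion strings and index 4 holds an object with parallel arrays under "google:suggestrelevance" and "google:suggesttype", plus a "google:verbatimrelevance" number. Since this endpoint is not officially documented, it can be worth checking that shape before indexing into it. A minimal sketch; the function name is hypothetical and the sample array is made up to match that shape, not real Google output.

```javascript
// Hypothetical guard: check the positions the scraper relies on before using them
function looksLikeAutocompleteResponse(data) {
  if (!Array.isArray(data) || !Array.isArray(data[1])) return false;
  const meta = data[4];
  if (meta === null || typeof meta !== "object") return false;
  const relevance = meta["google:suggestrelevance"];
  const types = meta["google:suggesttype"];
  // The metadata arrays should line up one-to-one with the suggestions
  return (
    Array.isArray(relevance) &&
    Array.isArray(types) &&
    relevance.length === data[1].length &&
    types.length === data[1].length
  );
}

// Made-up sample with the same shape; indices 2 and 3 are placeholders here
const sample = [
  "coffee",
  ["coffee near me", "coffee table"],
  [],
  [],
  {
    "google:suggestrelevance": [1250, 600],
    "google:suggesttype": ["QUERY", "QUERY"],
    "google:verbatimrelevance": 1300,
  },
];
```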

Next, we import the library and define a function that scrapes the data from Google Autocomplete.

```javascript
const unirest = require("unirest");

const searchSuggestions = async () => {
  try {
    // Request the autocomplete endpoint with a browser-like User-Agent
    const response = await unirest
      .get("https://www.google.com/complete/search?&hl=en&q=coffee&gl=us&client=chrome")
      .headers({
        "User-Agent":
          "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36",
      });
```

In the code above, we use Unirest to make an HTTP GET request to our target URL and pass a User-Agent header so that our bot mimics an organic user.

Next, we parse the JSON string contained in response.body into a JavaScript object.

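```javascript
    // Parse the JSON text in the body (skip parsing if Unirest already returned an object)
    const data = typeof response.body === "string" ? JSON.parse(response.body) : response.body;
```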

Then we loop over the data to pick out the fields we need: data[1] holds the suggestion strings, while data[4] holds their relevance scores and types in the same order.

```javascript
    const suggestions = [];

    // data[1] holds the suggestion strings; data[4] holds metadata arrays in the same order
    for (let i = 0; i < data[1].length; i++) {
      suggestions.push({
        value: data[1][i],
        relevance: data[4]["google:suggestrelevance"][i],
        type: data[4]["google:suggesttype"][i],
      });
    }

    // Relevance score for the query exactly as typed
    const verbatimRelevance = data[4]["google:verbatimrelevance"];

    console.log(suggestions);
    console.log(verbatimRelevance);
  } catch (e) {
    console.log("Error : " + e);
  }
};

searchSuggestions();
```

Run this code in your terminal. It should print output similar to the following (suggestions change over time):

```
[
  { value: 'coffee near me', relevance: 1250, type: 'QUERY' },
  { value: 'coffee shops near me', relevance: 650, type: 'QUERY' },
  { value: 'coffee shop', relevance: 601, type: 'QUERY' },
  { value: 'coffee table', relevance: 600, type: 'QUERY' },
  { value: 'coffee maker', relevance: 553, type: 'QUERY' },
  { value: 'coffee bean', relevance: 552, type: 'QUERY' },
  { value: 'coffee grinder', relevance: 551, type: 'QUERY' },
  { value: 'coffee meets bagel', relevance: 550, type: 'QUERY' }
]
1300
```
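The for loop in the scraper can also be written as a pure function that takes the raw response text and returns the same objects, which makes the parsing step easy to test without hitting Google. A minimal sketch; parseSuggestions is a hypothetical name, and unlike the original loop it returns undefined fields instead of throwing when the metadata object is missing.

```javascript
// Hypothetical pure version of the parsing loop in the scraper
function parseSuggestions(raw) {
  const data = typeof raw === "string" ? JSON.parse(raw) : raw;
  const meta = data[4] || {};
  const relevance = meta["google:suggestrelevance"] || [];
  const types = meta["google:suggesttype"] || [];

  // Pair each suggestion with the metadata at the same index
  const suggestions = data[1].map((value, i) => ({
    value,
    relevance: relevance[i],
    type: types[i],
  }));

  return { suggestions, verbatimRelevance: meta["google:verbatimrelevance"] };
}
```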

Using Scrapingdog's Google Autocomplete API

Scraping keyword suggestions in bulk requires dedicated infrastructure that can handle proxy rotation and a large volume of requests.

Our Google Autocomplete API already takes care of these requirements, so our customers can extract keyword suggestions in bulk without the hassle.

New users get 1000 free credits, so you can easily test the Google Autocomplete API. The examples below use axios, which you can install with npm i axios.

```javascript
const axios = require('axios');

// Request suggestions for "football" in the US (replace APIKEY with your own key)
axios.get('https://api.scrapingdog.com/google_autocomplete?api_key=APIKEY&query=football&country=us')
  .then(response => {
    console.log(response.data);
  })
  .catch(error => {
    console.log(error);
  });
```

Results:

```json
{
  "meta": {
    "api_key": "APIKEY",
    "q": "football",
    "gl": "us"
  },
  "suggestions": [
    {
      "value": "football cleats",
      "relevance": 601,
      "type": "QUERY"
    },
    {
      "value": "football games",
      "relevance": 600,
      "type": "QUERY"
    },
    {
      "value": "football wordle",
      "relevance": 555,
      "type": "QUERY"
    },
    {
      "value": "football today",
      "relevance": 554,
      "type": "QUERY"
    },
    {
      "value": "football gloves",
      "relevance": 553,
      "type": "QUERY"
    },
    {
      "value": "football field",
      "relevance": 552,
      "type": "QUERY"
    },
    {
      "value": "football movies",
      "relevance": 551,
      "type": "QUERY"
    },
    {
      "value": "football positions",
      "relevance": 550,
      "type": "QUERY"
    }
  ],
  "verbatim_relevance": 1300
}
```
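To collect suggestions for several keywords, you can build one API URL per keyword and request them one at a time. A minimal sketch, assuming the same query parameters as the football request (api_key, query, country) and Node 18+ for the global fetch; buildApiUrl and fetchAll are hypothetical helpers, and APIKEY is a placeholder for your own key.

```javascript
// Hypothetical helper: one API URL per keyword, with the keyword URL-encoded
function buildApiUrl(apiKey, query, country = "us") {
  const params = new URLSearchParams({ api_key: apiKey, query, country });
  return `https://api.scrapingdog.com/google_autocomplete?${params}`;
}

// Fetch keywords sequentially and keep each keyword's "suggestions" array
async function fetchAll(apiKey, keywords, country = "us") {
  const results = {};
  for (const keyword of keywords) {
    const res = await fetch(buildApiUrl(apiKey, keyword, country));
    if (!res.ok) throw new Error(`Request for "${keyword}" failed: ${res.status}`);
    results[keyword] = (await res.json()).suggestions;
  }
  return results;
}
```

Usage would look like fetchAll("APIKEY", ["football", "basketball"]).then(console.log). Sequential requests are a deliberately simple choice here; they are slower than firing requests in parallel but easier on your credit usage and rate limits.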

Storing Data in a CSV File

We can store Autocomplete results in either JSON or CSV format for easier reading and safe storage. The example below writes them to a CSV file.

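```javascript
const axios = require('axios');
const fs = require('fs');

// Quote a field only if it contains a comma, quote, or newline (RFC 4180 style)
const csvField = (value) => {
  const s = String(value);
  return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
};

axios.get('https://api.scrapingdog.com/google_autocomplete?api_key=APIKEY&query=football&country=us')
  .then(response => {
    const suggestions = response.data.suggestions;
    // One row per suggestion: value, relevance, type
    const csvData = suggestions
      .map(item => [item.value, item.relevance, item.type].map(csvField).join(','))
      .join('\n');
    fs.writeFileSync('suggestions.csv', csvData);
    console.log('CSV file created successfully.');
  })
  .catch(error => {
    console.log(error);
  });
```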

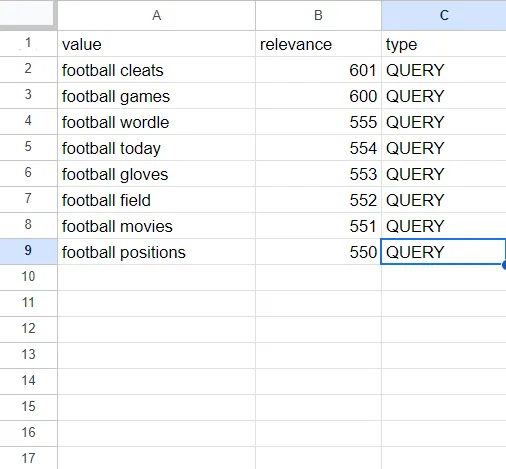

This will return you the following CSV file.
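This section also mentions JSON as a storage option. A minimal sketch of that route, assuming you pass in the suggestions array returned by the API (response.data.suggestions); saveAsJson and the default file name are hypothetical.

```javascript
const fs = require("fs");

// Hypothetical helper: write suggestions as pretty-printed JSON and return the text
function saveAsJson(suggestions, filePath = "suggestions.json") {
  const json = JSON.stringify(suggestions, null, 2);
  fs.writeFileSync(filePath, json);
  return json;
}
```

Inside the .then callback above, you would call saveAsJson(response.data.suggestions). JSON keeps the numeric relevance values typed, whereas CSV turns everything into text.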

Conclusion:

Keyword tracking is crucial for verifying whether your website’s selected keywords appear on the correct pages. Monitoring keywords can also improve your content optimization efforts.

In this tutorial, we learned how to scrape Google Autocomplete suggestions using Node.js. Feel free to message me if anything needs clarification.

Follow us on Twitter. Thanks for reading!

Additional Resources:

- Web Scraping with Nodejs

- Web Scraping Google Jobs using Nodejs

- Web Scraping People Also Ask Using Python & Google SERP API

- Web Scraping Google Search Results using Python

- Web Scraping Google Images using Python

- Web Crawling with Nodejs

- Automate Content Briefing using Scrapingdog’s Google Autosuggest API & Make.com