The lead generation market is booming in 2025, and hiring an agency can easily set you back $1,000 or more to find quality leads. But what if there were a smarter way to tap into publicly available data on Google using simple search operators?

In this tutorial, I’ll walk you through extracting “fashion influencer” leads from Instagram, gathering their email addresses, and setting up an automated system to send targeted outreach messages.

The same approach works for nearly any niche — you just need to pick the right platform. For instance, B2B brands may use LinkedIn to gather relevant contact details of key decision-makers — like CEOs, CFOs, or Directors — so they can directly pitch products or services to the right people.

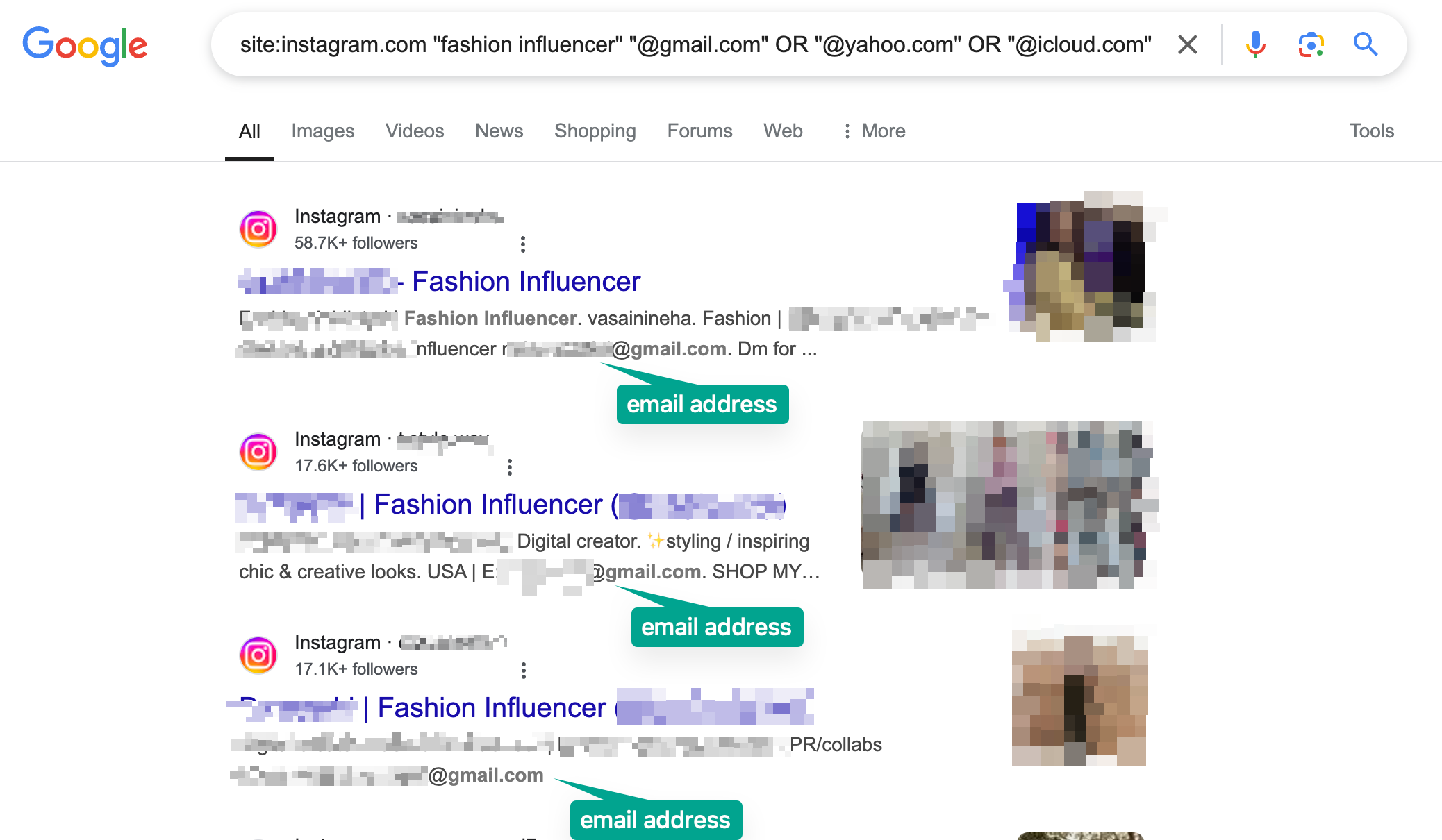

For this tutorial, we will use Instagram to find fashion influencers. Here is what our query with search operators looks like:

site:instagram.com "fashion influencer" "@gmail.com" OR "@yahoo.com" OR "@icloud.com"

This is what you will get in the Google Search results.

Google returns profiles whose description contains an email address, so the address shows up right in the result snippet.

You can add more email providers to the search query above. Just put the ‘OR’ operator between them, as we did in our query.
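If you plan to reuse this query for other niches or email providers, a small helper can assemble it for you. This is only a sketch of mine: `build_query` is a hypothetical name, not something Make.com or Google provides.

```python
def build_query(site, keyword, email_domains):
    """Combine a site filter, a quoted keyword, and OR-joined email domains."""
    # Each domain becomes a quoted "@domain" term; OR goes between the terms.
    domains = " OR ".join(f'"@{domain}"' for domain in email_domains)
    return f'site:{site} "{keyword}" {domains}'


query = build_query(
    "instagram.com",
    "fashion influencer",
    ["gmail.com", "yahoo.com", "icloud.com"],
)
print(query)
```

With the three providers above, this prints the same query we used in the tutorial. Add another domain to the list and it is appended with its own ‘OR’.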

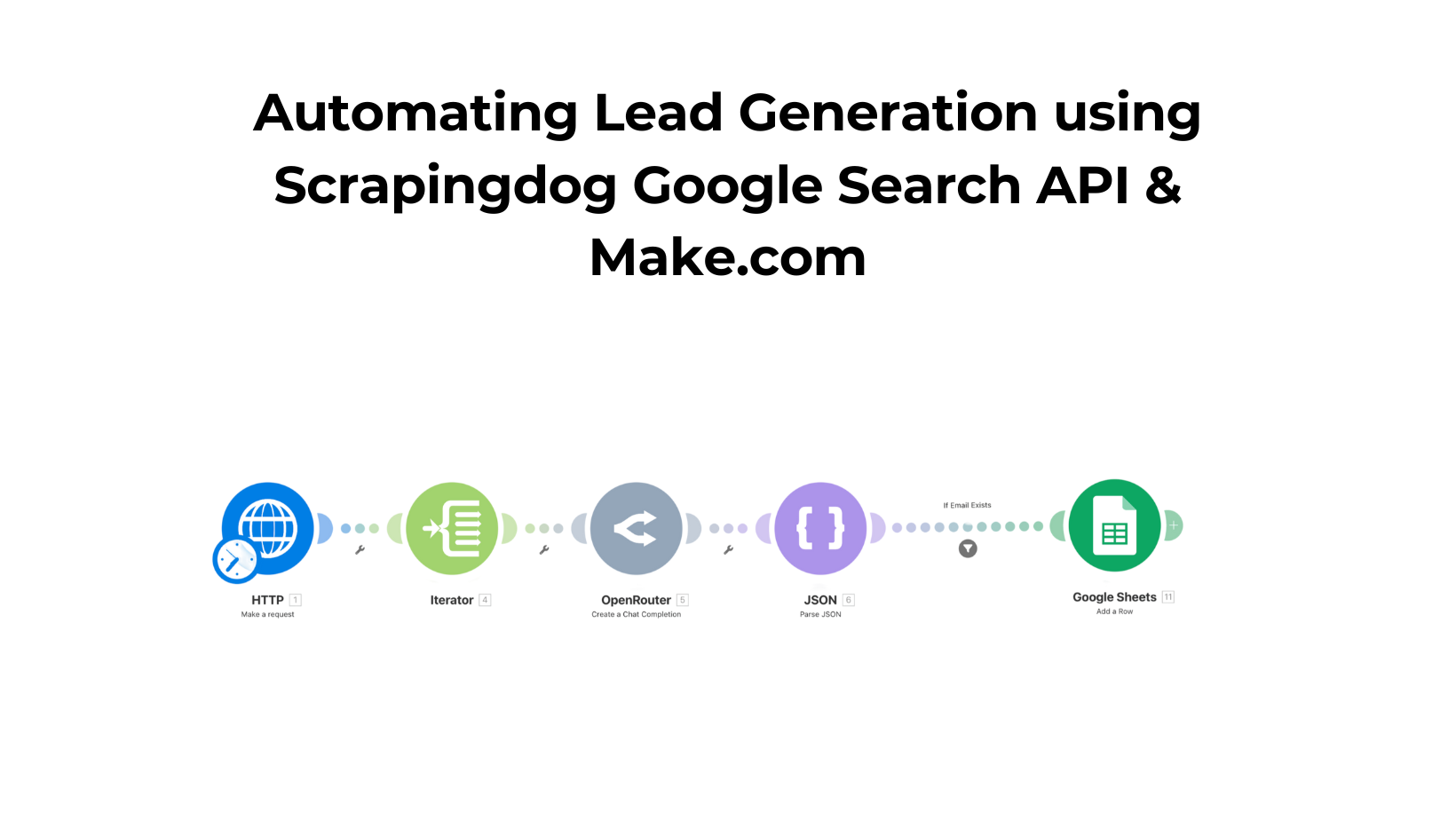

For this process, we will prepare 2 Make.com scenarios. Here’s what each one does:

- Scrape Google search results using our operators to retrieve email addresses.

- Send an outreach message to each retrieved address.

At the very end, I will give you a blueprint of the scenarios that you can download and use as is in your Make account.

Let’s start building this automation.

How This Automation Works

To build this automation, you will need:

1. Make.com account

2. Scrapingdog account

We will be using Google’s data, which we will retrieve in bulk. To do this, we will call Scrapingdog’s Google Search API from Make.com.

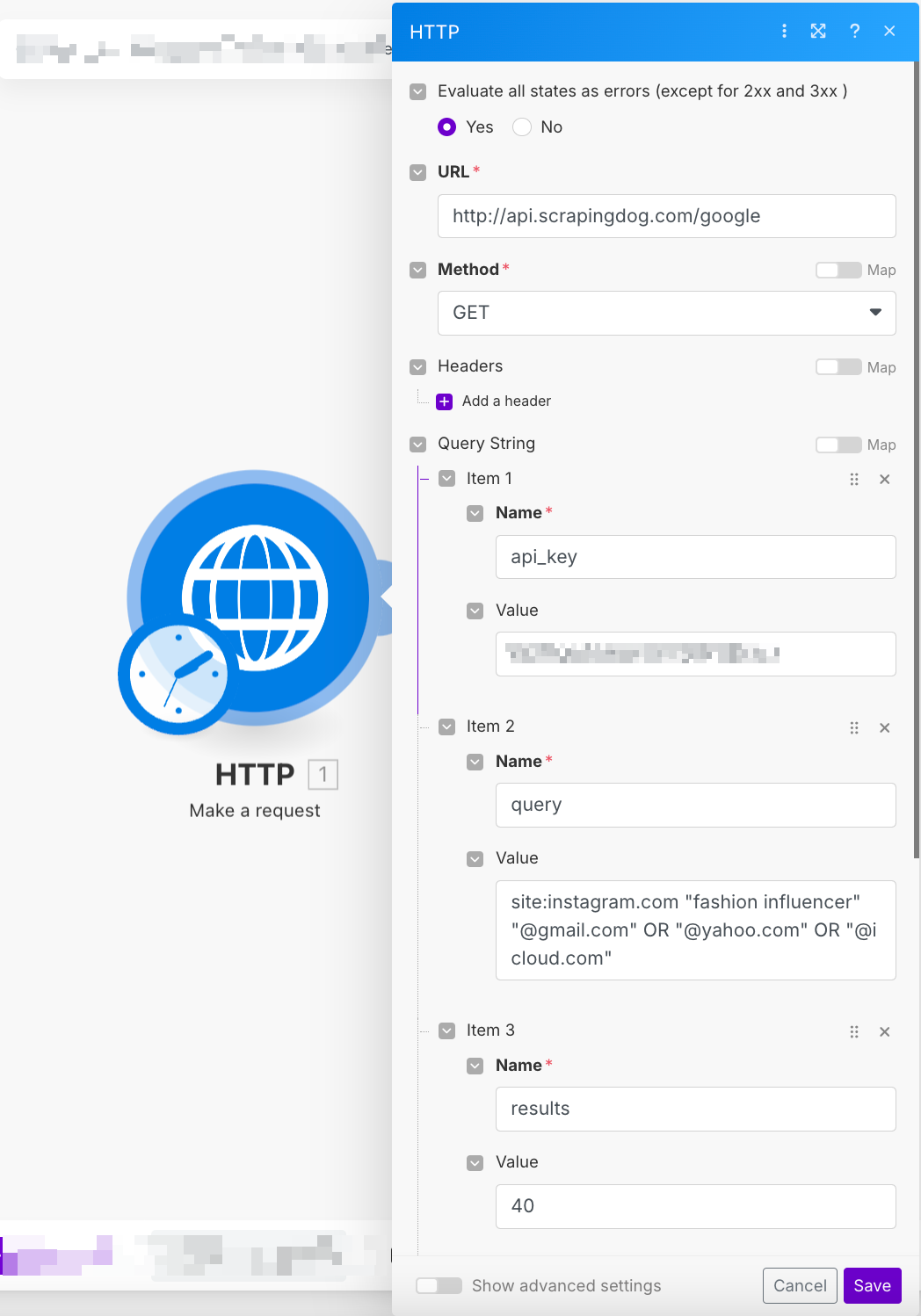

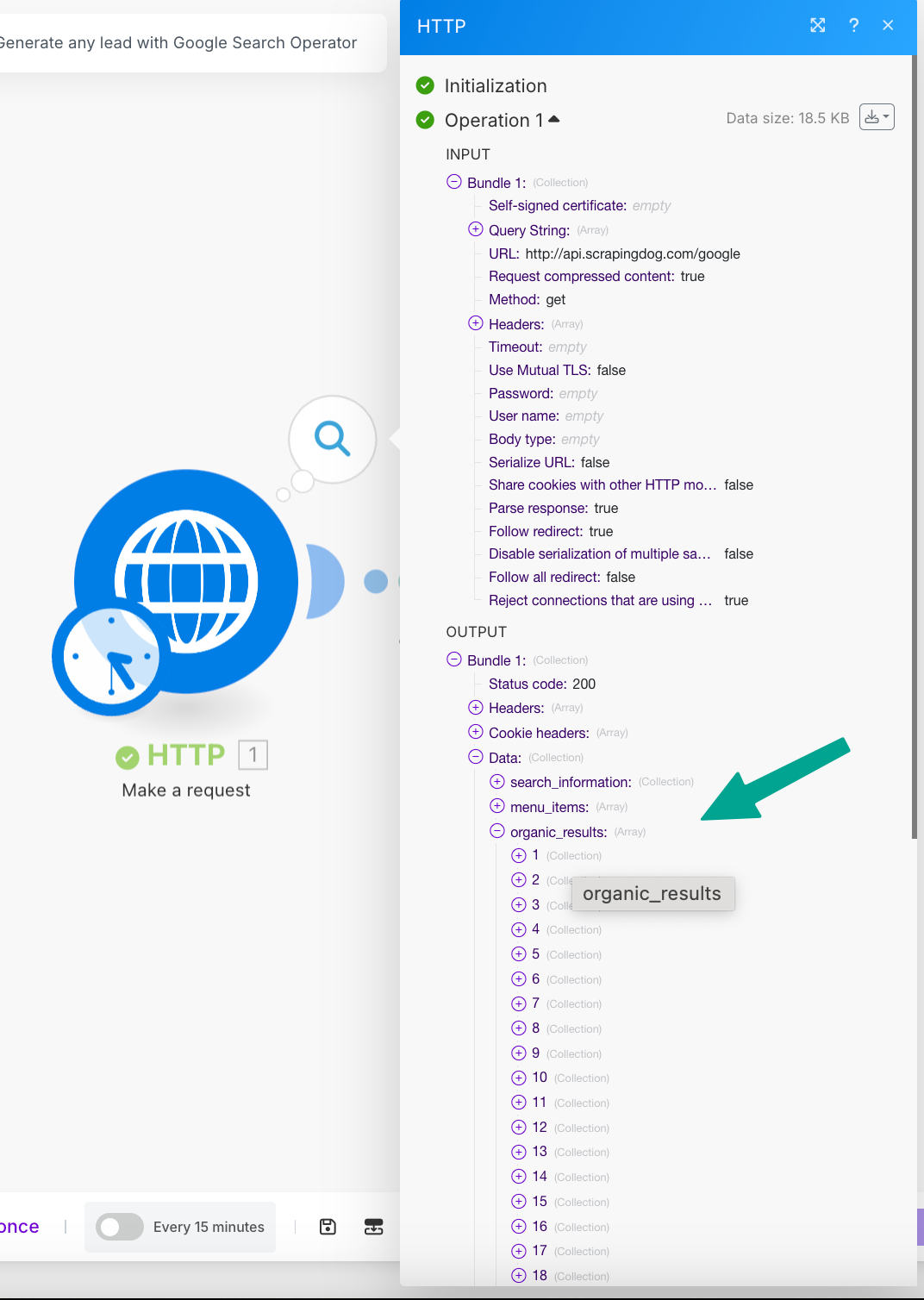

The first module we will be using is HTTP ➡️ Make a request

In this module, we will use the Google Search API endpoint to scrape Google search results.

You can read more about the input parameters for the Google search API here.

I am keeping the results restricted to 40; you can raise this to 100 to extract more leads.

Let’s test this module. As you can see in the image below, we got the data for 40 results.

The result is an array, so we will now use an Iterator to split these results into individual bundles.
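For readers who like to see the request outside of Make, here is a rough sketch of the URL that the HTTP module ends up calling. The endpoint and the parameter names (`api_key`, `query`, `results`, `country`, `page`) are my assumptions about Scrapingdog's Google Search API, so check their documentation before relying on them. `build_search_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; verify it against the Scrapingdog documentation.
SCRAPINGDOG_GOOGLE_URL = "https://api.scrapingdog.com/google"


def build_search_url(api_key, query, results=40, country="us", page=0):
    """Build the GET URL for one search request (parameter names are assumptions)."""
    params = {
        "api_key": api_key,
        "query": query,
        "results": results,  # 40 here, as in the tutorial
        "country": country,
        "page": page,
    }
    # urlencode escapes the quotes, spaces, and @ signs in the query.
    return f"{SCRAPINGDOG_GOOGLE_URL}?{urlencode(params)}"


url = build_search_url(
    "YOUR_API_KEY",
    'site:instagram.com "fashion influencer" "@gmail.com"',
)
print(url)
```

The sketch only builds the URL and does not send anything, so you can inspect it safely before spending API credits.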
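To picture what the Iterator does, think of it as a loop that hands one search result at a time to the next module. Below is a minimal sketch with made-up data. The key name `organic_results` and the fields `title`, `link`, and `snippet` are assumptions about the response shape, not something confirmed in this tutorial.

```python
# Made-up response for illustration; real key and field names may differ.
sample_response = {
    "organic_results": [
        {
            "title": "Jane Doe (@janedoe)",
            "link": "https://www.instagram.com/janedoe/",
            "snippet": "Fashion influencer. Collabs: jane@example.com",
        },
        {
            "title": "Style Daily",
            "link": "https://www.instagram.com/styledaily/",
            "snippet": "Daily outfit inspiration.",
        },
    ]
}


def iterate_results(response, key="organic_results"):
    """Yield one bundle per search result, similar to Make's Iterator."""
    for position, item in enumerate(response.get(key, []), start=1):
        yield {"position": position, **item}


bundles = list(iterate_results(sample_response))
for bundle in bundles:
    print(bundle["position"], bundle["link"])
```

Each bundle then flows through the rest of the scenario on its own, which is why the AI module later runs once per search result.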

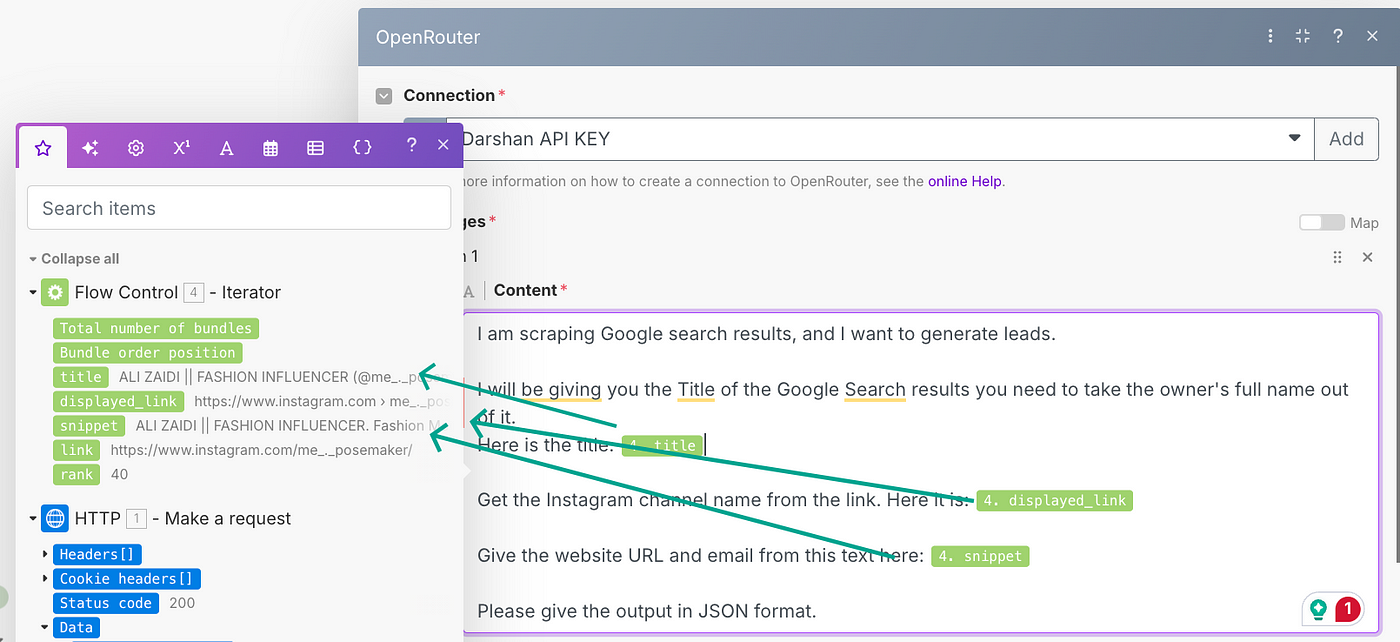

After the Iterator, I will pass this data to OpenRouter, whose AI module extracts the relevant details.

This is the prompt I am giving to the ‘User’ role ⬆️

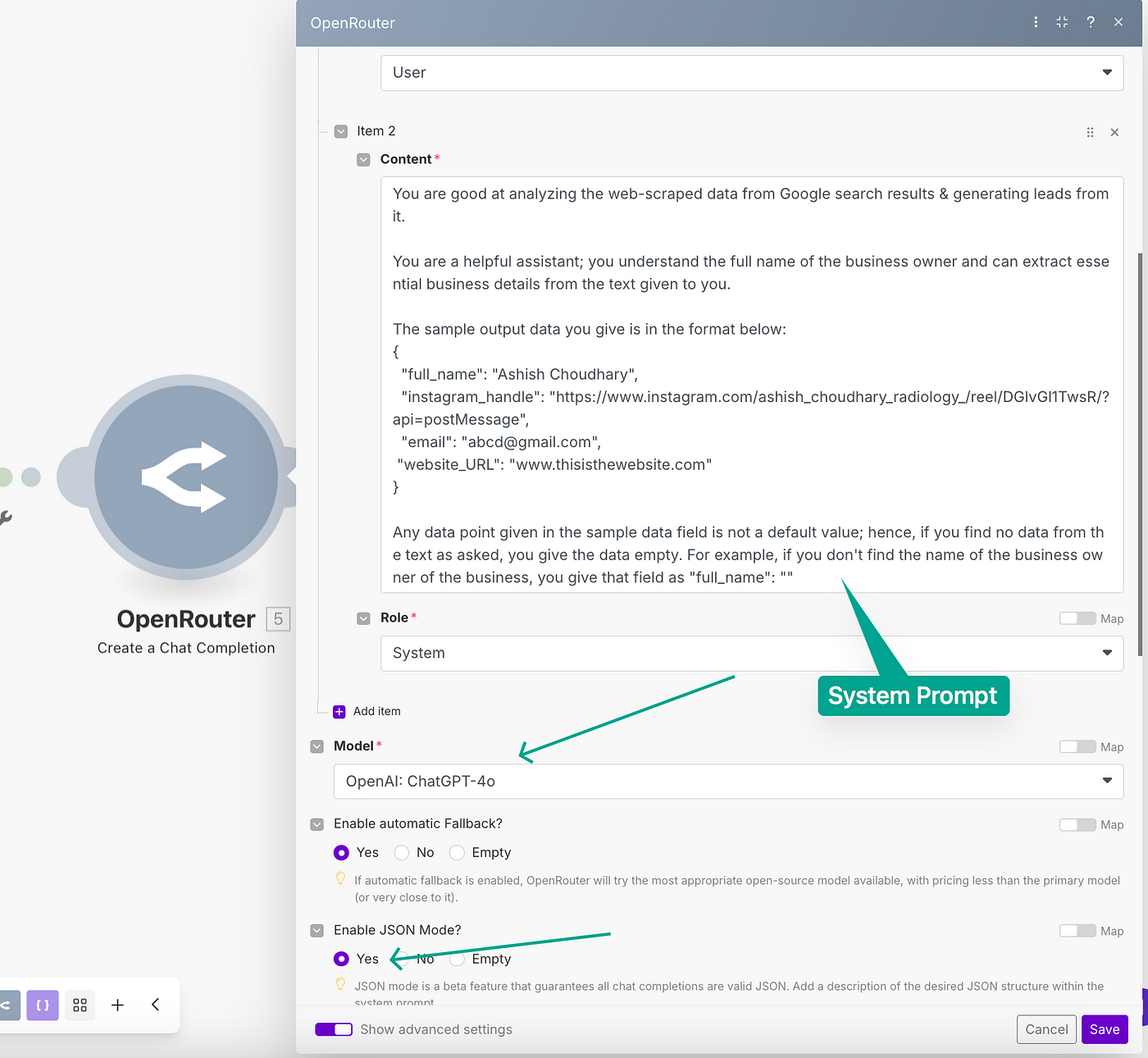

Now I will give one prompt to the ‘System’ Role ⬇️

The advanced settings are turned on in this module, and I have enabled JSON mode. In the system prompt, I have also included a sample of the output I want from this module.
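If you are curious what this module sends behind the scenes, the request body for OpenRouter's chat completions endpoint (`https://openrouter.ai/api/v1/chat/completions`) would look roughly like the sketch below. The prompt wording here is a stand-in of mine, not the exact prompts from the screenshots, and the model name is only a placeholder.

```python
import json


def build_chat_payload(search_result, model="openai/gpt-4o-mini"):
    """Build a chat request with a system prompt, a user prompt, and JSON mode."""
    # Stand-in system prompt: it describes the task and shows the output shape.
    system_prompt = (
        "Extract lead details from the Google search result you are given. "
        "Reply with JSON only, in this shape: "
        '{"full_name": "", "instagram_handle": "", "email": "", "website_URL": ""}'
    )
    # Stand-in user prompt: it carries the single search result from the Iterator.
    user_prompt = "Search result:\n" + json.dumps(search_result, ensure_ascii=False)
    return {
        "model": model,  # placeholder; use whichever model you picked in Make
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "response_format": {"type": "json_object"},  # JSON mode
    }


payload = build_chat_payload(
    {"title": "Jane Doe", "snippet": "Collabs: jane@example.com"}
)
print(json.dumps(payload, indent=2))
```

Putting the sample output inside the system prompt is what keeps the field names stable from one result to the next, which matters for the parsing step that follows.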

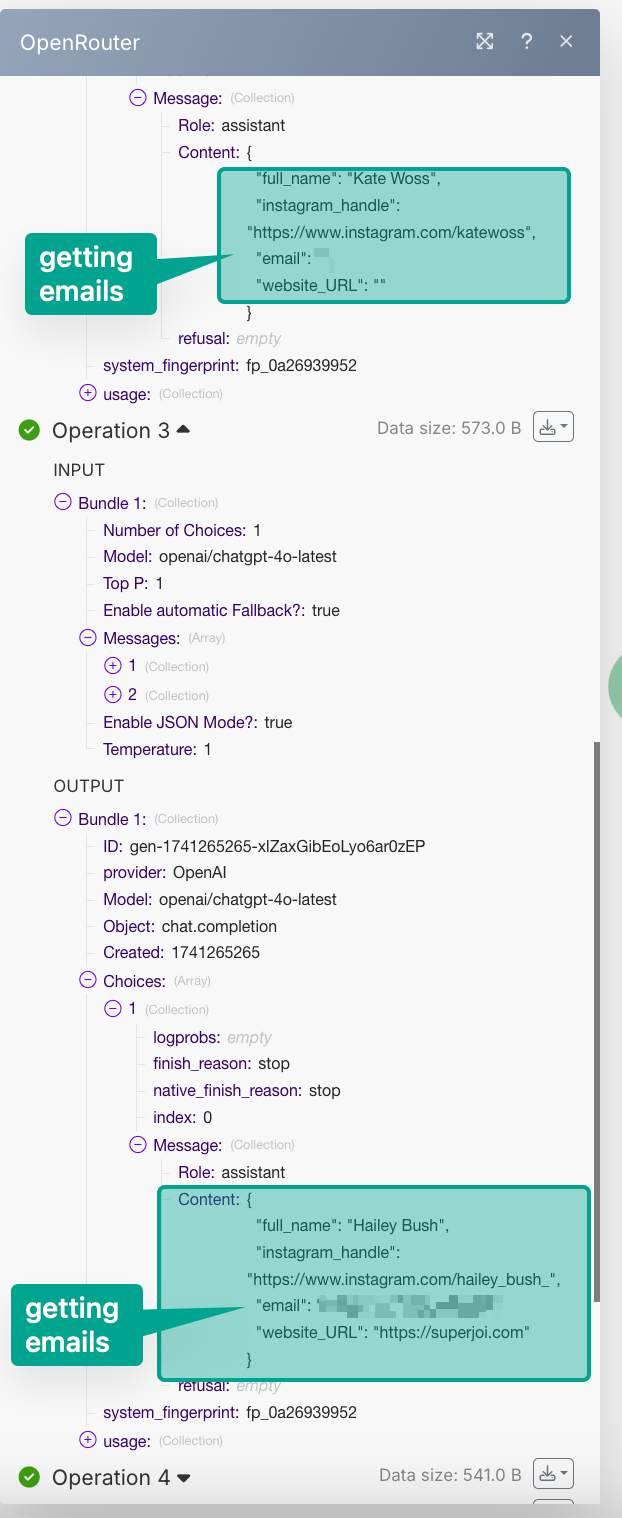

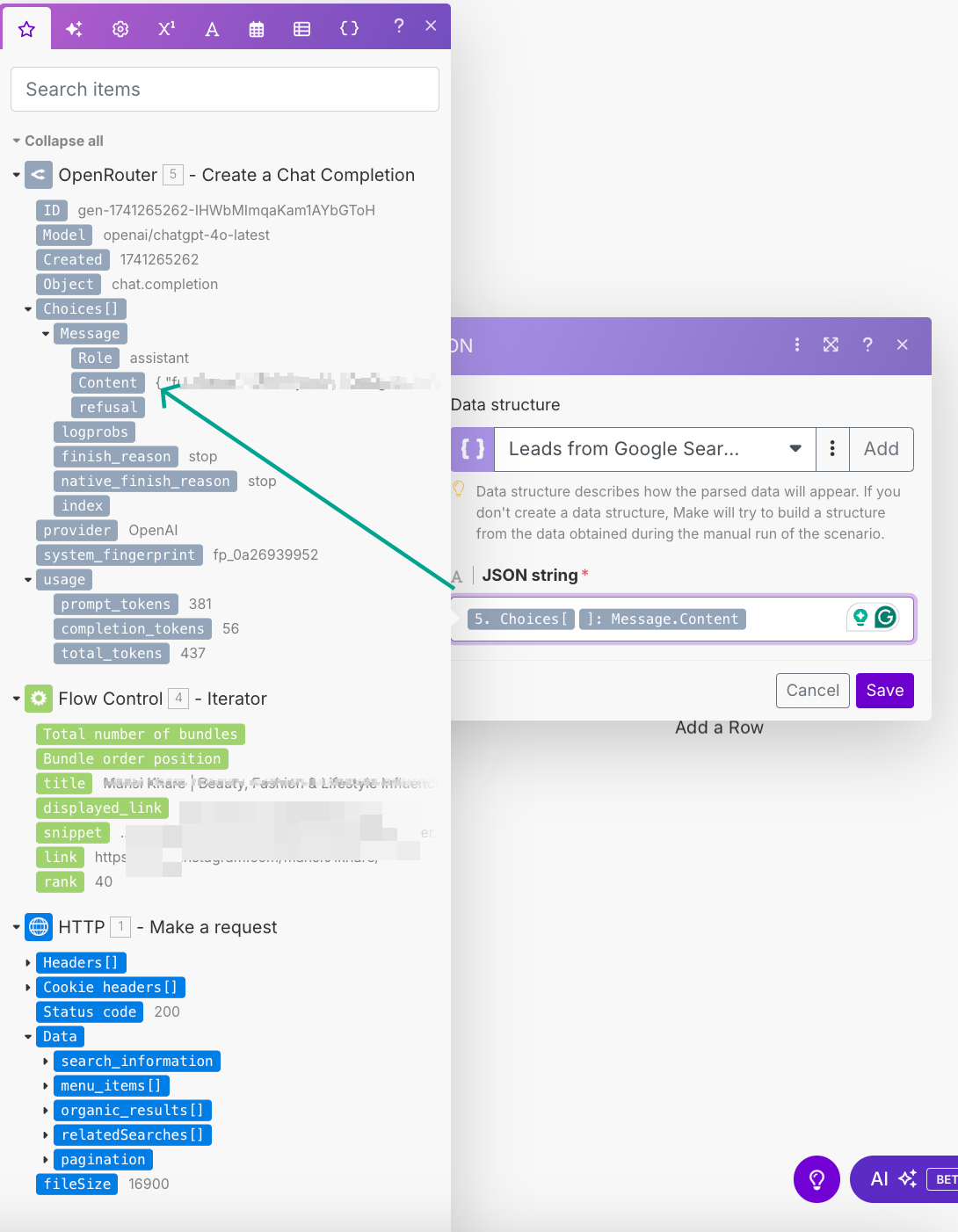

Let’s test our setup up to this AI module. We are getting the desired details, as you can see in the image below ⬇️

The data we have now is a JSON string, so the next step is to parse it.

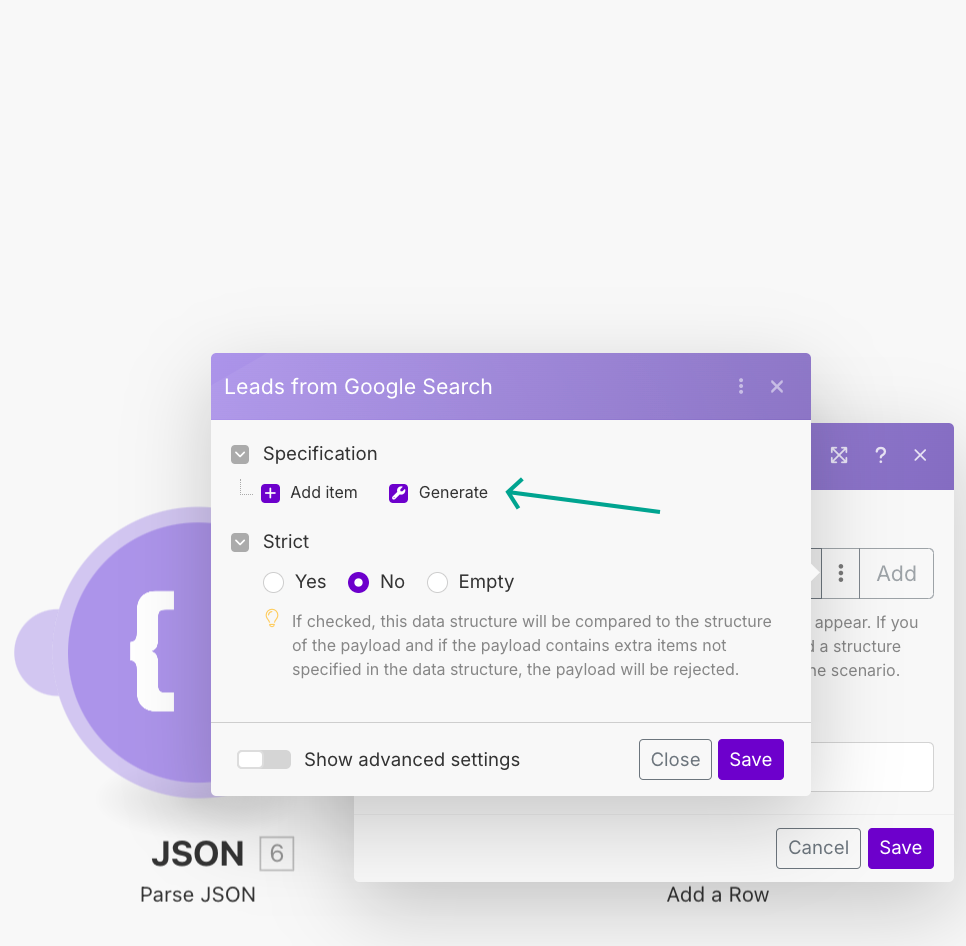

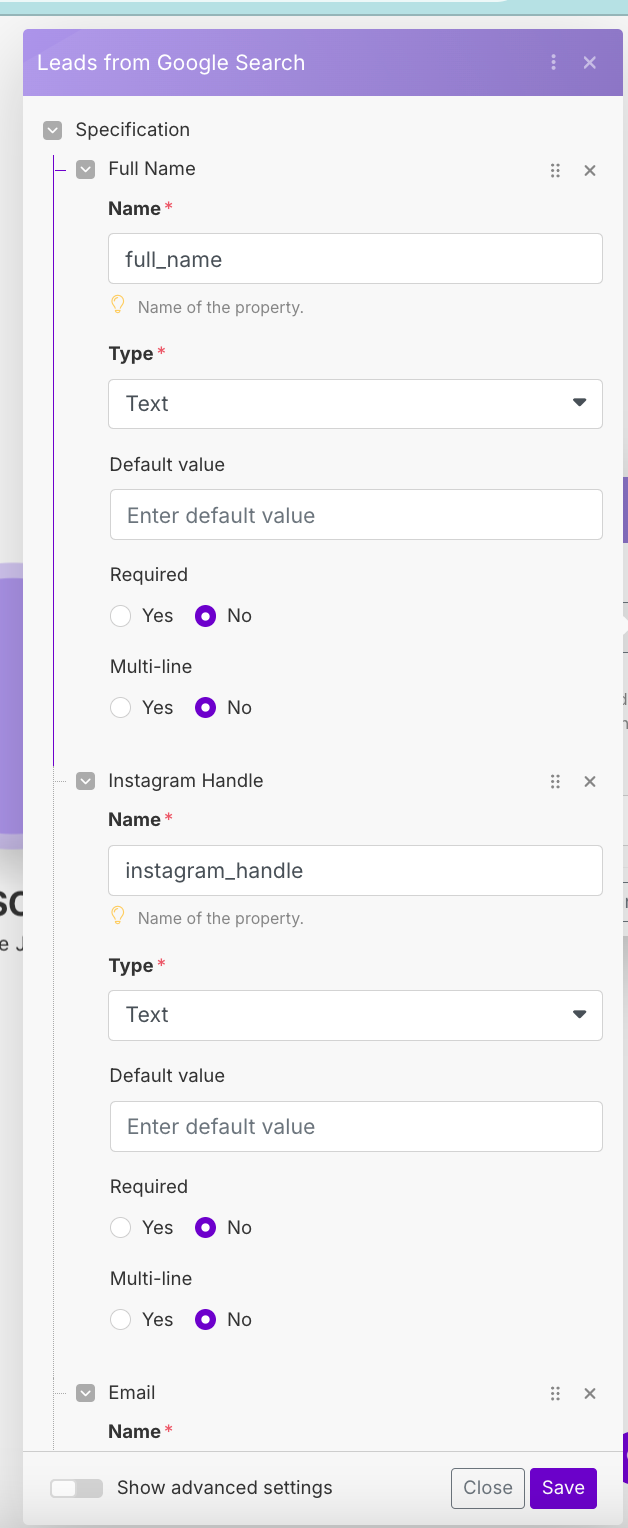

We will now parse this JSON data using a JSON parser. In this module, you need to provide sample data so it can work out the data points. We will reuse the sample we gave to OpenRouter.

Click ‘Generate’ & paste the sample data. Here is the data that we used in OpenRouter too:

{
  "full_name": "Ashish Choudhary",
  "instagram_handle": "https://www.instagram.com/ashish_choudhary_radiology_/reel/DGlvGl1TwsR/?api=postMessage",
  "email": "abcd@gmail.com",
  "website_URL": "www.thisisthewebsite.com"
}

This module will define each field’s name and type automatically.

You can also save this data structure for later use.

Now map the JSON string you want to parse in this module. ⬇️
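In plain code, this parsing step is a single call. Here is a small sketch that parses the same sample data and lists each field with its type, which is roughly what the ‘Generate’ button works out for you.

```python
import json

# The same sample data we pasted into the module, as a raw JSON string.
raw = """
{
  "full_name": "Ashish Choudhary",
  "instagram_handle": "https://www.instagram.com/ashish_choudhary_radiology_/reel/DGlvGl1TwsR/?api=postMessage",
  "email": "abcd@gmail.com",
  "website_URL": "www.thisisthewebsite.com"
}
"""

lead = json.loads(raw)

# Map each field name to the name of its Python type.
field_types = {name: type(value).__name__ for name, value in lead.items()}
print(field_types)
print(lead["email"])
```

Once the string is parsed, every field can be mapped on its own in later modules.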

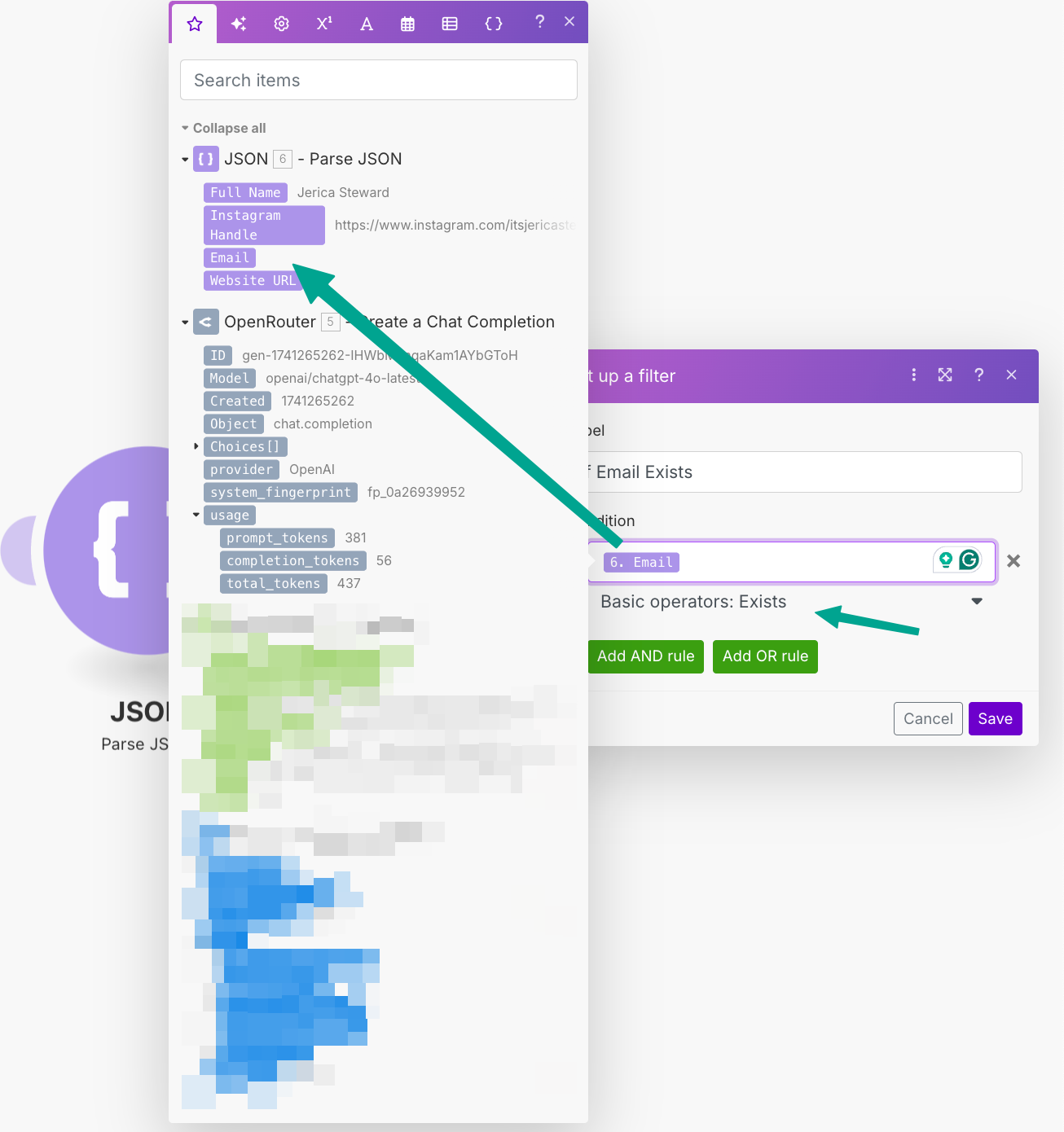

Once this data is parsed, we need to filter out results that have no email. Our AI module takes emails from the description of each search result, and not every description contains one.

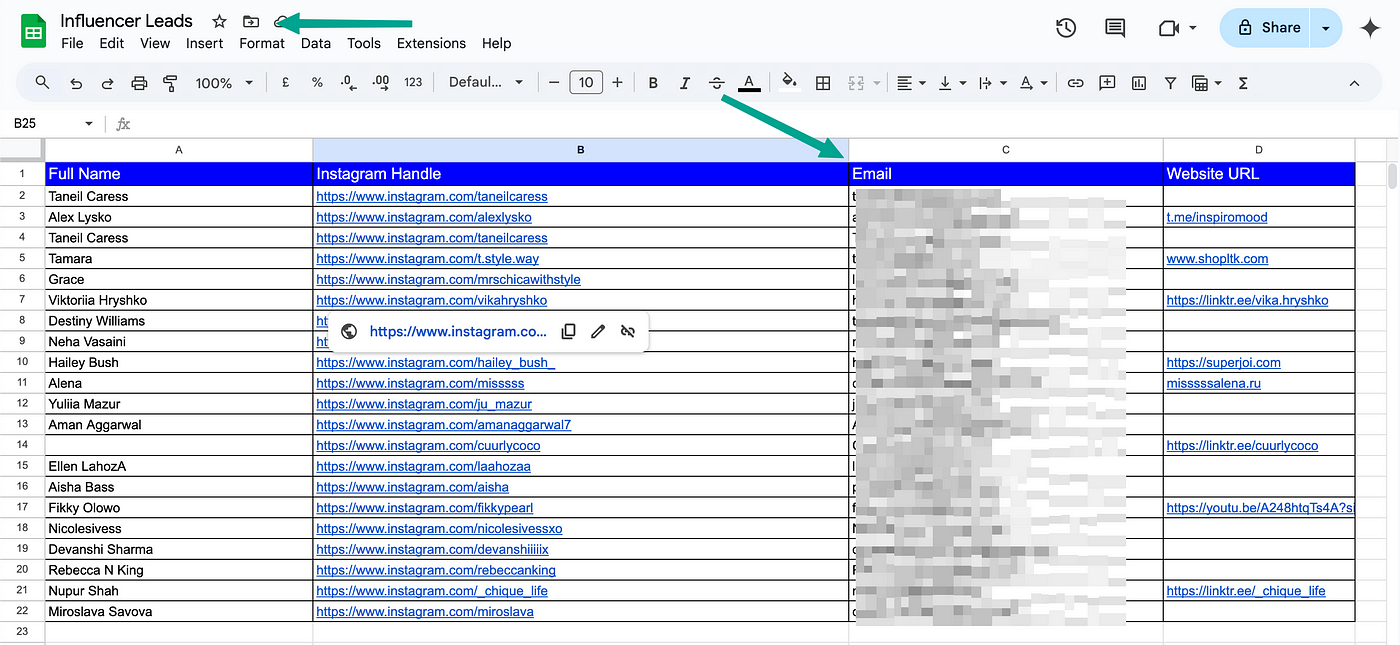

Finally, we send this data to our Google Sheet.

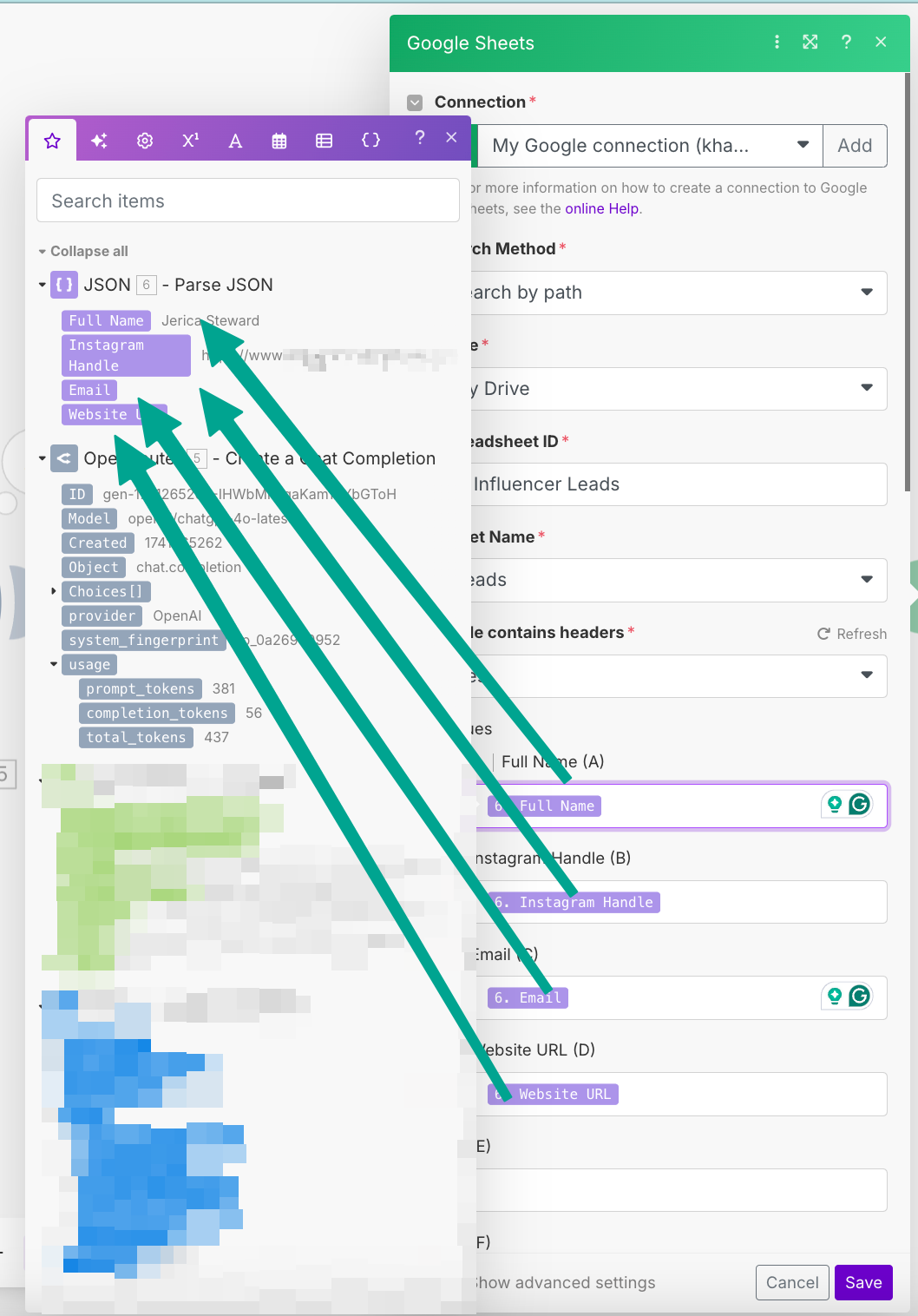

So the next module will be Google Sheets ➡️ Add a row, where we will store all our leads and their respective data points.

Our Google Sheet looks like the one below ⬇️

Here’s what the final connection with the Google Sheet looks like in Make ⬇️

Since we don’t want any lead without an email address, we add a filter before this module. The filter only lets a row through to our Google Sheet if the email exists.
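The filter and the row mapping can be sketched in a few lines. Note one difference: the Make filter here only checks that the email exists, while my sketch also checks that the value looks like an email, which is an extra precaution of mine. The sample leads and the column order are made up for illustration.

```python
import re

# Loose shape check: something@something.something, with no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def has_email(lead):
    """Return True when the lead carries a plausible email address."""
    email = (lead.get("email") or "").strip()
    return bool(EMAIL_RE.match(email))


# Made-up leads, as they might come out of the JSON parsing step.
leads = [
    {"full_name": "Ashish Choudhary", "instagram_handle": "ashish", "email": "abcd@gmail.com", "website_URL": ""},
    {"full_name": "No Email", "instagram_handle": "noemail", "email": "", "website_URL": ""},
    {"full_name": "Bad Value", "instagram_handle": "bad", "email": "N/A", "website_URL": ""},
]

# One list per sheet row, in an assumed column order.
rows = [
    [lead.get("full_name", ""), lead.get("instagram_handle", ""), lead["email"], lead.get("website_URL", "")]
    for lead in leads
    if has_email(lead)
]
print(rows)
```

Only the first lead survives the filter, so only that row would be added to the sheet.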

Great!! Now we have the email addresses for our desired niche.

At this point, you can either email these leads manually or build another automation that sends the emails for you.

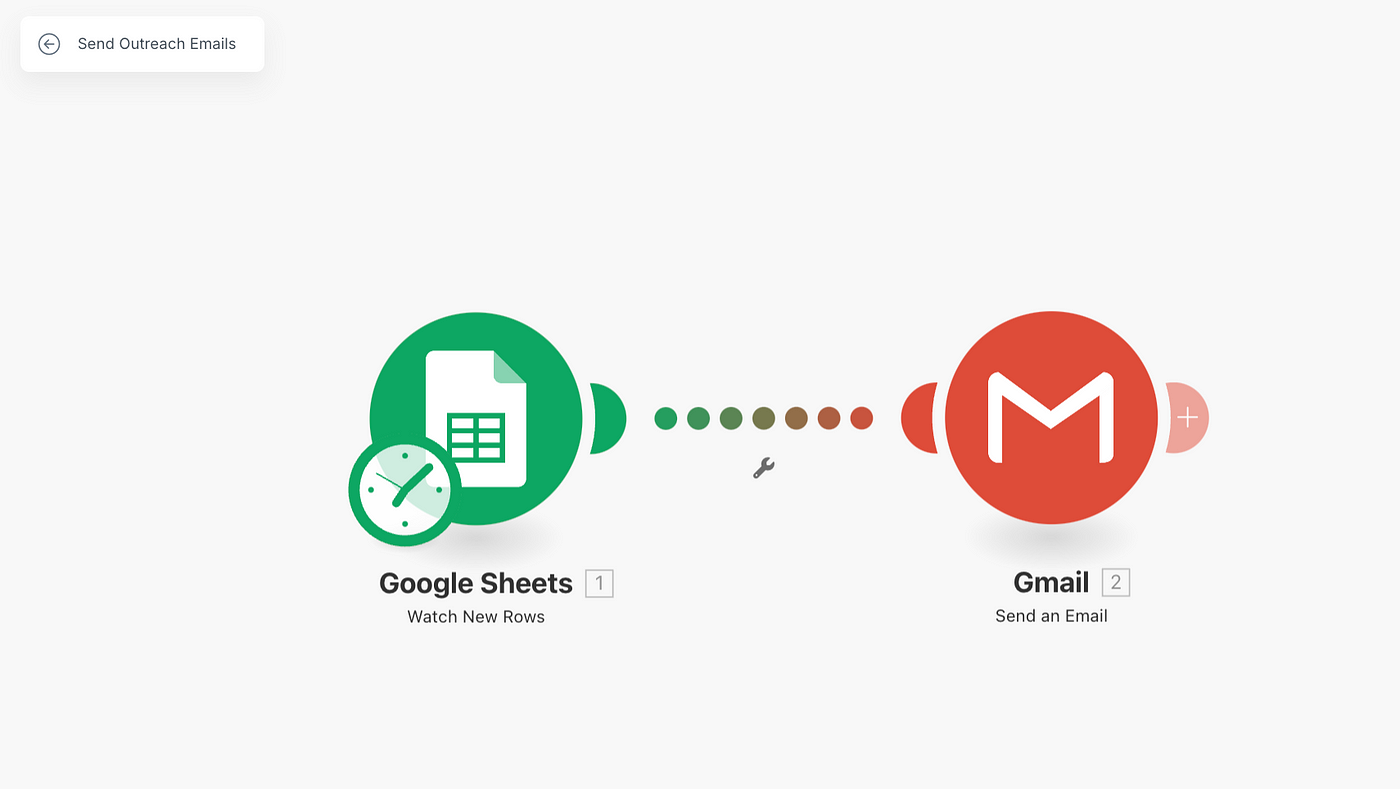

I have created that too. It is a very basic automation where you only need to connect 2 modules.

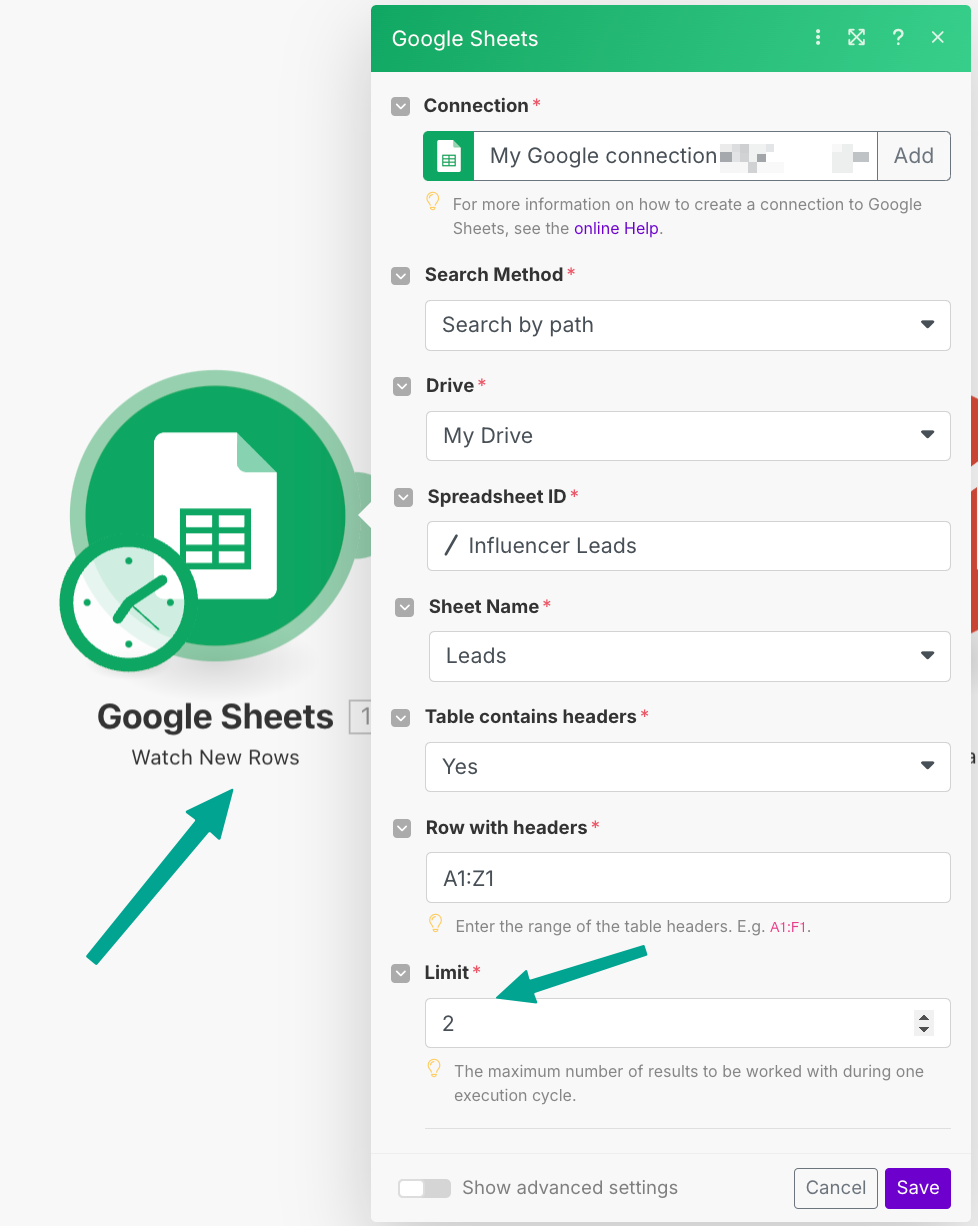

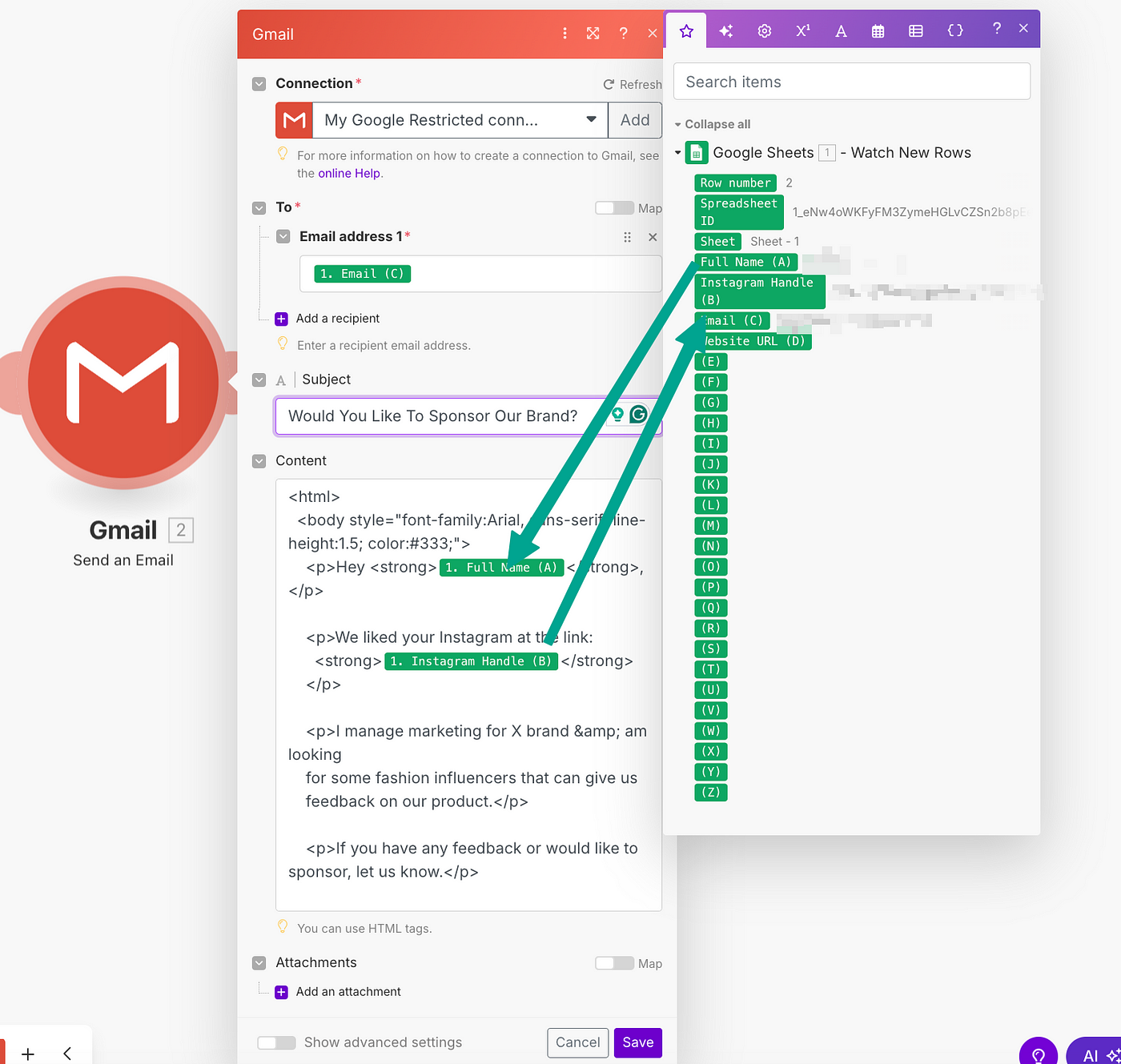

From the Google Sheet where we collected the emails, we send each lead an email about sponsorship or feedback for our fashion brand.

The trigger is Watch New Rows with the limit set to 2. Set the scenario to run every hour or at a longer interval.

Every hour, two outreach emails will be sent. This scheduling strategy helps prevent your account from being flagged as spam and ensures messages go out even while you’re asleep.
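It helps to know how long a full list takes at this pace. Here is a quick sketch of the arithmetic, with `runs_needed` and `batches` as hypothetical helper names.

```python
import math


def runs_needed(total_leads, per_run=2):
    """Number of scheduled runs needed to email every lead."""
    return math.ceil(total_leads / per_run)


def batches(items, size=2):
    """Split the lead list into the groups that each run would pick up."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


print(runs_needed(40))  # 20
print(list(batches(["a@x.com", "b@x.com", "c@x.com"])))
```

With 40 leads and a limit of 2, the scenario needs 20 hourly runs, so the whole list goes out in under a day. If you raise the limit, weigh the faster delivery against the higher risk of being flagged.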

Next, we will attach the email module. ⬇️

The sample email I am sending is on behalf of a fashion brand. You can adjust it for your niche.
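For reference, here is roughly how the same message could be composed in code with Python's standard library. The sender address, subject line, and body text are placeholders of mine, not the exact email from the scenario, and the sketch only builds the message without sending it.

```python
from email.message import EmailMessage


def build_outreach_email(lead, sender="hello@yourbrand.example"):
    """Compose one outreach email from a sheet row (wording is a placeholder)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = lead["email"]
    msg["Subject"] = "Collaboration idea for " + lead["full_name"]
    msg.set_content(
        f"Hi {lead['full_name']},\n\n"
        "We love your style and would like to talk about working together "
        "with our fashion brand.\n\n"
        "Best,\nThe team"
    )
    return msg


message = build_outreach_email(
    {"full_name": "Ashish Choudhary", "email": "abcd@gmail.com"}
)
print(message)
```

Mapping the name and email from the sheet into the template is the same thing you do in Make when you drop the row's fields into the email module.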

Here is the blueprint for both scenarios:

Additional Resources