TL;DR

- Python guide to scrape Booking.com hotel data (name, address, rating, room type, price, facilities) using Requests + BeautifulSoup.

- Process: GET with headers → build soup → iterate table rows via data-block-id → persist room name → extract prices; full code included.

- Fine for small runs; expect IP blocks at scale.

- For scale, switch to Scrapingdog: same parsing, reliable fetching, plus 1,000 free credits to start.

Web scraping offers a fast and efficient way to collect data from the internet. For the hotel industry, monitoring competitors’ pricing strategies is essential. As more hotels and OTAs flood the market, the competition is intensifying at an unprecedented pace.

So, how do you keep track of all these prices?

The answer is by scraping hotel prices. In this blog, we’ll learn how to scrape hotel prices from booking.com using Python.

Why use Python to Scrape booking.com

Python is a versatile language that is used extensively for web scraping, and it has dedicated libraries for the job.

It also has a large community, so help is usually easy to find when you get stuck. If you are new to web scraping with Python, I recommend going through this comprehensive guide to web scraping with Python.

Requirements

We need Python 3.x for this tutorial. I am assuming you have already installed it on your computer; if not, you can download it from here. You also need to install two more libraries, which we will use for web scraping later in this tutorial.

- Requests will help us make an HTTP connection with Booking.com.

- BeautifulSoup will help us create an HTML tree for smooth data extraction.

Setup

First, create a folder and then install the libraries mentioned above.

mkdir booking
cd booking
pip install requests
pip install beautifulsoup4

Inside this folder, create a Python file with any name you prefer. In this tutorial, we will scrape the following data points from the target website.

- Address

- Name

- Pricing

- Rating

- Room Type

- Facilities

Let’s Scrape Booking.com

Now that everything is set up, let’s make a GET request to the target website and see if it works.

import requests
from bs4 import BeautifulSoup

l = list()
o = {}
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36"}
target_url = "https://www.booking.com/hotel/us/the-lenox.html?checkin=2022-12-28&checkout=2022-12-29&group_adults=2&group_children=0&no_rooms=1&selected_currency=USD"
resp = requests.get(target_url, headers=headers)
print(resp.status_code)

The code is straightforward, but let me explain it briefly. First, we import the two libraries we installed earlier. Then we declare the headers and the target URL.

After sending a GET request to the target URL, a 200 status code confirms success. Any other status code means the request did not go through as expected.
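Printing the status code is fine for a first test. For repeated runs, you may want the script to react to non-200 responses on its own. Below is a minimal sketch under a few assumptions. `fetch_html` is a hypothetical helper, not part of Requests. It accepts any callable that behaves like `requests.get`, which also makes it testable without network access. The retry count and backoff values are arbitrary. Retrying smooths over transient errors, but it will not get you past a real block.

```python
import time
from typing import Callable


def fetch_html(get: Callable, url: str, headers: dict,
               retries: int = 3, backoff: float = 2.0,
               sleep: Callable[[float], None] = time.sleep) -> str:
    """Fetch url with get() and retry non-200 responses with exponential backoff.

    `get` is assumed to behave like requests.get, returning an object with
    .status_code and .text. The retry and backoff values are illustrative only.
    """
    last_status = None
    for attempt in range(retries):
        resp = get(url, headers=headers, timeout=30)
        if resp.status_code == 200:
            return resp.text
        last_status = resp.status_code
        # Wait backoff, 2*backoff, 4*backoff ... between attempts (not after the last one)
        if attempt < retries - 1:
            sleep(backoff * (2 ** attempt))
    raise RuntimeError(f"Giving up on {url}: last status {last_status}")
```

Usage with the variables above: `html = fetch_html(requests.get, target_url, headers)`, then `soup = BeautifulSoup(html, 'html.parser')`.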

How to scrape the data points

Since we have already decided which data points to scrape, let’s find where they live in the HTML by inspecting the page in Chrome.

For this tutorial, we will use the find() and find_all() methods of BeautifulSoup to locate the target elements. The DOM structure determines which method suits each element better.

Extracting hotel name and address

Let’s inspect the page in Chrome and find the DOM location of the name as well as the address.

As you can see, the hotel name can be found under the h2 tag with the class pp-header__title. For simplicity, let’s first create a soup variable with the BeautifulSoup constructor. We will extract all the data points from it.

soup = BeautifulSoup(resp.text, 'html.parser')

Here, BS4 uses Python’s built-in HTML parser to convert the HTML document into a tree of Python objects. Now, let’s use the soup variable to extract the name and address.

o["name"]=soup.find("h2",{"class":"pp-header__title"}).text

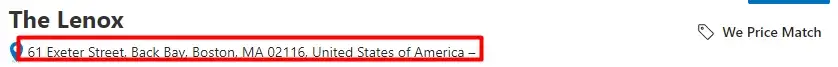

In a similar manner, we will extract the address.

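The address of the property is stored under the div tag with the class name f17adf7576. Class names like this one look auto-generated, so expect them to change when Booking.com updates its front end.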

o["address"]=soup.find_all("div",{"class":"f17adf7576"})[0].text.strip("\n")

Extracting rating and facilities

Once again, we will inspect the page to find the DOM location of the rating and facilities elements. The rating is stored in a div with the class ac4a7896c7.

o["rating"]=soup.find_all("div",{"class":"ac4a7896c7"})[0].text

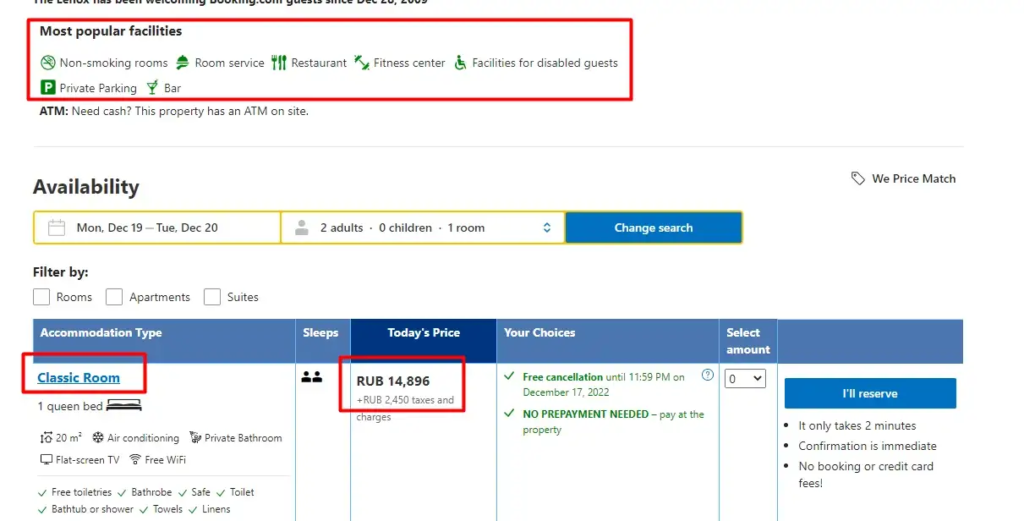
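The rating comes back as display text. To sort or compare hotels, you will probably want a number. Below is a minimal sketch of a hypothetical `parse_rating` helper. It assumes the text contains the score as a decimal such as "8.4", or "8,4" in comma-decimal locales. Check the actual text on your page before relying on it.

```python
import re


def parse_rating(text):
    """Return the first decimal score in text (e.g. '8.4' or '8,4') as a float.

    Returns None when the text contains no number. The accepted formats are
    assumptions about how the rating is displayed, not a documented contract.
    """
    match = re.search(r"\d+(?:[.,]\d+)?", text)
    if match is None:
        return None
    # Normalize a comma decimal separator to a dot before converting
    return float(match.group(0).replace(",", "."))
```

Usage: `o["rating_value"] = parse_rating(o["rating"])`. The key name `rating_value` is illustrative.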

Extracting facilities is a bit trickier. First, we collect all the facility HTML elements in a list. Then we loop over them and store each element’s text in the fac_arr list.

Let’s see how it can be done in two simple steps.

fac=soup.find_all("div",{"class":"important_facility"})

The fac variable now holds all the facility elements. Let’s extract their text one by one.

fac_arr = []
for f in fac:
    # Keep each facility's text, trimming newlines at both ends
    fac_arr.append(f.text.strip("\n"))
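Note that `.strip("\n")` only removes newline characters at the ends of the string. Leading or trailing spaces, repeated internal whitespace, and duplicate entries can survive. Below is a minimal cleanup sketch, assuming `fac_arr` holds plain strings. `clean_facilities` is a hypothetical helper, and the exact whitespace Booking.com emits may vary from page to page.

```python
def clean_facilities(texts):
    """Normalize whitespace, drop empty strings and duplicates, keep original order."""
    seen = set()
    cleaned = []
    for text in texts:
        # Collapse all runs of whitespace (spaces, tabs, newlines) into single spaces
        value = " ".join(text.split())
        if value and value not in seen:
            seen.add(value)
            cleaned.append(value)
    return cleaned
```

Usage: `fac_arr = clean_facilities(fac_arr)`.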

Extract Price and Room Types

This is the trickiest part of the entire tutorial. Booking.com’s DOM structure is quite intricate and requires careful inspection before you can reliably extract price and room type details.

The <tbody> tag contains all the relevant data. Inside it, each <tr> tag represents a row and holds all the information for a single listing or item, typically starting from the first column.

Next, as you dive deeper into the DOM, you’ll encounter multiple <td> tags within each <tr>. These <td> tags contain essential details like room type, pricing, taxes, and other booking information.

First, let’s find all the tr tags.

ids = list()
# find_all() returns an empty list (it does not raise) when there are no <tr> tags
tr = soup.find_all("tr")

You’ll notice that each <tr> tag comes with a data-block-id attribute. The next step is to extract all these IDs and store them in a list for further processing.

for row in tr:
    # .get() returns None when the row has no data-block-id attribute
    block_id = row.get("data-block-id")
    if block_id is not None:
        ids.append(block_id)
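You may want to check which rows carry a data-block-id without BeautifulSoup, for example to sanity-check a saved copy of the page. The same step can be done with the standard library’s `html.parser`. This is a swapped-in technique rather than what the tutorial uses. `BlockIdCollector` and `collect_block_ids` are hypothetical names.

```python
from html.parser import HTMLParser


class BlockIdCollector(HTMLParser):
    """Collect the data-block-id attribute of every <tr> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.ids = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; attrs is a list of (name, value)
        if tag == "tr":
            block_id = dict(attrs).get("data-block-id")
            if block_id is not None:
                self.ids.append(block_id)


def collect_block_ids(html: str) -> list:
    parser = BlockIdCollector()
    parser.feed(html)
    parser.close()
    return parser.ids
```

Usage: `collect_block_ids(resp.text)` should return the same IDs as the BeautifulSoup loop above.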

Once you’ve gathered all the data-block-id values, the process becomes more manageable. We can loop through each data-block-id and directly access their corresponding <tr> blocks to extract key details such as room types and pricing information.

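last_room = None  # most recent room type seen; used further below
for block_id in ids:
    k = {}  # holds the room and price for this row
    # soup.find() returns None (it does not raise) when no row matches
    allData = soup.find("tr", {"data-block-id": block_id})

The allData variable stores all the HTML for a particular data-block-id.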

Next, let’s navigate to the <td> elements located inside each <tr> block. Our initial focus will be on extracting the room type information, which is usually nested within a specific <td> cell inside each row.

try:
    rooms = allData.find("span", {"class": "hprt-roomtype-icon-link"})
except AttributeError:
    # allData is None when no row matched the ID
    rooms = None

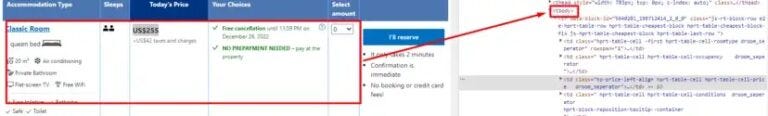

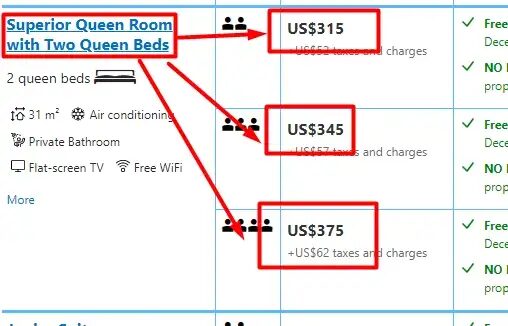

Here comes the tricky part. A room type can have multiple pricing options (e.g., refundable, non-refundable, or breakfast included). As the screenshots above show, the room type is mentioned only once, in the first of its rows. The following price rows belong to the same room type but have no room name of their own, so rooms comes back as None for them. To handle this, we keep the last non-empty room type and reuse it for the following pricing options until a new room type is encountered. This keeps every price linked to the correct room type.

if rooms is not None:
    # This row starts a new room type: remember it
    last_room = rooms.text.replace("\n", "")
# Rows without their own room name reuse the last room type seen
k["room"] = last_room

Here last_room will store the last value of rooms until we receive a new value.
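The carry-forward logic is easier to see without the HTML around it. Below is a minimal sketch on plain data. It assumes each row has already been reduced to a hypothetical `(room_name_or_None, price)` tuple in page order. `assign_room_types` is an illustrative helper, not part of the tutorial’s code.

```python
def assign_room_types(rows):
    """Attach a room type to every price row.

    `rows` is a list of (room_name_or_None, price) tuples in page order.
    A row whose room name is None (or empty) inherits the most recent
    non-empty room name; rows before the first named row get None.
    """
    last_room = None
    result = []
    for room, price in rows:
        if room:  # a new room type starts on this row
            last_room = room.strip()
        result.append({"room": last_room, "price": price})
    return result
```

For example, `[("Deluxe King", "$250"), (None, "$275"), ("Queen Room", "$199")]` maps the second price to "Deluxe King".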

Let’s extract the price now.

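Price is stored under the div tag with the class “bui-price-display__value prco-text-nowrap-helper prco-inline-block-maker-helper prco-f-font-heading”. Because this value contains spaces, BeautifulSoup matches it against the exact class attribute string, so the classes must appear in the same order on the page. Let’s use the allData variable to find it and extract the text.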

price = allData.find("div", {"class": "bui-price-display__value prco-text-nowrap-helper prco-inline-block-maker-helper prco-f-font-heading"})
k["price"] = price.text.replace("\n", "")
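The price is stored as display text. That is fine for printing but awkward for comparisons. Below is a minimal sketch that converts it to a number. It assumes a US-style format with comma thousands separators and a dot decimal, which seems likely with `selected_currency=USD`. Booking.com’s exact text, such as "US$" vs "$", may differ. `parse_price` is a hypothetical helper.

```python
import re
from decimal import Decimal


def parse_price(text):
    """Turn a displayed price such as 'US$1,234' into Decimal('1234').

    Assumes comma thousands separators and a dot decimal point.
    Returns None when no number is found in the text.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None
    # Drop thousands separators before converting
    return Decimal(match.group(0).replace(",", ""))
```

`Decimal` is used instead of `float` so that amounts like 245.50 are kept exactly.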

We have finally managed to scrape all the data elements that we were interested in.

Complete Code

You can extract other pieces of information, such as amenities and reviews, with a few more changes. You can also scrape other hotels by changing the hotel’s name segment in the URL (the-lenox in our example).
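To switch hotels or dates, you can build the URL from its parts instead of editing the string by hand. Below is a minimal sketch under stated assumptions. The query parameters mirror the ones in the tutorial’s target_url. The `/us/` path segment appears to be the hotel’s country code, which is an assumption here. `build_hotel_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def build_hotel_url(slug, checkin, checkout, adults=2, children=0,
                    rooms=1, currency="USD", country="us"):
    """Build a Booking.com hotel URL with the same shape as the tutorial's target_url.

    `slug` is the hotel's name segment (e.g. 'the-lenox'); dates are 'YYYY-MM-DD'.
    `country` is assumed to be the path segment before the slug.
    """
    query = urlencode({
        "checkin": checkin,
        "checkout": checkout,
        "group_adults": adults,
        "group_children": children,
        "no_rooms": rooms,
        "selected_currency": currency,
    })
    return f"https://www.booking.com/hotel/{country}/{slug}.html?{query}"
```

Calling `build_hotel_url("the-lenox", "2022-12-28", "2022-12-29")` reproduces the URL used in this tutorial.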

The code will look like this.

import requests
from bs4 import BeautifulSoup

l = list()     # final output: [rooms, hotel details, facilities]
g = list()     # one dict per room/price row
o = {}         # hotel name, address and rating
fac_arr = []   # facility names

headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36"}
target_url = "https://www.booking.com/hotel/us/the-lenox.html?checkin=2022-12-28&checkout=2022-12-29&group_adults=2&group_children=0&no_rooms=1&selected_currency=USD"

resp = requests.get(target_url, headers=headers)
soup = BeautifulSoup(resp.text, 'html.parser')

# Hotel-level details
o["name"] = soup.find("h2", {"class": "pp-header__title"}).text
o["address"] = soup.find_all("div", {"class": "f17adf7576"})[0].text.strip("\n")
o["rating"] = soup.find_all("div", {"class": "ac4a7896c7"})[0].text

# Facilities
fac = soup.find_all("div", {"class": "important_facility"})
for f in fac:
    fac_arr.append(f.text.strip("\n"))

# Collect the data-block-id of every room/price row
ids = list()
for row in soup.find_all("tr"):
    block_id = row.get("data-block-id")
    if block_id is not None:
        ids.append(block_id)
print("ids are ", len(ids))

last_room = None  # most recent room type seen
for block_id in ids:
    k = {}
    try:
        allData = soup.find("tr", {"data-block-id": block_id})
        rooms = allData.find("span", {"class": "hprt-roomtype-icon-link"})
        if rooms is not None:
            # This row starts a new room type
            last_room = rooms.text.replace("\n", "")
        # Rows without their own room name reuse the last room type
        k["room"] = last_room
        price = allData.find("div", {"class": "bui-price-display__value prco-text-nowrap-helper prco-inline-block-maker-helper prco-f-font-heading"})
        k["price"] = price.text.replace("\n", "")
    except AttributeError:
        # The row or its price element is missing: skip this row
        continue
    g.append(k)

l.append(g)
l.append(o)
l.append(fac_arr)
print(l)
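`print(l)` dumps a nested list, which is hard to reuse later. One option is to write one CSV row per room/price option, repeating the hotel-level fields on each row. Below is a minimal sketch using the standard `csv` module. It assumes `o` and `g` have the shapes built above. `write_rooms_csv` and the column names are illustrative choices.

```python
import csv


def write_rooms_csv(path, hotel, rooms):
    """Write one CSV row per room/price option.

    `hotel` is a dict like `o` (name, address, rating); `rooms` is a list
    like `g` (dicts with 'room' and 'price'). Missing keys become empty cells.
    """
    fields = ["name", "address", "rating", "room", "price"]
    with open(path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=fields)
        writer.writeheader()
        for room in rooms:
            writer.writerow({
                "name": hotel.get("name"),
                "address": hotel.get("address"),
                "rating": hotel.get("rating"),
                "room": room.get("room"),
                "price": room.get("price"),
            })
```

Usage: `write_rooms_csv("lenox_rooms.csv", o, g)`.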

Keep in mind that this technique is good for small-scale scraping, perhaps a few hundred requests. Beyond that, Booking.com will likely detect the pattern and start blocking requests from your IP.

To avoid this situation, you are advised to use a web scraping API like Scrapingdog.
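Whichever way you fetch pages, spacing out your requests reduces the load you put on the site. Below is a minimal sketch of a throttle that enforces a slightly randomized minimum gap between requests. The interval and jitter values are arbitrary assumptions, not limits published by Booking.com. A throttle alone does not guarantee you will not be blocked. `Throttle` is a hypothetical helper. Its clock and sleep are injectable so it can be tested.

```python
import random
import time


class Throttle:
    """Wait so that consecutive calls to wait() are at least min_interval (+ jitter) apart."""

    def __init__(self, min_interval=5.0, jitter=2.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.jitter = jitter
        self.clock = clock
        self.sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self.clock()
        if self._last is not None:
            # Random extra delay makes the request pattern less regular
            gap = self.min_interval + random.uniform(0, self.jitter)
            remaining = gap - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                now += remaining
        self._last = now
```

Usage: create `throttle = Throttle()` once, then call `throttle.wait()` right before each `requests.get(...)`.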

Scraping Booking.com with Scrapingdog

The first step is to sign up for the free pack, which provides 1,000 API credits.

Click on General Scraper and enter your target Booking.com link. This generates a ready-to-use Python snippet on the right.

Now just copy this Python code and paste it into your working environment. Of course, your parsing code will remain the same as earlier.

import requests
from bs4 import BeautifulSoup

l = list()     # final output: [rooms, hotel details, facilities]
g = list()     # one dict per room/price row
o = {}         # hotel name, address and rating
fac_arr = []   # facility names

target_url = "https://www.booking.com/hotel/us/the-lenox.html?checkin=2025-12-28&checkout=2025-12-29&group_adults=2&group_children=0&no_rooms=1&selected_currency=USD"

# Fetch the page through Scrapingdog instead of requesting Booking.com directly
resp = requests.get("https://api.scrapingdog.com/scrape", params={
    'api_key': 'your-api-key',
    'url': target_url,
    'dynamic': 'false',
})
print(resp.status_code)

soup = BeautifulSoup(resp.text, 'html.parser')

# Hotel-level details
o["name"] = soup.find("h2", {"class": "pp-header__title"}).text
o["address"] = soup.find_all("div", {"class": "f17adf7576"})[0].text.strip("\n")
o["rating"] = soup.find_all("div", {"class": "ac4a7896c7"})[0].text

# Facilities
fac = soup.find_all("div", {"class": "important_facility"})
for f in fac:
    fac_arr.append(f.text.strip("\n"))

# Collect the data-block-id of every room/price row
ids = list()
for row in soup.find_all("tr"):
    block_id = row.get("data-block-id")
    if block_id is not None:
        ids.append(block_id)
print("ids are ", len(ids))

last_room = None  # most recent room type seen
for block_id in ids:
    k = {}
    try:
        allData = soup.find("tr", {"data-block-id": block_id})
        rooms = allData.find("span", {"class": "hprt-roomtype-icon-link"})
        if rooms is not None:
            # This row starts a new room type
            last_room = rooms.text.replace("\n", "")
        # Rows without their own room name reuse the last room type
        k["room"] = last_room
        price = allData.find("div", {"class": "bui-price-display__value prco-text-nowrap-helper prco-inline-block-maker-helper prco-f-font-heading"})
        k["price"] = price.text.replace("\n", "")
    except AttributeError:
        # The row or its price element is missing: skip this row
        continue
    g.append(k)

l.append(g)
l.append(o)
l.append(fac_arr)
print(l)

With Scrapingdog you will be able to scrape millions of pages from booking.com without getting blocked.

Here are five quick key takeaways 👇

- Booking.com data can be scraped by first collecting hotel listing URLs from search results.

- Individual hotel pages contain structured data like price, location, amenities, and ratings.

- Reviews and guest feedback can be extracted using review endpoints and pagination.

- Booking.com has anti-bot protections, so scraping at scale requires proper tools or APIs.

- The data is useful for price monitoring, market research, and travel analysis.

Conclusion

Scraping booking.com using Python is a powerful way to collect hotel data like room types and pricing. However, scraping at scale often results in IP blocks and anti-bot challenges from booking.com. While this tutorial showed you how to get started with BeautifulSoup, handling large-scale scraping manually is a hassle.

This is where Scrapingdog comes in. It helps bypass restrictions and scrape millions of booking.com pages reliably, saving you time and infrastructure costs. So, when your Python scraper hits a wall, Scrapingdog keeps the data flowing smoothly.

I have also scraped Expedia using Python here. Do check it out too!

But scraping at scale is not possible with this plain Requests setup. After a while, Booking.com will block your IP and your data pipeline will stop. And since price monitoring means scraping the same hotels again and again, you are likely to hit that wall sooner or later.

Frequently Asked Questions (FAQs)

1. How can I scrape Booking.com hotel prices with Python?

You can scrape Booking.com hotel prices with Python, Requests, and BeautifulSoup. Fetch the hotel page, parse the HTML, and extract details like price, room type, hotel name, and rating.

2. What data can a Booking.com scraper collect?

A Booking.com scraper can collect hotel name, address, rating, room type, room price, and facilities. With extra parsing, it can also collect reviews and amenities.

3. Why does Booking.com block Python scrapers?

Booking.com blocks scrapers to prevent bot traffic and repeated automated requests. If you scrape too many pages from one IP, your script can hit rate limits or IP bans.

4. Is it legal to scrape Booking.com hotel prices?

Scraping publicly available hotel data is usually possible, but you should still check Booking.com’s terms and scrape responsibly. Avoid heavy request volumes or anything that could disrupt the platform.

Additional Resources

Here are a few additional resources that you may find helpful during your web scraping journey: