TL;DR

- Python guide to scrape Google Shopping; explains page structure and legality.

- Use Scrapingdog’s Google Shopping API to get JSON (title, price, rating, reviews, source) with geo params.

- Export results to CSV; step-by-step code included.

- Why API: it handles scraping + parsing and keeps pipelines stable at scale.

In a cut-throat competitive environment, leaving web scraping out of your marketing and monitoring strategy makes it harder to keep a check on your competitors. Extracting publicly available data gives you competitive leverage and helps you make sound strategic decisions to expand your foothold in the market.

In this tutorial, we will be scraping Google Shopping Results using Python. We will also explore the benefits and solutions to the problems that might occur while gathering data from Google Shopping.

Why Scrape Google Shopping?

Google Shopping, formerly known as Google Product Search or Google Shopping Search, is used for browsing products from different online retailers and sellers for online purchases.

Consumers and retailers benefit from Google Shopping, making it a valuable e-commerce tool. Consumers can compare and select different ranges of products, which helps retailers by increasing their discoverability on the platform and potentially driving more sales.

Scraping Google Shopping gives you the following benefits:

Price Monitoring: Scrape prices from Google Shopping to track a product across multiple sources and find the cheapest one, saving customers money.

Product Information: Get detailed information about products from Google Shopping, and compare their reviews and features with those of other products to find the best among them.

Product Availability: Use Google Shopping data to monitor the availability of a set of products instead of manually checking each source, which consumes valuable time.

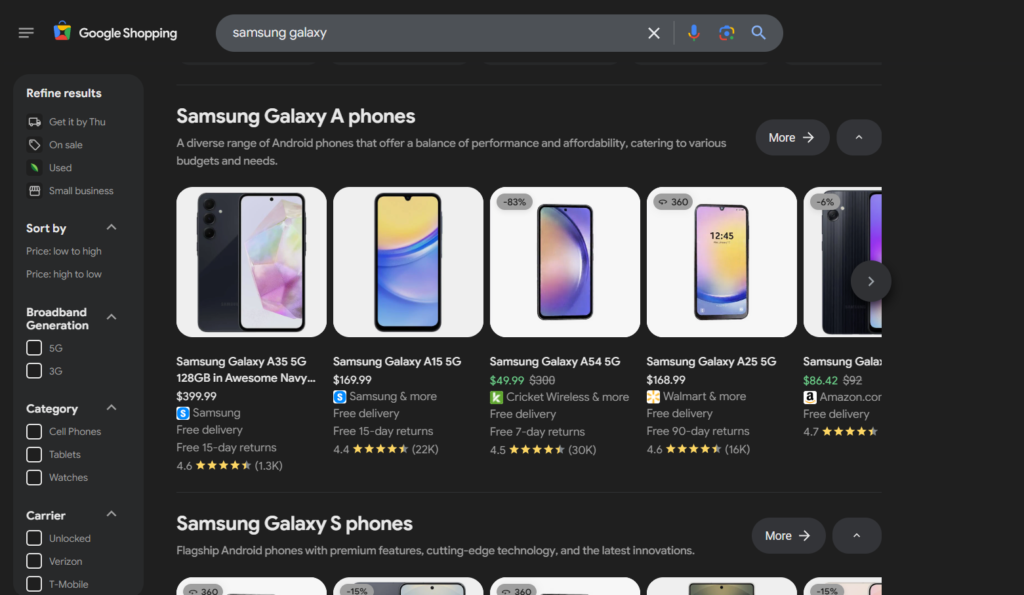

Understanding Google Shopping and Product Page

Before extracting data from the Google Shopping page, it is important to understand its structure. The image below includes all the data points for the Google search query “Samsung Galaxy”.

Search Page⬇️

Products List: the products from various online retailers that are relevant to the search query.

Filters: options to refine the search results by color, size, rating, etc.

Sorting Options: options to sort products by price, customer rating, etc.

Finally, the products under the list consist of titles, pricing, ratings, reviews, sources, and much more as data points.

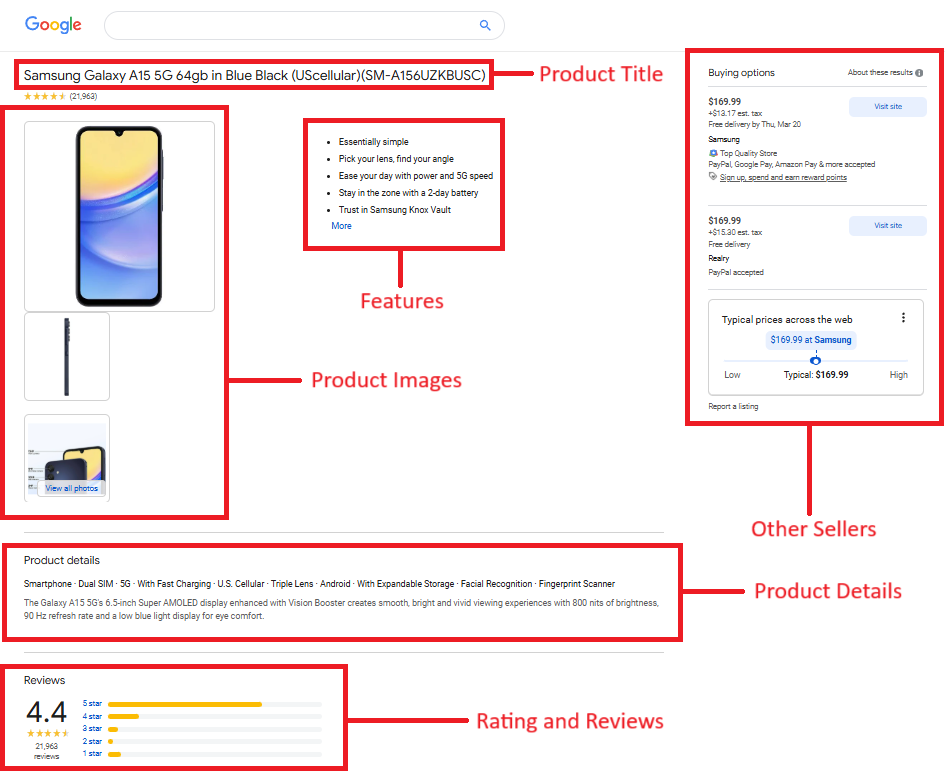

Product Page⬇️

Navigating the product page gives you detailed information about the product:

- Product Title

- Pricing

- Rating and Reviews

- Features

- Description

- Buying Options

Legality of Scraping Google Shopping

This is one of the most common questions asked by our customers and readers: Is scraping Google Shopping legal?

Yes, scraping publicly available data from Google Shopping is legal. However, its legality also depends on how the data is used. If the data is used for unethical purposes, there may be legal challenges.

You can read more about the legality of web scraping in this article.

Scraping Google Shopping results Using Scrapingdog Google Shopping API

Although scraping Google Shopping on a small scale is feasible, Google’s recent updates require advanced backend methods to extract data at scale. Moreover, even if you can obtain the HTML data, parsing it presents another major challenge — it is time-consuming and requires significant effort to maintain.

You might succeed in scraping at scale, but your data pipeline may break at irregular intervals, preventing a seamless web scraping experience. It is better to choose a reliable solution like Scrapingdog’s Google Shopping API, which handles both scraping and parsing, ensuring a stable and efficient data pipeline.

So, let’s begin with scraping Google Shopping using Scrapingdog.

Install Libraries

To scrape Google Shopping results, we need an HTTP library to send requests to the API.

Before starting, make sure your Python project is set up and the Requests package is installed. You can follow the Requests documentation or simply run the command below.

pip install requests

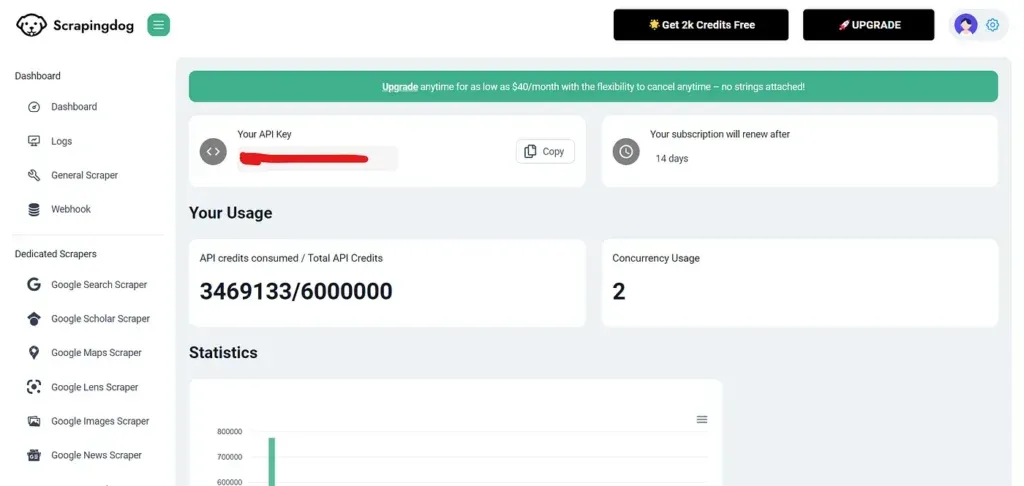

Get an API Key From Scrapingdog

As we are using Scrapingdog’s Google Shopping Scraper API for this tutorial, we would also need an API Key to extract the shopping results.

Getting an API Key from Scrapingdog is easy. You have to register on its website, and after that, you will be directed to its dashboard, where you will get your API Key.
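Rather than hard-coding the key in every script, one option is to read it from an environment variable. The sketch below assumes a variable named SCRAPINGDOG_API_KEY; that name is chosen for illustration and is not something Scrapingdog prescribes.

```python
import os


def load_api_key(env=None, var_name="SCRAPINGDOG_API_KEY"):
    """Return the API key stored in an environment variable.

    var_name is a hypothetical name picked for this tutorial.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your API key")
    return key


if __name__ == "__main__":
    # A plain dict is passed here only for demonstration;
    # call load_api_key() with no arguments to read os.environ.
    print(load_api_key({"SCRAPINGDOG_API_KEY": "APIKEY"}))
```

The returned value can then be used wherever the examples in this tutorial show 'APIKEY'.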

Process

Search Page

It is good practice to decide in advance which data points you need before writing any code. This tutorial covers the following:

- Title

- Rating

- Reviews

- Pricing

- Source
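These data points can be modelled as a small record type. The sketch below is one possible shape: the class name ShoppingResult and the helper from_api_item are made up for this example, and the keys it reads (title, rating, reviews, price, source) are the ones that appear in the API response shown later in this tutorial.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShoppingResult:
    """One product row with the five data points covered in this tutorial."""
    title: str
    rating: Optional[float]
    reviews: Optional[str]
    price: Optional[str]
    source: Optional[str]

    @classmethod
    def from_api_item(cls, item: dict) -> "ShoppingResult":
        # .get() keeps missing fields as None instead of raising KeyError
        return cls(
            title=item.get("title", ""),
            rating=item.get("rating"),
            reviews=item.get("reviews"),
            price=item.get("price"),
            source=item.get("source"),
        )


if __name__ == "__main__":
    sample = {"title": "Samsung Galaxy S24 Ultra", "source": "Walmart",
              "price": "$897.00", "rating": 4.7, "reviews": "83K"}
    print(ShoppingResult.from_api_item(sample))
```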

Since we have completed the setup, we will import our previously installed libraries and define the parameters to be passed with the API request.

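import requests

# Pass the query as plain text; requests URL-encodes the space for us
payload = {'api_key': 'APIKEY', 'query': 'nike shoes', 'country': 'us'}

# A timeout keeps the script from hanging if the API does not respond
resp = requests.get('https://api.scrapingdog.com/google_shopping', params=payload, timeout=60)
resp.raise_for_status()  # stop early on an HTTP error status

print(resp.text)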

We have passed only one geolocation parameter, country. You can add more parameters from the API documentation to personalize the results. And don’t forget to put your API key in the code above.
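To see how the payload maps onto the request, the sketch below builds the same URL with the standard library. The function name build_shopping_url is hypothetical, and only the api_key, query, and country parameters used above are included.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.scrapingdog.com/google_shopping"


def build_shopping_url(api_key, query, country="us"):
    """Return the full request URL for a Google Shopping search."""
    params = {"api_key": api_key, "query": query, "country": country}
    # urlencode escapes special characters and turns spaces into '+'
    return f"{BASE_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_shopping_url("APIKEY", "nike shoes"))
```

A space in the query is encoded as +, while a literal + is escaped as %2B, so passing the search phrase as plain text is the safer habit. This helper only illustrates the encoding; the requests script above is the program that actually calls the API.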

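Run this program in your terminal to obtain the shopping search results. The excerpt below shows results for Samsung Galaxy products; a "nike shoes" query returns different products in the same structure.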

"shopping_results": [

{

"title": "Samsung Galaxy S24 Ultra",

"product_link": "https://google.com/shopping/product/12202098061423044315",

"product_id": "12202098061423044315",

"scrapingdog_product_link": "https://api.scrapingdog.com/google_product?product_id=12202098061423044315&api_key=APIKEY&country=us",

"source": "Walmart",

"price": "$897.00",

"extracted_price": 897,

"old_price": "$1,300",

"old_price_extracted": 1300,

"rating": 4.7,

"reviews": "83K",

"delivery": "Free delivery",

"tag": "SAVE 30%",

"thumbnail": "https://encrypted-tbn1.gstatic.com/shopping?q=tbn:ANd9GcRISGAkYBOo98_hCccl4zO2d5qI2Bibp4eGRTl2RzwGXOc0U_DYaGg1pxHDJMHoRMo4jeKE9QnemEqDvdnhvMCDTIaw2PDueg",

"position": 1

},

{

"title": "Samsung Galaxy Z Fold6",

"product_link": "https://google.com/shopping/product/8175522653811384859",

"product_id": "8175522653811384859",

"scrapingdog_product_link": "https://api.scrapingdog.com/google_product?product_id=8175522653811384859&api_key=APIKEY&country=us",

"source": "Amazon.com - Seller",

"price": "$1,263.84",

"extracted_price": 1263.84,

"old_price": "$1,900",

"old_price_extracted": 1900,

"rating": 4.5,

"reviews": "38K",

"delivery": "Free delivery",

"tag": "SAVE 33%",

"thumbnail": "https://encrypted-tbn1.gstatic.com/shopping?q=tbn:ANd9GcT6Q92jIWN6mdQ0Pa1fysIzHj-UWDMchic6_nirV-guvw9ePm1Dj1JXzSr3rhBZ3MMS9O8waJyo2eAOpYkMQwLOYJ5_4HSrnEiRvpX6dtmt9qiK-rx9AXQAnyw",

"position": 2

},

...

The results include the search filters and the list of products as shown on the Google Shopping page. The response above is truncated, since the full output is too long to show here.
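Some fields arrive as display strings, such as "83K" for reviews. If you need numbers, a small helper can normalise them. This is only a sketch: the function name is made up, and it assumes the only suffixes are K and M, which goes beyond what the excerpt above confirms.

```python
def parse_review_count(text):
    """Convert a display string such as '83K' or '21,165' to an int.

    Assumes only the K (thousand) and M (million) suffixes occur.
    """
    cleaned = text.strip().replace(",", "").upper()
    multipliers = {"K": 1_000, "M": 1_000_000}
    if cleaned and cleaned[-1] in multipliers:
        return int(round(float(cleaned[:-1]) * multipliers[cleaned[-1]]))
    return int(cleaned)


if __name__ == "__main__":
    for raw in ("83K", "38K", "21,165"):
        print(raw, "->", parse_review_count(raw))
```

Keep in mind that a value like "83K" is already rounded by Google, so the converted number is an approximation rather than an exact count.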

Here’s a short video walkthrough of how to use Scrapingdog’s Google Shopping API ⬇️

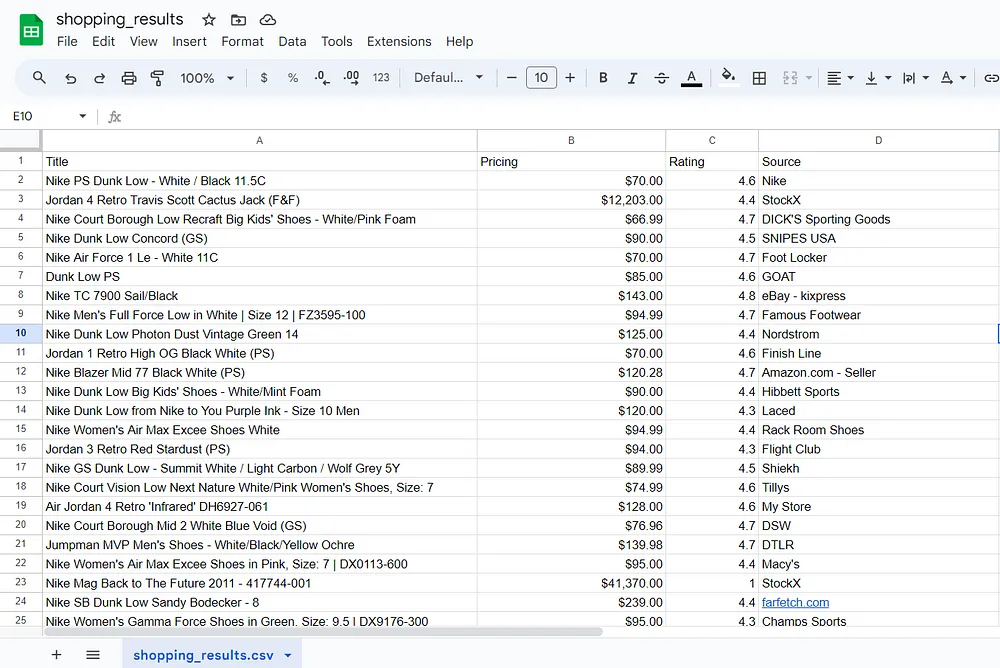

Exporting the list of products to CSV

Finally, we will export the list of products to a CSV file. We will get the product title, pricing, rating, and source from the extracted data. Here is the complete code to get the output in a CSV file.

import csv

import requests

payload = {'api_key': 'APIKEY', 'query': 'nike shoes', 'country': 'us'}

resp = requests.get('https://api.scrapingdog.com/google_shopping', params=payload, timeout=60)
resp.raise_for_status()  # stop early on an HTTP error status
data = resp.json()

with open('shopping_results.csv', 'w', newline='', encoding='utf-8') as csvfile:
    csv_writer = csv.writer(csvfile)

    # Write the headers
    csv_writer.writerow(["Title", "Pricing", "Rating", "Source"])

    # Write the data; .get() leaves a cell empty when a product lacks a field
    for result in data.get("shopping_results", []):
        csv_writer.writerow([result.get("title"), result.get("price"), result.get("rating"), result.get("source")])

print('Done writing to CSV file.')

After opening the CSV file shopping_results.csv, we write the headers Title, Pricing, Rating, and Source as the first row. We then loop through each shopping result and write its title, price, rating, and source to the file using csv_writer.writerow.

Running this program creates shopping_results.csv in the directory you run it from.
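The same response can feed the price monitoring use case described earlier. The sketch below picks the lowest-priced entry using the extracted_price field from the sample response. The function name cheapest_offer is hypothetical, and entries without a numeric extracted_price are skipped.

```python
def cheapest_offer(shopping_results):
    """Return the result dict with the lowest extracted_price, or None."""
    priced = [
        item for item in shopping_results
        if isinstance(item.get("extracted_price"), (int, float))
    ]
    if not priced:
        return None
    return min(priced, key=lambda item: item["extracted_price"])


if __name__ == "__main__":
    # Trimmed-down entries from the sample response shown earlier
    results = [
        {"title": "Samsung Galaxy S24 Ultra", "source": "Walmart", "extracted_price": 897},
        {"title": "Samsung Galaxy Z Fold6", "source": "Amazon.com - Seller", "extracted_price": 1263.84},
    ]
    best = cheapest_offer(results)
    print(best["source"], best["extracted_price"])
```

In practice you would first filter the list down to the product you care about, since comparing prices across different products is not meaningful.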

Product Page

Similarly, we can scrape product data, including the name, features, specifications, other sellers, and more, from the Google Product page.

Create another file in your project folder and paste the following code.

import requests

payload = {'api_key': 'APIKEY', 'product_id': '6074184023219946909', 'country': 'us'}

resp = requests.get('https://api.scrapingdog.com/google_product', params=payload, timeout=60)
resp.raise_for_status()  # stop early on an HTTP error status

print(resp.text)

For more information on how to use Scrapingdog’s Google Product API, refer to this documentation.

Running this program gives you the product details in a matter of 1–2 seconds. That’s how fast the API is.

{

"product_results": {

"title": "Samsung Galaxy A15 5G 64gb in Blue Black (UScellular)(SM-A156UZKBUSC)",

"product_id": "6074184023219946909",

"prices": [

"$169.99",

"$169.99"

],

"conditions": [

"New",

"New"

],

"typical_prices": {

"shown_price": "$169.99 at Samsung",

"shown_price_link": "https://www.samsung.com/us/smartphones/galaxy-a15/buy/%3FmodelCode%3DSM-A156UZKBUSC&opi=95576897&sa=U&ved=0ahUKEwjcyvnbifOLAxUDEFkFHYfmNcAQx50ICCE&usg=AOvVaw3O2tc_PBsCOAc4b0cucf_9"

},

"reviews": "21,165",

"rating": "4.4",

"features": [

"Essentially simple",

"Pick your lens, find your angle",

"Ease your day with power and 5G speed",

"Stay in the zone with a 2-day battery",

"Trust in Samsung Knox Vault",

"Essentially simple",

"Pick your lens, find your angle",

"Ease your day with power and 5G speed",

"Stay in the zone with a 2-day battery",

"Trust in Samsung Knox Vault",

"Unlock with your fingerprint"

],

.......
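Two small clean-up steps are often handy with the product response: pairing each price with its condition, and dropping repeated features, since the excerpt above lists several features twice. The sketch below assumes prices and conditions are parallel lists, which matches the excerpt but is not confirmed by it. Both function names are made up for this example.

```python
def pair_offers(product_results):
    """Pair each price with the condition at the same index."""
    prices = product_results.get("prices", [])
    conditions = product_results.get("conditions", [])
    # zip stops at the shorter list, so unmatched entries are dropped
    return list(zip(prices, conditions))


def unique_features(product_results):
    """Return features in their original order without repeats."""
    # dict.fromkeys keeps the first occurrence of each key, in order
    return list(dict.fromkeys(product_results.get("features", [])))


if __name__ == "__main__":
    product = {
        "prices": ["$169.99", "$169.99"],
        "conditions": ["New", "New"],
        "features": ["Essentially simple", "Trust in Samsung Knox Vault",
                     "Essentially simple", "Unlock with your fingerprint"],
    }
    print(pair_offers(product))
    print(unique_features(product))
```

With a real response, pass data["product_results"] from resp.json() to either function.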

So, this is how our API lets you access the shopping and product results without the hassle of maintaining a scraper.

Conclusion

Google Shopping is a convenient single place to retrieve product data from multiple e-commerce sources. It helps you monitor consumers and competitors, make data-driven decisions, and support your business’s growth.

I hope this tutorial gave you a clear understanding of why it is beneficial for businesses to scrape shopping results.

If you are looking to scale this process, you can always give us a try and sign up for free. The first 1000 credits are on us to test it!

Feel free to message us anything you need clarification on. Follow us on Twitter. Thanks for reading!

Additional Resources

- Web Scraping Amazon Data using Python

- Web Scraping eBay using Python

- Web Scraping Walmart using Python

- Scrape Myntra using Python

- Web Scraping Flipkart using Python

- How to scrape TikTok with Python

- How to Scrape X (Tweets & Profiles) Using Python

- Scrape Google Maps Data using Python

- Scrape Linkedin Profiles using Python

- Web Scraping Expedia using Python