Scraping Idealista can give you the large datasets you need to drive business growth. Real estate is a crucial sector in almost every country, and decisions in it are increasingly backed by solid data analysis.

But how do we collect that much data quickly? This is where web scraping helps.

In this tutorial, we are going to scrape Idealista, one of the biggest real estate portals in Spain, Italy, and Portugal. We are going to use Python and build our own Idealista scraper.

Collecting all the Ingredients for Scraping Idealista

I am assuming that you have already installed Python on your machine. I will be using Python 3.x. With that installed, we will need two libraries for data extraction, plus a browser driver.

- Selenium — It will be used for rendering the Idealista website.

- BeautifulSoup — It will be used to create an HTML tree for data parsing.

- ChromeDriver — This is the webdriver that Selenium uses to control Chrome. Download the build that matches your installed Chrome version.

First, we need to create the folder where we will keep our script.

mkdir coding

Inside this folder, you can create a file by any name you like. I am going to use idealista.py in this case. Finally, we are going to install the above-mentioned libraries using pip.

pip install selenium
pip install beautifulsoup4

Selenium is a browser automation tool; it will be used to load our target URL in a real Chrome browser. BeautifulSoup, aka BS4, will be used for clean data extraction from the raw HTML returned by Selenium.

We could also use the requests library here, but Idealista loves sending captchas, and a plain HTTP GET request is likely to get blocked, which would cause serious breakage in your data pipeline. To give Idealista a real browser vibe, we are going ahead with Selenium.

What are we going to scrape from Idealista?

I will divide this part into two sections. In the first section, we are going to scrape the first page from our target site, and then in the second section, we will create a script that can support pagination. Let’s start with the first section.

What data are we going to extract?

It is better to decide this in advance than to figure it out midway through writing the scraper.

I have decided to scrape the following data points:

- Title of the property

- Price of the property

- Area Size

- Property Description

- Dedicated web link of the property.

We will first scrape the complete page using selenium and store the page source to some variable. Then we will create an HTML tree using BS4. Finally, we will use the find() and find_all() methods to extract relevant data.
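To make the difference between find() and find_all() concrete, here is a minimal sketch that runs on a hand-written HTML snippet instead of a live Idealista page. The markup and the listing titles are assumptions modeled on the class names used later in this tutorial; the real page is much larger.

```python
from bs4 import BeautifulSoup

# Hypothetical markup that mimics two listing cards; not copied from Idealista.
sample_html = """
<div class="item-info-container">
  <a class="item-link" href="/inmueble/1/">Flat in Calle Mayor</a>
  <span class="item-price">120.000€</span>
</div>
<div class="item-info-container">
  <a class="item-link" href="/inmueble/2/">House in Barreda</a>
  <span class="item-price">245.000€</span>
</div>
"""

soup = BeautifulSoup(sample_html, "html.parser")

# find() returns only the first matching tag (or None if nothing matches).
first_card = soup.find("div", {"class": "item-info-container"})

# find_all() returns a list-like ResultSet containing every matching tag.
all_cards = soup.find_all("div", {"class": "item-info-container"})

first_title = first_card.find("a", {"class": "item-link"}).text.strip()
titles = [card.find("a", {"class": "item-link"}).text.strip() for card in all_cards]

print(first_title)
print(titles)
```

In short, find_all() is the natural choice for collecting every listing card, and find() for picking a single field out of one card.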

Let’s scrape the page source first

We will be using Selenium for this part. Its page_source driver property gives us the HTML source of the page currently loaded in the browser.

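from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
import time

# Raw string so the backslashes in the Windows path are kept literally
PATH = r'C:\Program Files (x86)\chromedriver.exe'

l = list()  # will hold one dict per property
o = {}      # data for the property currently being parsed

target_url = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/"

# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service(PATH))
driver.get(target_url)

# Give the page a few seconds to finish rendering
time.sleep(5)

resp = driver.page_source
driver.close()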

I first imported all the required libraries and then defined the location of ChromeDriver. Remember to keep the ChromeDriver version the same as your Chrome browser’s; otherwise it will not run.

After this, I created a Chrome instance using the path where the driver was downloaded. This instance lets us drive the browser with as many commands as we like until we close it with the .close() method.

Then I used the .get() method to load the website. It waits for the page to finish loading, and the time.sleep() call that follows gives any JavaScript-rendered content a few extra seconds to appear.

Finally, we read the rendered HTML from the page_source property and closed the browser window using the .close() method. Now that we have the complete page data, we can use BS4 to create a soup from which we can extract the desired data using the .find() and .find_all() methods.

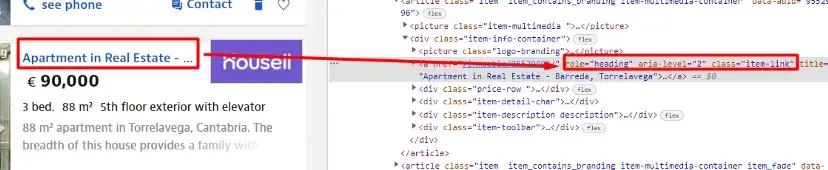

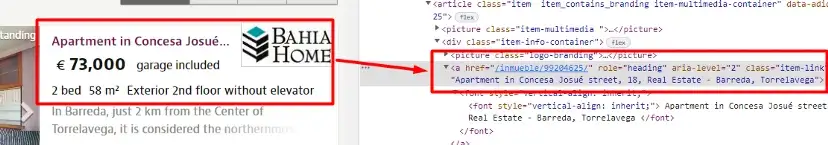

Scraping Title from Idealista Property Listing

Let’s first inspect and find the DOM element.

The title of the property is stored in an a (anchor) tag with the class item-link. This tag is nested inside a div tag with the class item-info-container.

soup = BeautifulSoup(resp, 'html.parser')

allProperties = soup.find_all("div",{"class":"item-info-container"})

After closing the web driver session, we created a parse tree from which we will extract the text. For the sake of simplicity, we have stored all the properties as a list in the allProperties variable. This makes extracting titles and other data points much easier.

Since there are multiple properties inside our allProperties variable we have to run a for loop in order to reach each and every property and extract all the necessary information from it.

for i in range(0,len(allProperties)):
    o["title"]=allProperties[i].find("a",{"class":"item-link"}).text.strip("\n")

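On each pass through the for loop, o holds the title of the property currently being processed. In the complete code, o is appended to the list l and reset at the end of every pass, so l ends up holding all the properties. Let’s scrape the remaining data points.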
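Because o is a single dictionary, each pass through the loop overwrites the previous title unless the dictionary is stored and a new one is started. The following sketch illustrates this with made-up titles (they are placeholders, not real listings):

```python
titles = ["Flat in Calle Mayor", "House in Barreda"]  # made-up sample titles

# Reusing one dict: the "title" key is overwritten on every pass.
o = {}
for title in titles:
    o["title"] = title
reused = dict(o)  # only the last title survives

# One fresh dict per property, collected in a list.
l = []
for title in titles:
    o = {}
    o["title"] = title
    l.append(o)

print(reused)
print(l)
```

This is the reason the complete script appends o to l and then resets it with o={} at the end of each pass.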

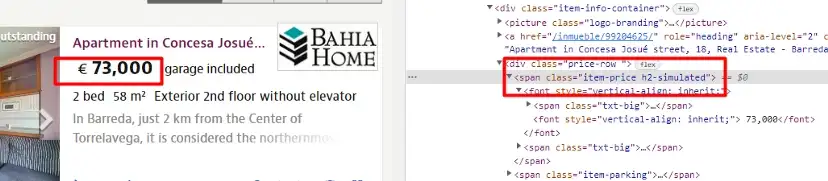

Scraping Property Price

Let’s inspect and find the location of this element inside the DOM.

The price is stored inside a span tag with the class item-price. We will use the same technique that we used for scraping the title. Inside the for loop, we will use the code below.

o["price"]=allProperties[i].find("span",{"class":"item-price"}).text.strip("\n")

This will extract all the prices one by one.
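The scraped price is still a string. If you want to sort or filter by price, it helps to convert it to a number. Here is a minimal sketch; the helper name parse_price is hypothetical, and it assumes the price uses "." as a thousands separator with no decimal part (for example "245.000€"), which you should verify against the pages you scrape.

```python
import re

def parse_price(price_text):
    """Return the price as an int, or None if the text contains no digits.

    Assumption: '.' is a thousands separator and there is no decimal part.
    """
    digits = re.sub(r"[^\d]", "", price_text)
    return int(digits) if digits else None

print(parse_price("245.000€"))         # 245000
print(parse_price("\n1.250.000 €\n"))  # 1250000
print(parse_price("A consultar"))      # None
```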

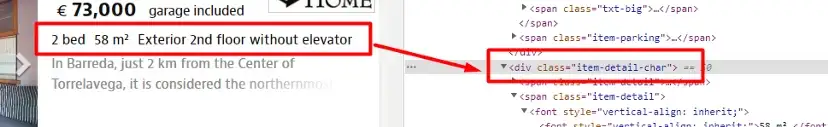

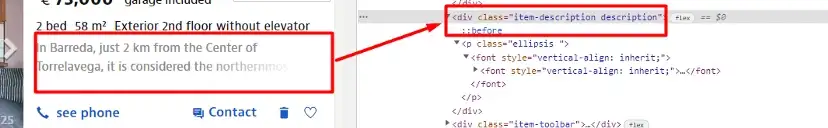

Scraping Area Size and Description

Now, you must have an idea of how we are going to extract this data. Let’s find the location of each of these data elements.

The area size is stored inside the div tag with the class “item-detail-char”.

o["area-size"]=allProperties[i].find("div",{"class":"item-detail-char"}).text.strip("\n")

The property description can be found inside the div tag with the class “item-description”.

o["description"]=allProperties[i].find("div",{"class":"item-description"}).text.strip("\n")
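One thing to watch for: .find() returns None when a card has no matching element, and calling .text on None raises an AttributeError. Whether Idealista ever omits these elements is an assumption here, but a small guard costs little. The helper name text_or_none and the sample markup below are hypothetical.

```python
from bs4 import BeautifulSoup

def text_or_none(parent, tag_name, class_name):
    """Return the stripped text of the first matching child, or None if absent."""
    tag = parent.find(tag_name, {"class": class_name})
    return tag.get_text(strip=True) if tag is not None else None

# Hypothetical card that has a title but no description block.
card_html = """
<div class="item-info-container">
  <a class="item-link" href="/inmueble/1/">Flat in Calle Mayor</a>
  <span class="item-price">120.000€</span>
</div>
"""
card = BeautifulSoup(card_html, "html.parser").find("div", {"class": "item-info-container"})

title = text_or_none(card, "a", "item-link")
description = text_or_none(card, "div", "item-description")
print(title)
print(description)
```

With a guard like this, a single incomplete card produces a None field instead of stopping the whole run.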

Dedicated Property Url

With the same technique, you can scrape dedicated links as well. Let’s find its location.

The link is stored in the href attribute of the same a tag. This is not a complete URL, so we will add a prefix. Here we will use the .get() method of BS4 to get the value of an attribute.

o["property-link"]="https://www.idealista.com"+allProperties[i].find("a",{"class":"item-link"}).get('href')

Here we have added https://www.idealista.com as a prefix because the href attribute holds only a relative path, not the complete URL.
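String concatenation works as long as every href is a relative path. As an alternative sketch, urljoin from the standard library handles both relative and already-absolute links. The helper name absolute_link and the sample paths are made up for illustration.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.idealista.com"

def absolute_link(href):
    """Resolve a scraped href against the site root."""
    return urljoin(BASE_URL, href)

# Relative path: the base is prepended.
print(absolute_link("/inmueble/12345678/"))

# Already absolute: returned unchanged instead of being prefixed twice.
print(absolute_link("https://www.idealista.com/inmueble/12345678/"))
```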

We have managed to scrape all the data we were interested in.

Complete Code

You can make a few more changes to extract a little more information, like the number of properties, the map, etc. For now, the complete code looks like this.

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
import time

# Raw string so the backslashes in the Windows path are kept literally
PATH = r'C:\Program Files (x86)\chromedriver.exe'

l = list()  # one dict per property
o = {}      # the property currently being parsed

target_url = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/"

# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service(PATH))
driver.get(target_url)
time.sleep(7)  # give the page time to finish rendering
resp = driver.page_source
driver.close()

soup = BeautifulSoup(resp, 'html.parser')
allProperties = soup.find_all("div",{"class":"item-info-container"})

for i in range(0,len(allProperties)):
    o["title"]=allProperties[i].find("a",{"class":"item-link"}).text.strip("\n")
    o["price"]=allProperties[i].find("span",{"class":"item-price"}).text.strip("\n")
    o["area-size"]=allProperties[i].find("div",{"class":"item-detail-char"}).text.strip("\n")
    o["description"]=allProperties[i].find("div",{"class":"item-description"}).text.strip("\n")
    o["property-link"]="https://www.idealista.com"+allProperties[i].find("a",{"class":"item-link"}).get('href')
    l.append(o)
    o={}  # start a fresh dict for the next property

print(l)

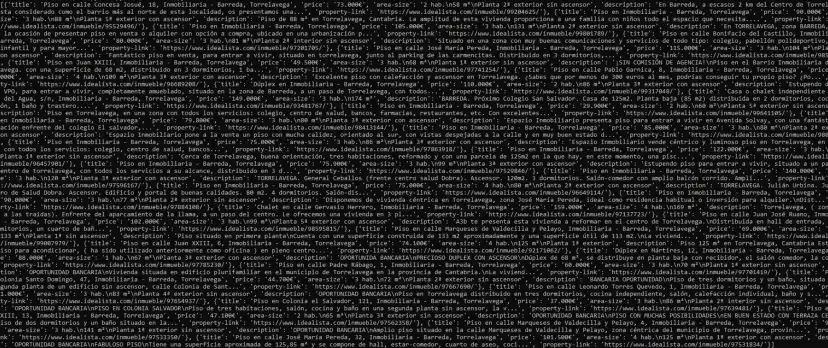

Once you print the list, the response will look like this.
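Printing is fine for a quick check, but you will usually want to keep the results. Below is a minimal sketch that writes records shaped like the entries of l to CSV using only the standard library. The helper name to_csv and the sample record are made up; the field names match the dictionary keys used in the script.

```python
import csv
import io

FIELDS = ["title", "price", "area-size", "description", "property-link"]

def to_csv(rows, fileobj):
    """Write a list of property dicts to an open text file object as CSV."""
    writer = csv.DictWriter(fileobj, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)

# Made-up record in the same shape as the entries in l.
sample = [{
    "title": "Flat in Calle Mayor",
    "price": "120.000€",
    "area-size": "80 m²",
    "description": "Bright flat, close to the centre.",
    "property-link": "https://www.idealista.com/inmueble/1/",
}]

buffer = io.StringIO()  # in-memory stand-in for a real file
to_csv(sample, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write to disk instead, open a file with open("idealista.csv", "w", newline="", encoding="utf-8") and pass it to to_csv along with l.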

Let’s move on to the second section of this tutorial where we will create pagination support as well. With this, we will be able to crawl over all the pages available for a particular location.

Scraping all the Pages

You may have noticed that each page lists 30 properties. With this information, you can work out the total number of pages for each location. Of course, you will first have to scrape the total number of properties that location has.

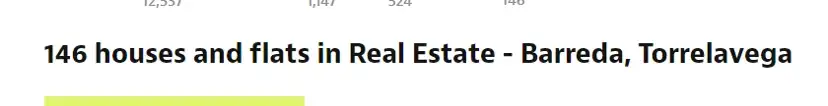

The current target page has 146 properties. We have to scrape this number and then divide it by 30 to work out the total number of pages. So, let’s scrape this number first.

As you can see, this number is inside a string. We have to scrape this string and then use Python’s .split() method to break it into a list. The first element of the list will be our desired value because 146 is the first word in the sentence.

This string is stored inside a div tag with the class “listing-title”. Let’s extract it.

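import math

totalProperties = int(soup.find("div",{"class":"listing-title"}).text.split(" ")[0])
totalPages = math.ceil(totalProperties/30)

Using the int() function, I converted the string to an integer. Then we divided it by 30 and rounded up with math.ceil() to get the total number of pages; round() would drop the final page whenever it is less than half full (for example, 100 properties need 4 pages, but round(100/30) gives 3). Now, let’s check how the URL pattern changes when the page number changes.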
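Rounding up, and not to the nearest integer, is what guarantees that the last partial page is counted. The sketch below wraps the parsing and the division in one function. The function name total_pages and the sample title strings are hypothetical, and stripping "." from the count assumes that larger counts use it as a thousands separator.

```python
import math

PER_PAGE = 30  # listings per page, as observed on the site

def total_pages(listing_title, per_page=PER_PAGE):
    """Parse the leading count from the listing title and return the page count."""
    first_token = listing_title.strip().split(" ")[0]
    count = int(first_token.replace(".", ""))  # assumption: '.' groups thousands
    return math.ceil(count / per_page)

print(total_pages("146 casas y pisos"))      # 5
print(round(100 / 30), math.ceil(100 / 30))  # 3 4
```

The second line of output shows the difference: with 100 properties, rounding to the nearest integer gives 3 pages and misses the final 10 listings, while rounding up gives 4.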

When you click on page number two, the URL will look like this — https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/pagina-2.htm

So, it adds the string “pagina-2.htm” when you click on page two. Similarly, when you click on page three you get “pagina-3.htm”. We just need to append this string to the target URL according to the page number we are on. We will use a for loop for this.

from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
import math
import time

# Raw string so the backslashes in the Windows path are kept literally
PATH = r'C:\Program Files (x86)\chromedriver.exe'

l = list()  # one dict per property, across all pages
o = {}      # the property currently being parsed

target_url = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/"

# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service(PATH))
driver.get(target_url)
time.sleep(7)
resp = driver.page_source
driver.close()

soup = BeautifulSoup(resp, 'html.parser')

# 30 properties per page; round up so the last partial page is included
totalProperties = int(soup.find("div",{"class":"listing-title"}).text.split(" ")[0])
totalPages = math.ceil(totalProperties/30)

# Page 1
allProperties = soup.find_all("div",{"class":"item-info-container"})
for i in range(0,len(allProperties)):
    o["title"]=allProperties[i].find("a",{"class":"item-link"}).text.strip("\n")
    o["price"]=allProperties[i].find("span",{"class":"item-price"}).text.strip("\n")
    o["area-size"]=allProperties[i].find("div",{"class":"item-detail-char"}).text.strip("\n")
    o["description"]=allProperties[i].find("div",{"class":"item-description"}).text.strip("\n")
    o["property-link"]="https://www.idealista.com"+allProperties[i].find("a",{"class":"item-link"}).get('href')
    l.append(o)
    o={}

# Pages 2 to totalPages
for x in range(2,totalPages+1):
    target_url = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/pagina-{}.htm".format(x)
    driver = webdriver.Chrome(service=Service(PATH))
    driver.get(target_url)
    time.sleep(7)
    resp = driver.page_source
    driver.close()

    soup = BeautifulSoup(resp, 'html.parser')
    allProperties = soup.find_all("div",{"class":"item-info-container"})
    for i in range(0,len(allProperties)):
        o["title"]=allProperties[i].find("a",{"class":"item-link"}).text.strip("\n")
        o["price"]=allProperties[i].find("span",{"class":"item-price"}).text.strip("\n")
        o["area-size"]=allProperties[i].find("div",{"class":"item-detail-char"}).text.strip("\n")
        o["description"]=allProperties[i].find("div",{"class":"item-description"}).text.strip("\n")
        o["property-link"]="https://www.idealista.com"+allProperties[i].find("a",{"class":"item-link"}).get('href')
        l.append(o)
        o={}

print(l)

After extracting data from the first page, we are running a for loop to get the new target URL and extract the data from it in the same fashion. Once you print the list l you will get complete data.
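The URL pattern can also be isolated in a small helper, which makes it easy to check the generated addresses before launching a browser. This is only a sketch: the function name page_urls is hypothetical, and it assumes the “pagina-N.htm” suffix is appended directly to a base URL that ends with a slash, as in the example above.

```python
BASE = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/"

def page_urls(base_url, total_pages):
    """Return the URL of every results page, with page 1 first."""
    urls = [base_url]  # page 1 has no suffix
    for page in range(2, total_pages + 1):
        urls.append("{}pagina-{}.htm".format(base_url, page))
    return urls

for url in page_urls(BASE, 3):
    print(url)
```

Looping over this list once would also let the first page and the later pages share the same extraction code, so the parsing loop does not have to be written twice.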

Finally, we have managed to scrape all the pages. This data can be used for making important decisions like buying or renting a property.

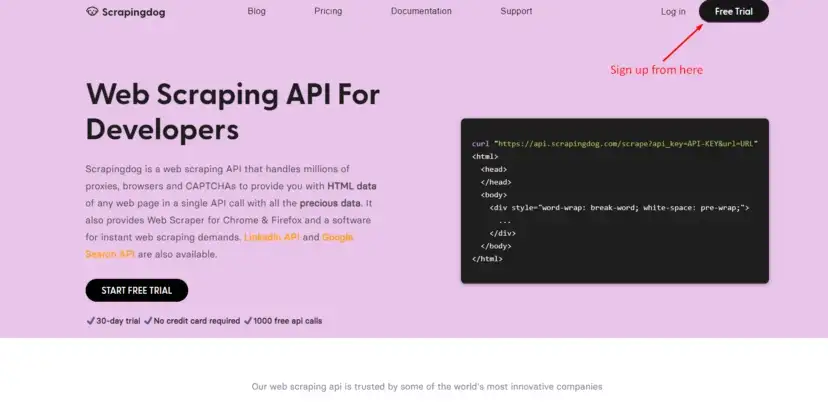

Using Scrapingdog for web scraping Idealista

So, we have seen how you can scrape Idealista using Python. But to be very honest, idealista.com is a well-protected site, and you cannot extract data at scale using only Python. In fact, after 10 or 20 requests Idealista will detect the scraping and block your scraper.

After that, you will continue to get 403 errors on every web request you make. How to avoid this? For this, I would suggest you go with a web scraping API. Scrapingdog uses its large pool of proxies to overcome the challenges you might face while extracting data at scale.

Scrapingdog provides 1,000 free API calls to all new users, and in that pack you can use all the premium features. First, you need to sign up to get your own private API key.

You can find your API key at the top of the dashboard. You just have to make a few changes to the above code, and Scrapingdog will handle the rest. You don’t need Selenium or any other web driver to scrape it. You just have to use the requests library to make a GET request to the Scrapingdog API.

from bs4 import BeautifulSoup
import math
import requests

l = list()  # one dict per property, across all pages
o = {}      # the property currently being parsed

target_url = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/"

resp = requests.get("https://api.scrapingdog.com/scrape?api_key=Your-API-KEY&url={}&dynamic=false".format(target_url))
soup = BeautifulSoup(resp.text, 'html.parser')

# 30 properties per page; round up so the last partial page is included
totalProperties = int(soup.find("div",{"class":"listing-title"}).text.split(" ")[0])
totalPages = math.ceil(totalProperties/30)

# Page 1
allProperties = soup.find_all("div",{"class":"item-info-container"})
for i in range(0,len(allProperties)):
    o["title"]=allProperties[i].find("a",{"class":"item-link"}).text.strip("\n")
    o["price"]=allProperties[i].find("span",{"class":"item-price"}).text.strip("\n")
    o["area-size"]=allProperties[i].find("div",{"class":"item-detail-char"}).text.strip("\n")
    o["description"]=allProperties[i].find("div",{"class":"item-description"}).text.strip("\n")
    o["property-link"]="https://www.idealista.com"+allProperties[i].find("a",{"class":"item-link"}).get('href')
    l.append(o)
    o={}

print(totalPages)

# Pages 2 to totalPages
for x in range(2,totalPages+1):
    target_url = "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/pagina-{}.htm".format(x)
    resp = requests.get("https://api.scrapingdog.com/scrape?api_key=Your-API-KEY&url={}&dynamic=false".format(target_url))
    soup = BeautifulSoup(resp.text, 'html.parser')
    allProperties = soup.find_all("div",{"class":"item-info-container"})
    for i in range(0,len(allProperties)):
        o["title"]=allProperties[i].find("a",{"class":"item-link"}).text.strip("\n")
        o["price"]=allProperties[i].find("span",{"class":"item-price"}).text.strip("\n")
        o["area-size"]=allProperties[i].find("div",{"class":"item-detail-char"}).text.strip("\n")
        o["description"]=allProperties[i].find("div",{"class":"item-description"}).text.strip("\n")
        o["property-link"]="https://www.idealista.com"+allProperties[i].find("a",{"class":"item-link"}).get('href')
        l.append(o)
        o={}

print(l)

We have removed Selenium because we no longer need it; apart from that, the rest of the code remains the same. Do not forget to replace the “Your-API-KEY” placeholder with your own API key, which you can find on your dashboard. With the API handling proxies for you, the data stream is far less likely to be interrupted by blocks.

Just like this, Scrapingdog can be used for scraping other websites without getting blocked.
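One detail worth handling when you move to other target pages: if the target URL carries its own query string, pasting it raw into the API URL would mix its parameters with the API's. Here is a minimal sketch that percent-encodes the values with the standard library. The function name build_api_url is hypothetical; the endpoint and the parameter names (api_key, url, dynamic) are the ones used in the request above, and it assumes the API decodes a percent-encoded url value in the usual way.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://api.scrapingdog.com/scrape"

def build_api_url(api_key, target_url, dynamic="false"):
    """Build the API request URL with every parameter percent-encoded."""
    query = urlencode({
        "api_key": api_key,
        "url": target_url,
        "dynamic": dynamic,
    })
    return "{}?{}".format(API_ENDPOINT, query)

api_url = build_api_url(
    "Your-API-KEY",
    "https://www.idealista.com/venta-viviendas/torrelavega/inmobiliaria-barreda/",
)
print(api_url)
```

The resulting string can be passed straight to requests.get() in place of the formatted URL.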

Conclusion

In this blog, we saw how you can use Python to scrape Idealista, a data-rich real estate website that needs no introduction. Further, we saw how Idealista can block your scrapers and how you can overcome this with Scrapingdog’s web scraping API.

Note: We have recently updated our API to scrape Idealista more efficiently, resulting in reduced response times. The faster data extraction not only allows for the collection of more data but also decreases the load on server threads.

I hope you like this little tutorial and if you do then please do not forget to share it with your friends and on social media.

Additional Resources

Here are a few additional resources you may find useful. We have also scraped other real estate websites, listed below: