You don’t need to know how to code to scrape LinkedIn jobs at scale!

If you’ve found your way to this article, chances are you’re not a developer but are eager to learn how to scrape a website and store its JSON data in Airtable.

What is Airtable? It’s a digital platform that works like a supercharged spreadsheet, making it easy to organize and store data.

Let’s quickly run through the steps you need to follow. We will use Scrapingdog’s LinkedIn Jobs API and save the data in Airtable without writing any code along the way!

Requirements

Process of saving the data

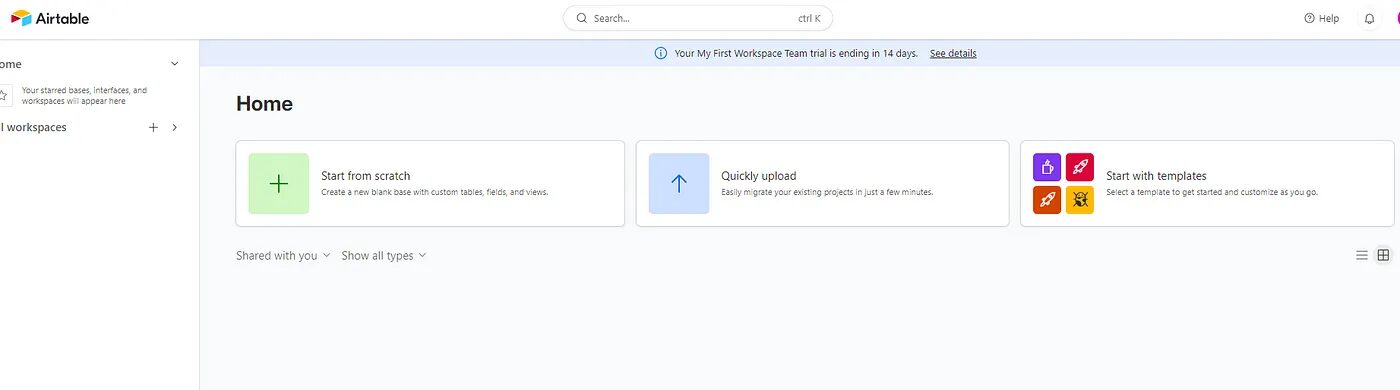

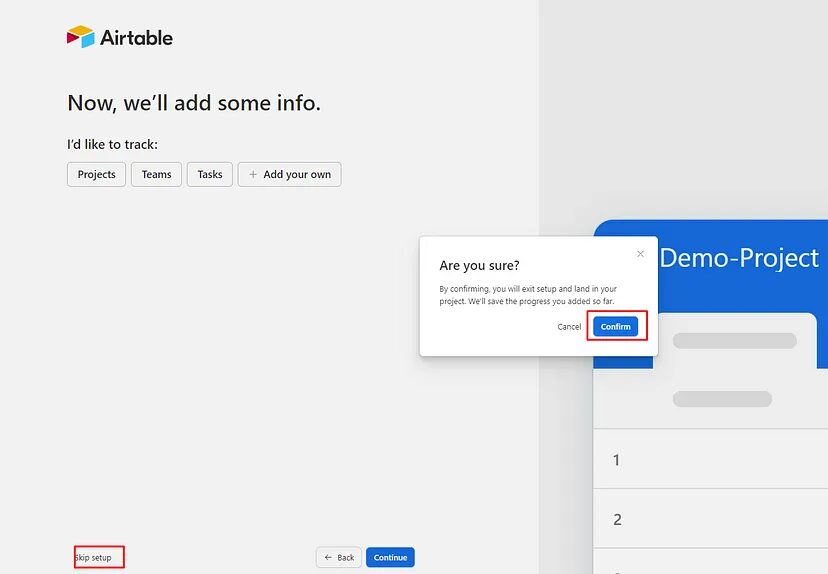

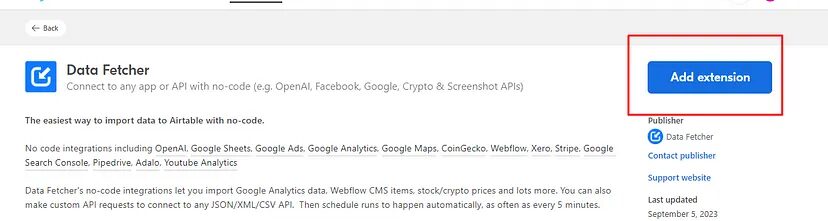

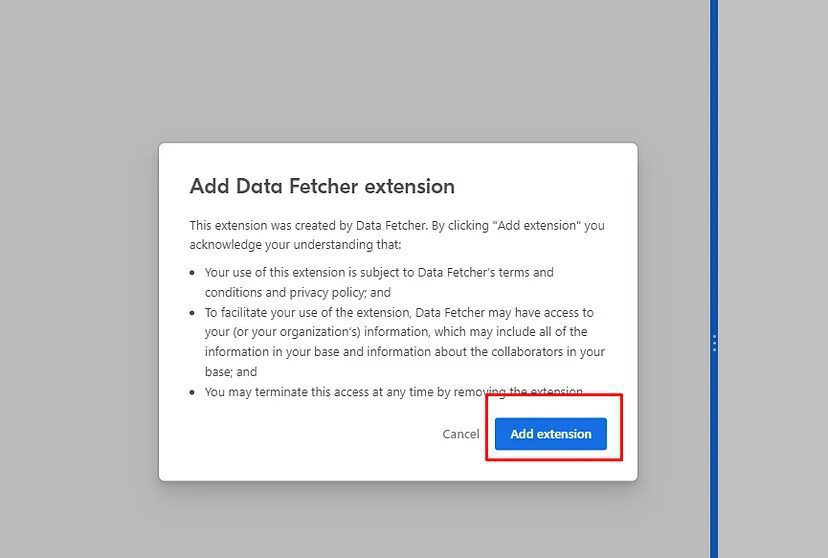

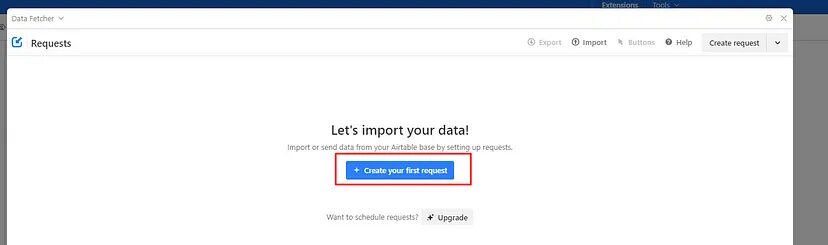

Next, install the Data Fetcher extension in Airtable. It lets you import data from Scrapingdog’s API directly into Airtable. Isn’t that fantastic?

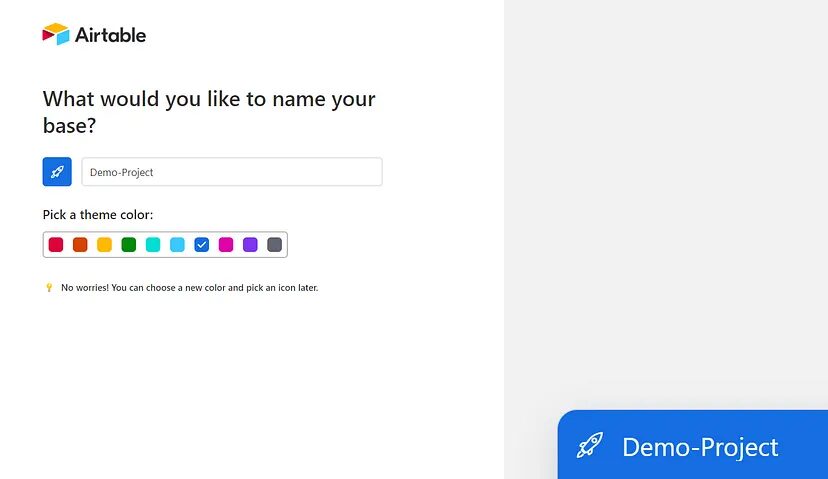

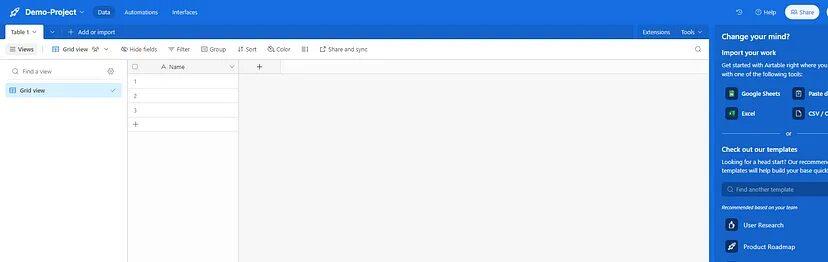

After you click Add extension, you will be redirected to your project page on Airtable. Click Add extension again there.

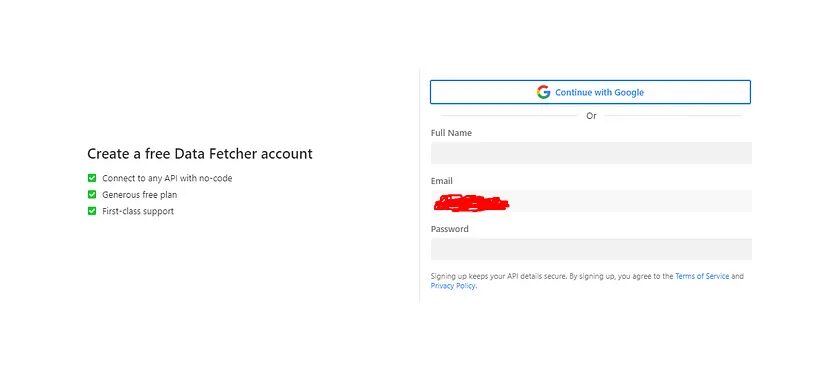

Now, you will be asked to create an account on Data Fetcher.

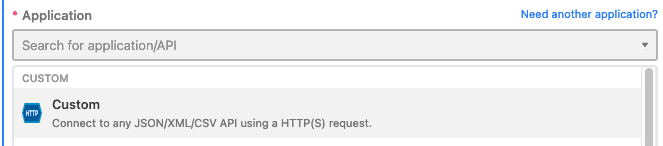

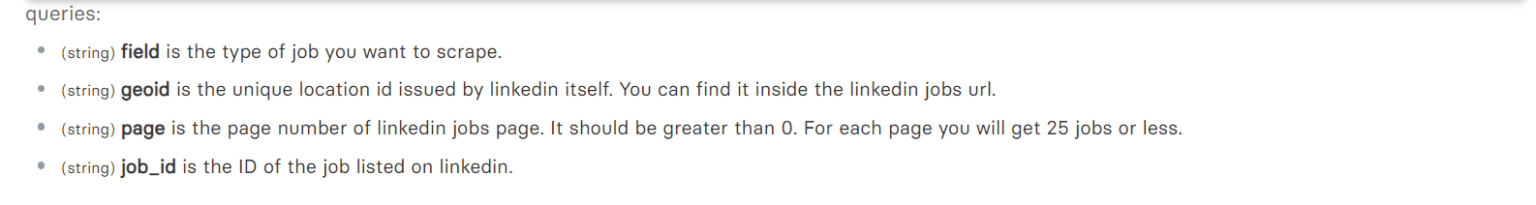

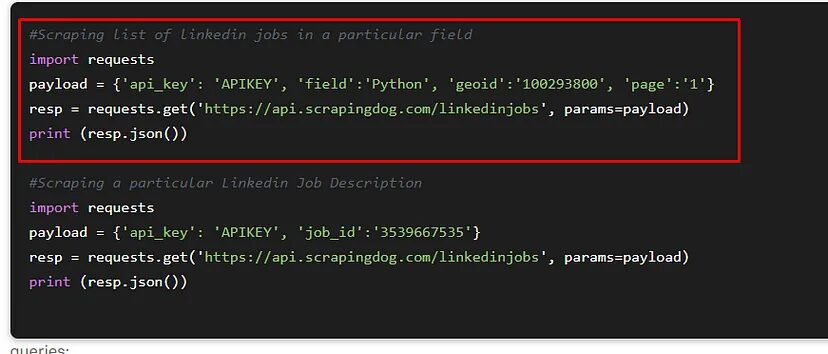

Now we can start scraping. I recommend going through the documentation of the LinkedIn Jobs API first. It will give you an idea of how the API works and what inputs it needs to return a response. Below is an image from the documentation showing which inputs you need to provide and what each one means.

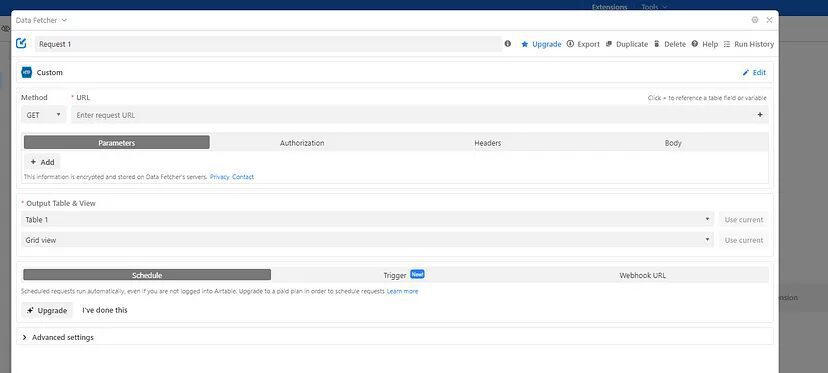

http://api.scrapingdog.com/linkedinjobs?page=1&geoid=101473624&field=python&api_key=Your-API-Key

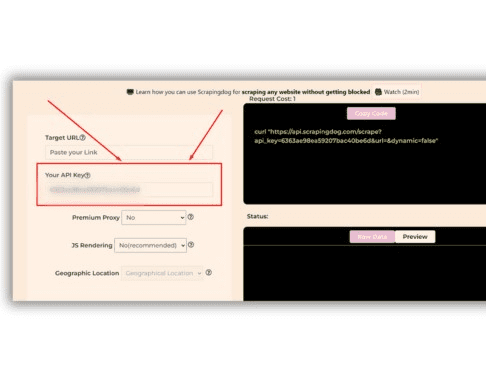

Before using this URL, look at its last part, “Your-API-Key”. Replace that placeholder with your own API key.

You can find your API key on your Scrapingdog dashboard (see the image below).

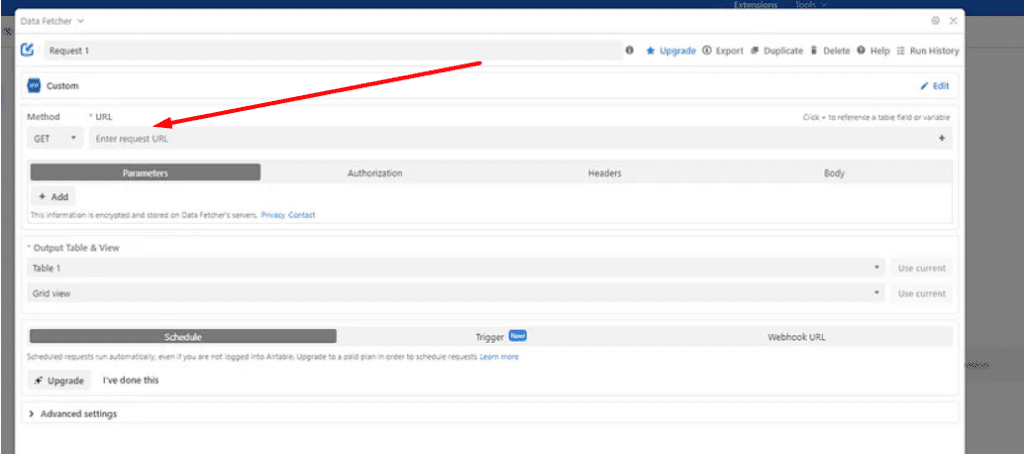

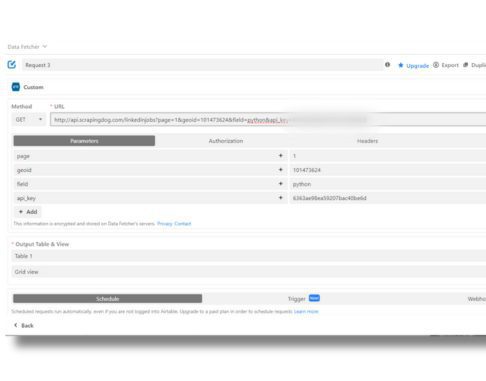
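For readers curious about what the API link actually contains, here is a minimal Python sketch that assembles it from its parts. The endpoint and the four parameter names (`page`, `geoid`, `field`, `api_key`) come from the example URL above; the helper names `build_jobs_url` and `build_page_urls` are hypothetical, and you should check Scrapingdog’s documentation for any other parameters the API accepts. You don’t need this for the no-code workflow; it only shows how the link is put together.

```python
from urllib.parse import urlencode

# Endpoint taken from the example URL in this article.
BASE_URL = "http://api.scrapingdog.com/linkedinjobs"


def build_jobs_url(api_key: str, field: str, geoid: str, page: int = 1) -> str:
    """Build a LinkedIn Jobs API URL from the four query parameters.

    Parameter names mirror the example URL; urlencode keeps the dict's
    insertion order and escapes values (a space becomes "+").
    """
    params = {"page": page, "geoid": geoid, "field": field, "api_key": api_key}
    return f"{BASE_URL}?{urlencode(params)}"


def build_page_urls(api_key: str, field: str, geoid: str, pages: int) -> list[str]:
    """Hypothetical helper: one URL per results page, from page 1 to `pages`."""
    return [build_jobs_url(api_key, field, geoid, page) for page in range(1, pages + 1)]


if __name__ == "__main__":
    # Prints the same URL used in this article.
    print(build_jobs_url("Your-API-Key", "python", "101473624"))
```

Changing the `page` value is presumably how you would request further pages of results, which is what `build_page_urls` does; each of those URLs could be pasted into Data Fetcher the same way.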

Now paste the API URL into the Data Fetcher box.

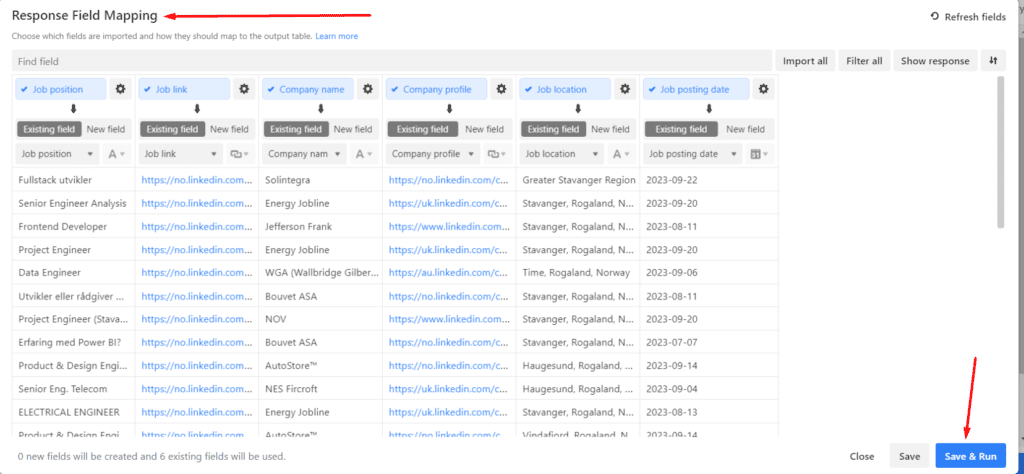

Once done, click Save & Run at the bottom right. A tab named “Response field Mapping” will then appear; click Save & Run again there (see the screenshot below).

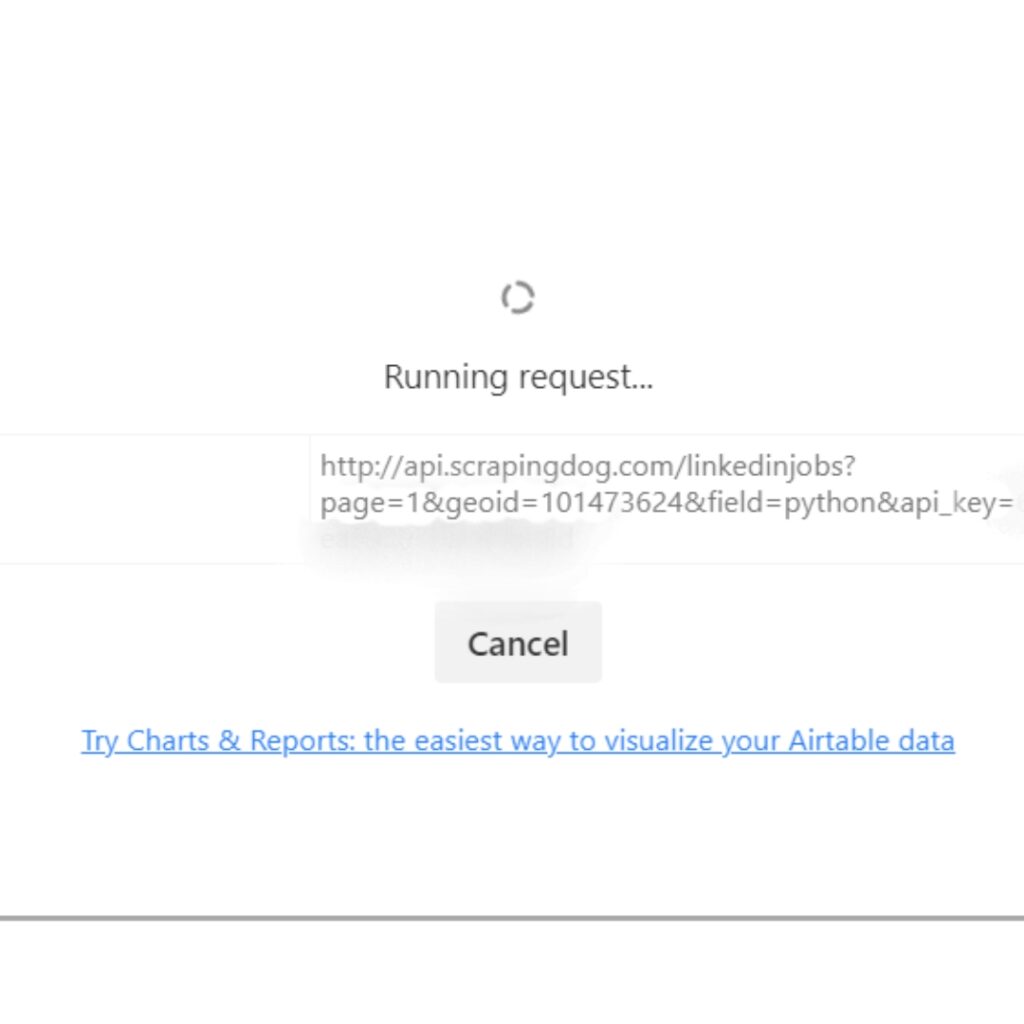

Data Fetcher will now request data from Scrapingdog’s API.

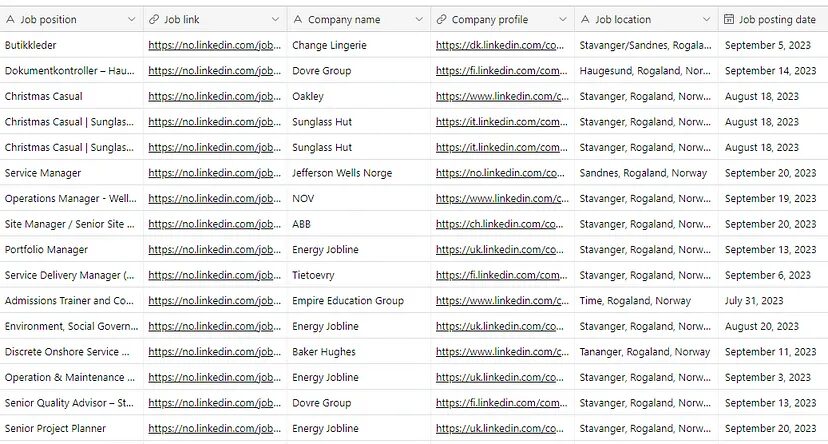
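When you click Save & Run, Data Fetcher sends a request to that URL and reads the JSON it gets back. A rough Python equivalent using only the standard library is sketched below. It assumes the API returns a JSON array of job objects; `fetch_jobs` and `parse_jobs` are hypothetical names, and this is not how Data Fetcher itself is implemented.

```python
import json
import urllib.request


def parse_jobs(body: str) -> list:
    """Decode a response body, assuming it is a JSON array of job objects."""
    data = json.loads(body)
    if not isinstance(data, list):
        # Anything other than a list (for example, an error message) is treated as a failure.
        raise ValueError(f"expected a JSON array, got: {data!r}")
    return data


def fetch_jobs(url: str, timeout: float = 60.0) -> list:
    """Send a GET request to the API URL and return the parsed job list."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_jobs(response.read().decode("utf-8"))
```

To try it, call `fetch_jobs` with the API URL from this article after replacing `Your-API-Key` with your real key, then check `len()` of the result to see how many jobs came back.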

The JSON result from Scrapingdog’s API is now saved in the table, and you can find the same data in your Airtable project.

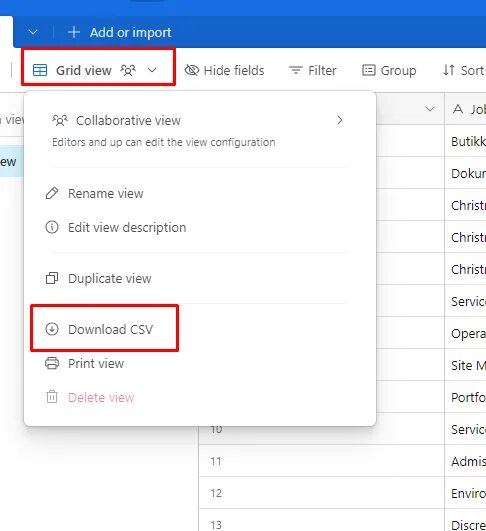

To download this data as a CSV file, click “Grid view” at the top left and then click “download CSV”.

This downloads all of your results in CSV format.
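The CSV export turns each job record into a row and each field into a column. If you ever want to produce a similar CSV directly from the API’s JSON, the conversion can be sketched in Python as follows. `jobs_to_csv` is a hypothetical helper, not part of Scrapingdog, Data Fetcher, or Airtable; it assumes the JSON is a list of objects and stores any nested list or object as a JSON string.

```python
import csv
import io
import json


def jobs_to_csv(jobs: list[dict]) -> str:
    """Flatten a list of JSON objects into CSV text, one row per job.

    Columns are the union of all keys, in first-seen order. Missing fields
    are left empty; nested lists/objects are written as JSON strings.
    """
    columns: list[str] = []
    for job in jobs:
        for key in job:
            if key not in columns:
                columns.append(key)

    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="", lineterminator="\n")
    writer.writeheader()
    for job in jobs:
        row = {
            key: json.dumps(value) if isinstance(value, (dict, list)) else value
            for key, value in job.items()
        }
        writer.writerow(row)
    return buffer.getvalue()
```

For example, `jobs_to_csv([{"title": "Dev"}])` (with `title` as a made-up field name) returns the text `title\nDev\n`, which you could write to a file opened with `newline=""`.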

Conclusion

In this tutorial, we scraped LinkedIn job listings without writing any code. Scrapingdog’s LinkedIn Jobs API returned the listings as JSON, and Data Fetcher imported that data into Airtable for organized storage and analysis. A task that seems to require programming is now accessible to non-coders, whether they want to gather insights, monitor job trends, or curate data for their projects.

You can also use the Data Fetcher extension to store data from other APIs in Airtable. It works well as a bridge between the data collection process and non-coders.

To learn more about this extension, refer to the Data Fetcher documentation. For more tutorials like this, keep visiting our blog; we will be publishing more such content, so stay tuned.

Additional Resources

Here are a few additional resources that you may find helpful during your web scraping journey:

Web Scraping with Scrapingdog