TL;DR

- Python tutorial using requests + BeautifulSoup to scrape Yellow Pages restaurant listings (name, phone, address).
- Follows each listing’s detail page to capture website and email.
- Produces a structured list you can save to CSV, handy for local lead generation.

Scraping Yellow Pages to collect data or generate leads is a great idea for businesses looking for prospects in their local area. Because Yellow Pages holds one of the largest pools of business listings, scraping it gives you a clear picture of who operates near your business.

Now, the question is how you will generate leads and extract data to get the emails or contact numbers of these prospects.

In this blog, we are going to create a Yellow Pages scraper using Python to get phone numbers and other details.

Let’s Scrape Yellow Pages for Leads

Let’s assume you are a kitchen utensil manufacturer or dealer searching for potential buyers for your products. Since restaurants are likely to be one of your major targets, we will scrape restaurant details from Yellow Pages.

We are most interested in each restaurant’s name, phone number, and address. We will target restaurants in New York, so our target URL is https://www.yellowpages.com/new-york-ny/restaurants.

We will use Python to get data from Yellow Pages, and I am assuming that you already have Python installed. We will also use the requests and BeautifulSoup (beautifulsoup4) libraries for this task.


So, the first task is to create a folder and install these libraries.

```bash
mkdir yellowpages
pip install requests
pip install beautifulsoup4
```

Everything is set, so let’s code. First, create a Python file; you can name it anything you like. I will use ypages.py. In that file, import the libraries we just installed and initialize the variables we will use.

```python
import requests
from bs4 import BeautifulSoup

data = []
obj = {}
```

Next, declare the Yellow Pages page we want to scrape.

```python
target_website = "https://www.yellowpages.com/new-york-ny/restaurants"

resp = requests.get(target_website).text
```

We have made a GET request to our target website using the requests library. Next, we will parse the HTML stored in the resp variable with BS4 to create an HTML tree from which we can extract the data we need.

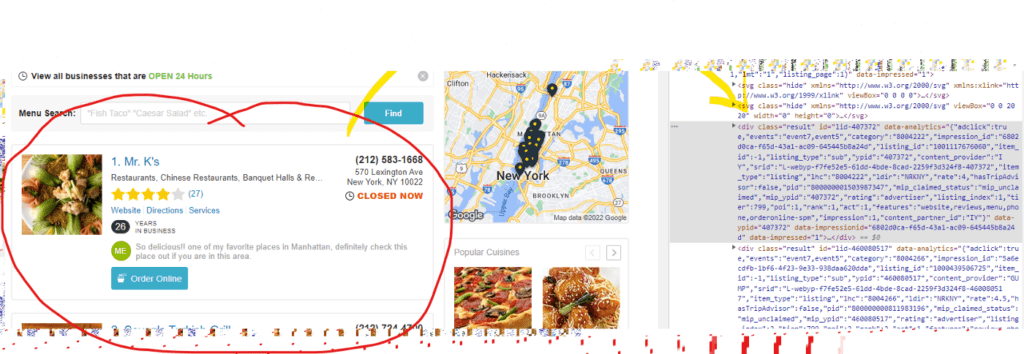

First, inspect the webpage to check where all these results are stored.

As we can see, all of these results are stored in div tags with the class result. The name, address, and phone number are inside this div, so let’s find the location of each of them too.

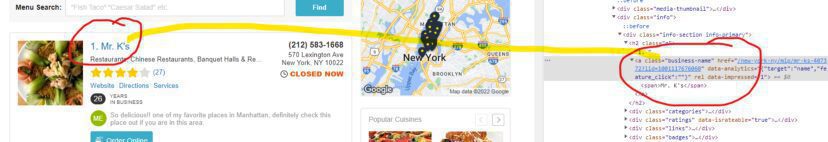

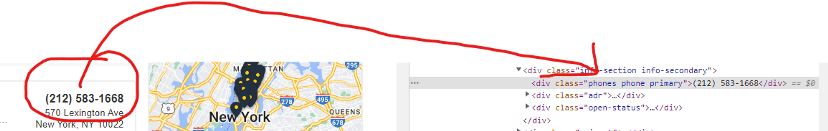

The name is stored in an a (anchor) tag with the class business-name. Next, we will check for phone numbers.

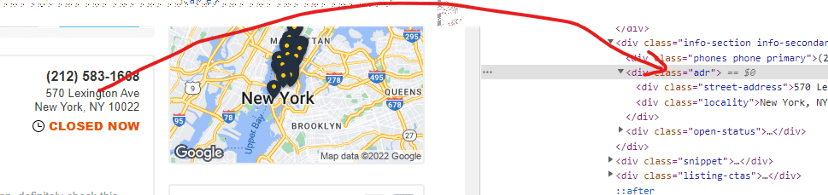

The phone number is stored in a div tag with the class phones. The last field is the address.

The address is stored in a div tag with the class adr. Now that we know where all the information lives, let’s run a for loop to extract the details from these results one by one.

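```python
# Parse the HTML into a tree and collect every listing card
soup = BeautifulSoup(resp, 'html.parser')
allResults = soup.find_all("div", {"class": "result"})

for result in allResults:
    obj = {}
    # find() returns None when a tag is missing, so .get_text() raises AttributeError
    try:
        obj["name"] = result.find("a", {"class": "business-name"}).get_text(" ", strip=True)
    except AttributeError:
        obj["name"] = None

    try:
        obj["phoneNumber"] = result.find("div", {"class": "phones"}).get_text(" ", strip=True)
    except AttributeError:
        obj["phoneNumber"] = None

    try:
        obj["address"] = result.find("div", {"class": "adr"}).get_text(" ", strip=True)
    except AttributeError:
        obj["address"] = None

    data.append(obj)

print(data)
```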

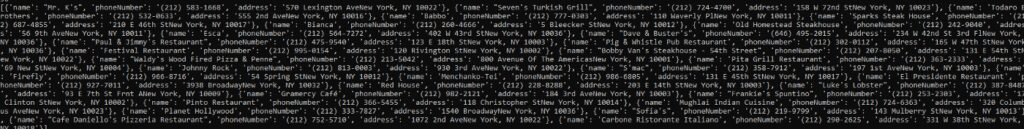

We use try and except blocks so that a missing field is stored as None instead of crashing the script. This for loop reaches every result, and all the data is stored in the data list. Once you print it, you will get this.
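If you prefer CSS selectors, the same extraction can be written with BeautifulSoup’s select() and select_one() methods. The sketch below runs against a small, made-up HTML snippet that only mimics the class names described above (result, business-name, phones, adr); the real Yellow Pages markup is larger and may change at any time. parse_listings is an illustrative helper name, and using a conditional instead of try/except is just a stylistic alternative.

```python
from bs4 import BeautifulSoup

# Hypothetical markup that only mimics the class names described above
SAMPLE_HTML = """
<div class="result">
  <a class="business-name" href="/new-york-ny/mip/example-1">Example Diner</a>
  <div class="phones">(212) 555-0100</div>
  <div class="adr"><div>1 Main St</div><div>New York, NY 10001</div></div>
</div>
<div class="result">
  <a class="business-name" href="/new-york-ny/mip/example-2">No Phone Cafe</a>
  <div class="adr"><div>2 Main St</div><div>New York, NY 10002</div></div>
</div>
"""

FIELDS = (("name", "a.business-name"),
          ("phoneNumber", "div.phones"),
          ("address", "div.adr"))

def parse_listings(html):
    """Return one dict per div.result, using None for missing fields."""
    soup = BeautifulSoup(html, "html.parser")
    listings = []
    for card in soup.select("div.result"):
        row = {}
        for key, selector in FIELDS:
            tag = card.select_one(selector)  # None when nothing matches
            # Join nested text (e.g. street and city) with a single space
            row[key] = tag.get_text(" ", strip=True) if tag else None
        listings.append(row)
    return listings

for row in parse_listings(SAMPLE_HTML):
    print(row)
```

Because the second sample listing has no phones div, its phoneNumber comes back as None rather than raising an error.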

We have successfully scraped all the target data. Now, what if you want to scrape each restaurant’s email and website as well?

For that, you have to open all the dedicated pages of each restaurant and then extract them. Let’s see how it can be done.

Scraping Emails & Website Address of Businesses from Yellow Pages

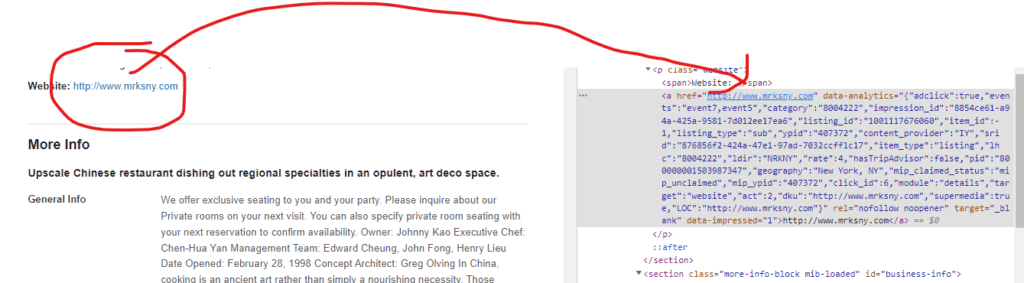

Let’s first check where the email and website address appear on a restaurant’s Yellow Pages page.

The website URL is stored in the href attribute of an a tag inside a p tag with the class website.

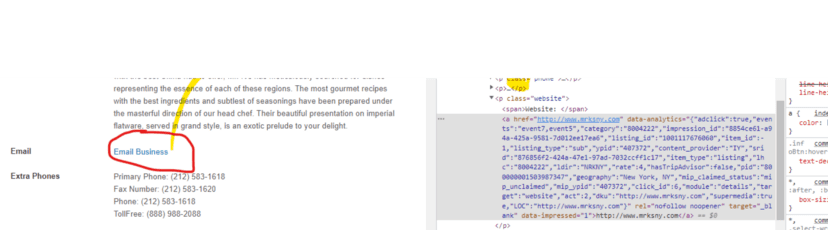

The email is stored in the href attribute of an a tag with the class email-business, prefixed with mailto:.

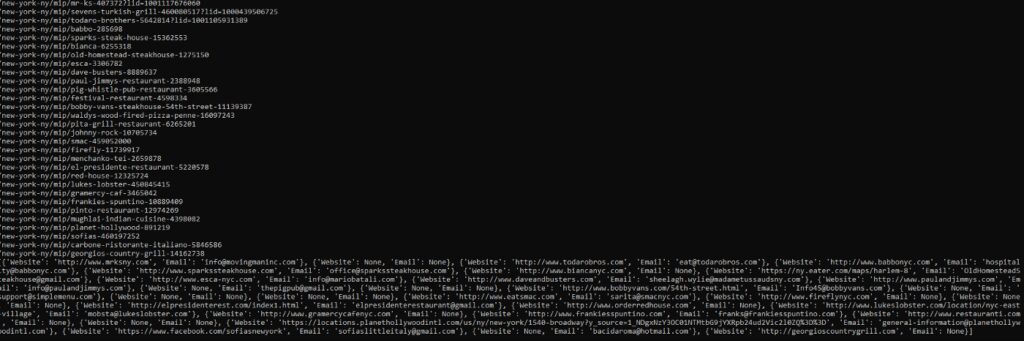
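To test the website and email lookups without hitting the live site, you can run them against a saved or made-up detail page. In this sketch, DETAIL_HTML is hypothetical markup that only reproduces the tags described above, and parse_contact is an illustrative helper name, not part of BeautifulSoup.

```python
from bs4 import BeautifulSoup

# Hypothetical detail-page markup mimicking the tags described above
DETAIL_HTML = """
<p class="website"><a href="https://example-diner.example">Visit Website</a></p>
<a class="email-business" href="mailto:hello@example-diner.example">Email Business</a>
"""

def parse_contact(html):
    """Return the website and email found on a detail page, or None for each."""
    soup = BeautifulSoup(html, "html.parser")
    website_tag = soup.select_one("p.website a")
    email_tag = soup.select_one("a.email-business")
    website = website_tag.get("href") if website_tag else None
    email = email_tag.get("href") if email_tag else None
    # Drop the mailto: scheme so only the address remains
    if email and email.startswith("mailto:"):
        email = email[len("mailto:"):]
    return {"Website": website, "Email": email}

print(parse_contact(DETAIL_HTML))
```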

Our approach is to build a new target URL for each restaurant. For example, Mr. K’s restaurant has a dedicated URL https://www.yellowpages.com/new-york-ny/mip/mr-ks-407372?lid=1001117676060.

The part up to .com stays the same, but the path after it changes from restaurant to restaurant. We can find these paths on the main page, in the href attribute of each business-name link.
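Joining the domain and the path with string formatting works because each href starts with a slash. A slightly more robust sketch uses urljoin from Python’s standard urllib.parse module, which also passes through hrefs that are already absolute. detail_url and BASE_URL are illustrative names; the sample path is the Mr. K’s URL from above.

```python
from urllib.parse import urljoin

BASE_URL = "https://www.yellowpages.com"

def detail_url(href):
    """Turn a listing href into an absolute URL; return None if href is missing."""
    if not href:
        return None
    return urljoin(BASE_URL, href)

print(detail_url("/new-york-ny/mip/mr-ks-407372?lid=1001117676060"))
# → https://www.yellowpages.com/new-york-ny/mip/mr-ks-407372?lid=1001117676060
```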

Here are the steps we are going to do in order to scrape our data of interest.

- Extract the relative path of each listing (stored as lateral_string in the code below) from the main page.
- Make a GET request to the new URL built from that path.
- Extract the email and website from this new page.
- Repeat for every result on the main page.

We will make some changes inside the last for loop.

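```python
for result in allResults:
    try:
        lateral_string = result.find("a", {"class": "business-name"}).get('href')
    except AttributeError:
        lateral_string = None

    # Skip listings without a link instead of requesting ".../None"
    if lateral_string is None:
        continue

    # The href is a relative path such as /new-york-ny/mip/...
    target_website = 'https://www.yellowpages.com{}'.format(lateral_string)
    print(lateral_string)

    resp = requests.get(target_website).text
    soup = BeautifulSoup(resp, 'html.parser')
```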

Our new target_website is a link to the restaurant’s dedicated page. Next, we extract the website and email from these pages.

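```python
for result in allResults:
    obj = {}
    try:
        lateral_string = result.find("a", {"class": "business-name"}).get('href')
    except AttributeError:
        lateral_string = None

    # Skip listings without a link instead of requesting ".../None"
    if lateral_string is None:
        continue

    target_website = 'https://www.yellowpages.com{}'.format(lateral_string)
    print(lateral_string)

    resp = requests.get(target_website).text
    soup = BeautifulSoup(resp, 'html.parser')

    # Website: href of the link inside <p class="website">
    try:
        obj["Website"] = soup.find("p", {"class": "website"}).find("a").get("href")
    except AttributeError:
        obj["Website"] = None

    # Email: href of <a class="email-business">, minus the "mailto:" prefix
    try:
        obj["Email"] = soup.find("a", {"class": "email-business"}).get('href').replace("mailto:", "")
    except AttributeError:
        obj["Email"] = None

    data.append(obj)

print(data)
```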

BS4’s .get() method lets us read the value of any attribute of a tag. Once we print data, we get all the emails and website URLs stored in a list of dictionaries.

Now, you can use these prospects for cold emailing or cold calling.
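A short sketch of how .get() behaves compared with square-bracket access, using a one-tag sample that only mimics the email link described above: .get() returns None (or a default you pass) for a missing attribute, while square brackets raise KeyError. Note that class comes back as a list because BeautifulSoup treats it as a multi-valued attribute.

```python
from bs4 import BeautifulSoup

# A one-tag sample mimicking the email link on a detail page
soup = BeautifulSoup('<a class="email-business" href="mailto:owner@example.com">Email</a>', "html.parser")
link = soup.a  # first <a> tag in the document

print(link.get("href"))          # mailto:owner@example.com
print(link.get("title"))         # None, because the attribute is missing
print(link.get("title", "n/a"))  # n/a, a custom default
print(link.get("class"))         # ['email-business'], class is multi-valued

try:
    link["title"]  # square brackets raise KeyError for missing attributes
except KeyError:
    print("no title attribute")
```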


Complete Code

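```python
import requests
from bs4 import BeautifulSoup

data = []

target_website = "https://www.yellowpages.com/new-york-ny/restaurants"

resp = requests.get(target_website)
soup = BeautifulSoup(resp.text, 'html.parser')

# Every listing on the results page sits inside <div class="result">
allResults = soup.find_all("div", {"class": "result"})

for result in allResults:
    obj = {}

    # Name, phone, and address come straight from the listing card
    for key, tag, cls in (("name", "a", "business-name"),
                          ("phoneNumber", "div", "phones"),
                          ("address", "div", "adr")):
        try:
            obj[key] = result.find(tag, {"class": cls}).get_text(" ", strip=True)
        except AttributeError:  # find() returned None: field missing
            obj[key] = None

    obj["Website"] = None
    obj["Email"] = None

    try:
        lateral_string = result.find("a", {"class": "business-name"}).get('href')
    except AttributeError:
        lateral_string = None

    # Follow the detail page only when the listing has a link
    if lateral_string:
        target_website = 'https://www.yellowpages.com{}'.format(lateral_string)
        print(lateral_string)

        detail_soup = BeautifulSoup(requests.get(target_website).text, 'html.parser')

        try:
            obj["Website"] = detail_soup.find("p", {"class": "website"}).find("a").get("href")
        except AttributeError:
            pass  # keep None when there is no website link

        try:
            obj["Email"] = detail_soup.find("a", {"class": "email-business"}).get('href').replace("mailto:", "")
        except AttributeError:
            pass  # keep None when there is no email link

    data.append(obj)

print(data)
```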
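The TL;DR mentions saving the results to CSV, which the code above stops short of. A minimal sketch with Python’s built-in csv module could look like this; save_to_csv, the sample rows, and the file name yellowpages_leads.csv are illustrative, not part of the original script.

```python
import csv

def save_to_csv(rows, path):
    """Write a list of dicts (like data) to CSV; missing keys become empty cells."""
    # Collect every key that appears in any row, keeping first-seen order
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)  # None values are written as empty cells

# Hypothetical rows in the same shape as the data list above
sample = [
    {"Website": "https://example-diner.example", "Email": "hello@example-diner.example"},
    {"Website": None, "Email": None},
]
save_to_csv(sample, "yellowpages_leads.csv")
```

In the real script you would call save_to_csv(data, "yellowpages_leads.csv") after the loop finishes.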

Here are some key takeaways:

- Yellow Pages holds structured business information like names, addresses, phone numbers, and categories.
- Crawling Yellow Pages isn’t straightforward due to pagination, dynamic loading, and anti-bot protections.
- Scraping at scale requires managing proxies, request throttling, and CAPTCHA challenges.
- Extracted business data can power lead lists, market research, and local analytics.
- Using a dedicated scraping API simplifies large-scale Yellow Pages data extraction without heavy infrastructure.
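As a minimal sketch of the request-throttling idea (a generic technique, not something Yellow Pages or Scrapingdog documents), the helper below pauses between requests and retries failed ones with exponential backoff. polite_fetch_all and its parameters are hypothetical names. fetch is whatever function you use to download a page, which keeps the helper easy to test offline.

```python
import time

def polite_fetch_all(urls, fetch, delay=1.0, retries=3, backoff=2.0):
    """Fetch each URL with a pause between requests and simple retries.

    fetch takes a URL and returns the page text; it should raise an
    exception on failure so the request can be retried. URLs that still
    fail after the last attempt map to None.
    """
    pages = {}
    for url in urls:
        wait = delay
        for attempt in range(1, retries + 1):
            try:
                pages[url] = fetch(url)
                break
            except Exception:  # broad on purpose: any fetch failure triggers a retry
                if attempt == retries:
                    pages[url] = None  # give up on this URL
                else:
                    time.sleep(wait)
                    wait *= backoff  # exponential backoff between retries
        time.sleep(delay)  # throttle before moving to the next URL
    return pages
```

Note that requests does not raise on HTTP error codes by default, so a fetch function built on it should call requests.get(url, timeout=10), then resp.raise_for_status(), and only then return resp.text; otherwise a 403 or 429 page would be stored as if it were a success.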

Conclusion

We learned how to create a target prospect list for your company. Using this approach or code, you can scrape emails and phone numbers for any industry you like. As I said, Yellow Pages is a data-rich website that can be used for multiple purposes.

Collecting prospects is not limited to Yellow Pages; you can scrape Google as well to get qualified leads. Of course, you will need a more advanced web scraper to extract data from it. For that, you can always use Scrapingdog’s Web Scraping API, which offers a generous 1,000 free calls to new users.

I hope you liked this tutorial. If you did, please do not hesitate to share it online. Thanks again for reading!

Frequently Asked Questions

This depends entirely on what you are extracting from Yellow Pages. However, one of the best ways to do it at scale is with a web scraping API, which lets you extract data quickly without getting blocked.

It might, since Yellow Pages has anti-scraping measures to detect automated data extraction. Hence, it is advisable to scrape in a way that Yellow Pages can’t detect. You can read tips to avoid being blocked from websites while scraping.

Additional Resources

Here are a few other resources you can read: