TL;DR

- Explains crawling vs scraping; start from a seed URL and follow links recursively to collect pages.

- Builds a mini crawler in Python with `requests` + `BeautifulSoup` (Books to Scrape demo).

- Scales up with Scrapy (`CrawlSpider` + link rules) and exports results to JSON.

- Scaling tips: use proxies (Scrapingdog datacenter proxy; 1k free credits), tune delays/concurrency, and respect `robots.txt`.

Web crawling is a technique for automatically navigating through many URLs and collecting large amounts of data. A crawler can discover the URLs on one or more domains and extract information from them.

Search engines such as Google, Yahoo, and Bing rely heavily on this technique: they crawl the web to index pages, which lets them rank websites and return relevant results for a user's query.

In this article, we will first look at the main difference between web crawling and web scraping, so you can draw a clear line between the two.

Before we build a full-fledged web crawler, I will show you how to create a small one using requests and BeautifulSoup. This will give you a clear idea of what a web crawler actually does. Then we will build a production-ready web crawler using Scrapy.

What is web crawling?

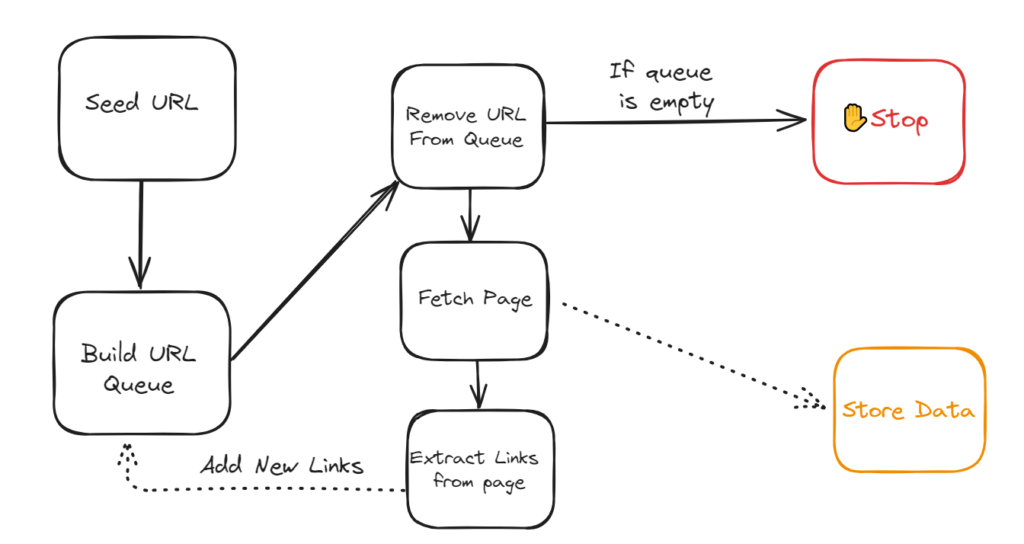

A web crawler is an automated bot whose job is to visit multiple URLs on a single website or across multiple websites and download the content of those pages. This data can then be used for many purposes, such as price analysis, search engine indexing, and monitoring websites for changes.

It all starts with the seed URL, which is the entry point of any web crawler. The crawler downloads the page's HTML content by making a GET request. The downloaded HTML is then parsed with an HTML parsing library to extract the most valuable data from it.

The extracted data might contain links to other pages on the same website. The crawler then makes GET requests to these pages as well and repeats the same process it followed for the seed URL. Because this process repeats recursively, the script can visit every reachable URL on the domain and gather all the information available.
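Here is that loop in miniature. To keep it self-contained, it crawls a small hypothetical link graph stored in a dictionary instead of real pages, so `get_links()` stands in for the GET request plus HTML parsing. In practice the "recursion" is usually written as a loop over a queue of URLs still to visit (the frontier) plus a record of URLs already seen, and that is what this sketch does.

```python
from collections import deque

# Hypothetical link graph standing in for real pages:
# each URL maps to the links a crawler would find on that page.
LINK_GRAPH = {
    "/": ["/catalogue/page-1", "/about"],
    "/catalogue/page-1": ["/catalogue/book-a", "/catalogue/page-2"],
    "/catalogue/page-2": ["/catalogue/book-b", "/catalogue/page-1"],
    "/catalogue/book-a": ["/"],
    "/catalogue/book-b": [],
    "/about": [],
}


def crawl(seed, get_links):
    """Visit every page reachable from `seed`, each exactly once."""
    visited = []              # pages in the order they were "downloaded"
    seen = {seed}             # URLs already queued, so none is fetched twice
    frontier = deque([seed])  # URLs waiting to be visited
    while frontier:
        url = frontier.popleft()
        visited.append(url)
        for link in get_links(url):  # stands in for GET + parse
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


order = crawl("/", lambda url: LINK_GRAPH.get(url, []))
print(order)
```

Tracking seen URLs matters because pages link back to each other (`/catalogue/book-a` links back to the seed here); without that set the crawl would loop forever.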

How is web crawling different from web scraping?

Web scraping and web crawling might sound similar, but there is a fine line between them that makes them quite different.

Web scraping, in its simplest form, means making a GET request to a single page and extracting the data present on that page. It does not look for other URLs on the page. That is why web scraping is comparatively fast: it works on a single page only.
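For contrast, here is a minimal scraping sketch: it extracts two fields from one page and ignores the link on it. To stay dependency-free, it uses the standard library's `html.parser` instead of BeautifulSoup, and a hypothetical, trimmed-down product page modeled on Books to Scrape instead of a live GET request. The `ProductParser` class and `scrape()` function are illustrative names.

```python
from html.parser import HTMLParser

# Hypothetical, trimmed-down product page used instead of a live request.
SAMPLE_HTML = """
<div class="product_main">
  <h1>A Light in the Attic</h1>
  <p class="price_color">£51.77</p>
</div>
<a href="../../catalogue/page-2.html">next</a>
"""


class ProductParser(HTMLParser):
    """Collect the text of the first <h1> and of the element with class price_color."""

    def __init__(self):
        super().__init__()
        self.data = {}
        self._current = None  # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h1" and "title" not in self.data:
            self._current = "title"
        elif "price_color" in classes:
            self._current = "price"

    def handle_endtag(self, tag):
        self._current = None

    def handle_data(self, data):
        if self._current and data.strip():
            self.data[self._current] = data.strip()


def scrape(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.data  # the <a> link is never followed: no crawling here


print(scrape(SAMPLE_HTML))
```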

Read More: What is Web Scraping?

Web Crawling using Requests & BeautifulSoup

In my experience, the combination of requests and BS4 (BeautifulSoup) is the best when it comes to downloading and parsing raw HTML. If you want to learn more about the best libraries for web scraping with Python, check out this guide.

In this section, we will create a small crawler for Books to Scrape (https://books.toscrape.com/), a demo site. Following the flowchart shown above, the crawler looks for links starting from the seed URL, then visits each link and extracts data.

Let’s first install these two libraries in the coding environment.

```bash
pip install requests
pip install beautifulsoup4
```

We will also use urllib.parse, but since it is part of the Python standard library, there is no need to install it.

A basic Python crawler for our target website will look like this.

```python
import requests
from bs4 import BeautifulSoup
from urllib.parse import urljoin

# URL of the website to crawl
base_url = "https://books.toscrape.com/"

# Set to store visited URLs
visited_urls = set()

# List to store URLs to visit next
urls_to_visit = [base_url]


# Function to crawl a page and extract links
def crawl_page(url):
    try:
        # A timeout keeps one slow page from hanging the whole crawl
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # Raise an exception for HTTP errors
        soup = BeautifulSoup(response.content, "html.parser")

        # Extract links and turn relative hrefs into absolute URLs
        links = []
        for link in soup.find_all("a", href=True):
            next_url = urljoin(url, link["href"])
            links.append(next_url)
        return links
    except requests.exceptions.RequestException as e:
        print(f"Error crawling {url}: {e}")
        return []


# Crawl the website
while urls_to_visit:
    current_url = urls_to_visit.pop(0)  # Dequeue the first URL
    if current_url in visited_urls:
        continue

    print(f"Crawling: {current_url}")
    new_links = crawl_page(current_url)
    visited_urls.add(current_url)
    urls_to_visit.extend(new_links)

print("Crawling finished.")
```

It is a simple piece of code, but let me break it down for you.

1. We import the required libraries: `requests`, `BeautifulSoup`, and `urljoin` from `urllib.parse`.

2. We define the `base_url` of the website and initialize a set `visited_urls` to store visited URLs.

3. We define a `urls_to_visit` list to store URLs that need to be crawled. We start with the base URL.

4. We define the `crawl_page()` function to fetch a web page, parse its HTML content, and extract links from it.

5. Inside the function, we use `requests.get()` to fetch the page and `BeautifulSoup` to parse its content.

6. We iterate through each `<a>` tag to extract links, convert them to absolute URLs using `urljoin()`, and add them to the `links` list.

7. The `while` loop continues as long as there are URLs in the `urls_to_visit` list. For each URL, we:

   - Dequeue the URL and check if it has been visited before.
   - Call the `crawl_page()` function to fetch the page and extract links.
   - Add the current URL to the `visited_urls` set and enqueue the new links to `urls_to_visit`.

8. Once the crawling process is complete, we print a message indicating that the process has finished.
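The `urljoin()` step is what keeps relative links usable. Below is a small sketch of a hypothetical `normalize_link()` helper that also drops `#fragments` with `urldefrag()`, since a fragment points to the same page and would otherwise be stored as a separate entry in `visited_urls`. The hrefs are illustrative, written in the style of Books to Scrape links.

```python
from urllib.parse import urldefrag, urljoin


def normalize_link(page_url, href):
    """Turn an href found on page_url into an absolute URL without a #fragment."""
    absolute = urljoin(page_url, href)    # resolves relative paths such as ../../
    url, _fragment = urldefrag(absolute)  # '#top' and similar do not change the page
    return url


category_page = "https://books.toscrape.com/catalogue/category/books/travel_2/index.html"
print(normalize_link(category_page, "../../../a-light-in-the-attic_1000/index.html"))
print(normalize_link("https://books.toscrape.com/", "catalogue/page-2.html#top"))
```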

To run this code, type the following command in your terminal. I have named my file crawl.py.

```bash
python crawl.py
```

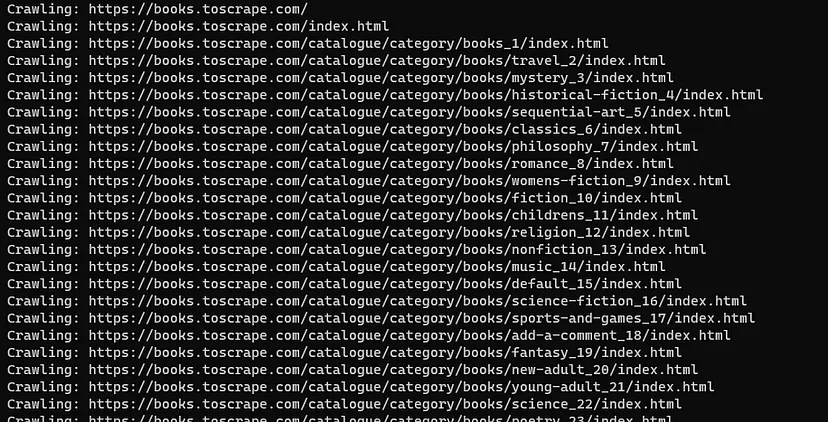

Once your crawler starts, this will appear on your screen.

This code might give you an idea of how web crawling works. However, there are certain limitations and potential disadvantages to this code.

- No Parallelism: The code does not utilize parallel processing, meaning that only one request is processed at a time. Parallelizing the crawling process can significantly improve the speed of crawling.

- Lack of Error Handling: The code lacks detailed error handling for various scenarios, such as handling specific HTTP errors, connection timeouts, and more. Proper error handling is crucial for robust crawling.

- Breadth-First Only: The code always crawls breadth-first, but in certain cases depth-first crawling might be more efficient, depending on the structure of the website and the goals of the crawl. If you want to learn more about BFS and DFS, read this guide. In short, BFS visits pages in order of their distance (in clicks) from the seed URL, so it reaches each page along the shortest link path.
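The first two limitations can be eased without leaving requests. Below is a minimal sketch of a hypothetical `fetch_batch()` helper built on the standard library's `ThreadPoolExecutor`: it fetches several URLs concurrently and records failures per URL instead of stopping. A fake fetch function (also hypothetical) stands in for something like `requests.get(url, timeout=10)` so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_batch(urls, fetch, max_workers=8):
    """Fetch many URLs concurrently; return ({url: result}, {url: error}).

    `fetch` is any callable that takes a URL, e.g. a wrapper around
    requests.get(url, timeout=10). Errors are collected per URL instead
    of aborting the whole batch.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # keep going; remember what failed
                errors[url] = exc
    return results, errors


def fake_fetch(url):
    # Hypothetical stand-in for a network call.
    if url.endswith("broken"):
        raise ConnectionError("simulated failure")
    return f"<html>{url}</html>"


ok, failed = fetch_batch(["/a", "/b", "/broken"], fake_fetch)
print(sorted(ok), list(failed))
```

In the crawler above, you could take several URLs from `urls_to_visit` at a time and hand them to such a helper. As for traversal order, replacing `urls_to_visit.pop(0)` with `urls_to_visit.pop()` makes the loop take the most recently discovered URL first, which turns the breadth-first crawl into a depth-first one.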

In the next section, we are going to create a web crawler using Scrapy which will help us eliminate these limitations.

Web Crawler using Scrapy

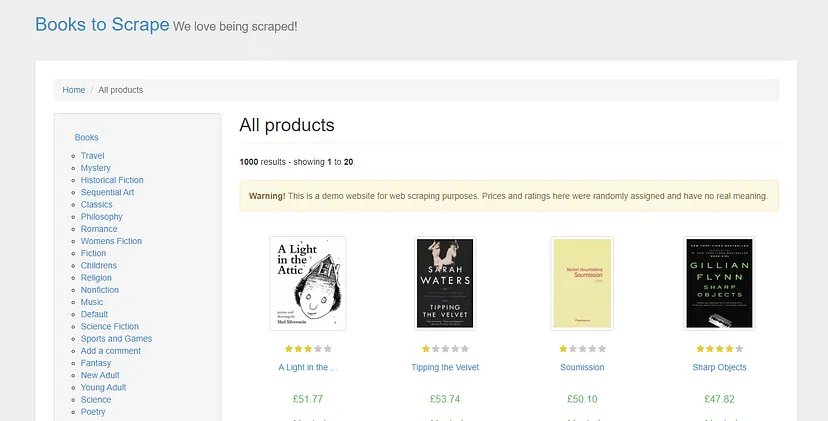

Again we are going to use the same site for crawling with Scrapy.

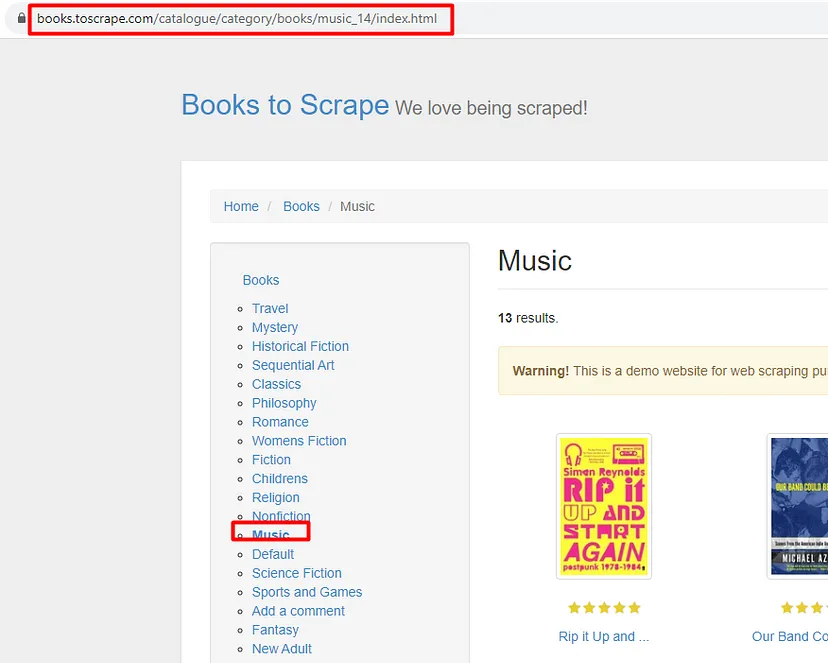

This page has a lot of information like warnings, titles, categories, etc. Our task would be to find links that match a certain pattern. For example, when we click on any of the categories we can see a certain pattern in the URL.

Every category URL contains /catalogue/category/books/, for example https://books.toscrape.com/catalogue/category/books/travel_2/index.html. But when we click on a book, the URL only contains /catalogue, for example https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html.

So, one task would be to instruct our web crawler to find all the links that match the /catalogue/category pattern and keep following them to discover every category page. That is the mission of our web crawler.

In this case, the task is trivial because the sidebar lists all the categories. In a real-world project, however, you will often see only something like the top 10 categories, while a hundred more have to be discovered elsewhere; for example, each book page might list another 10 sub-categories for that book.

So, you can instruct the crawler to go into all the different book pages to find all the secondary categories and then collect all the pages that are category pages.

This could be the web crawling task and the web scraping task could be to collect titles and prices of the books from each dedicated book page. I hope you got the idea now. Let’s proceed with the coding part!

We will start by installing Scrapy. Scrapy is a web scraping and web crawling framework: not just a simple library, but a full framework. When you create a project with it, it generates multiple Python files in your folder. Type the following command in your terminal to install it:

```bash
pip install scrapy
```

Once you install it you can go back to your working directory and run this command.

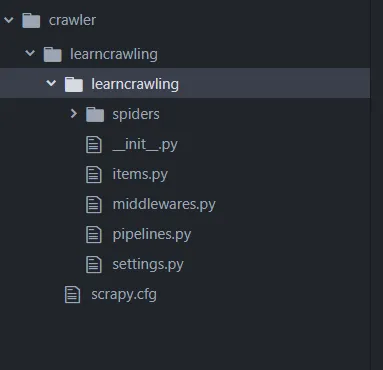

```bash
scrapy startproject learncrawling
```

You can of course use whatever name you like; I have used learncrawling. Once the project is created, you will see a new directory with the project name inside your chosen directory.

You will see a bunch of other Python files as well. We will cover some of these files later in this blog. But the most important directory here is the spiders directory. Spiders are the classes Scrapy uses to define the web crawling process.

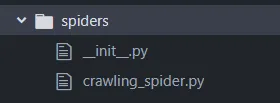

We can create our own custom spiders to define our own crawling process. Let's create a new Python file inside the spiders directory (I named mine crawling_spider.py) and write some code in it.

```python
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor
```

- `from scrapy.spiders import CrawlSpider, Rule`: This line imports the `CrawlSpider` and `Rule` classes from the `scrapy.spiders` module. `CrawlSpider` is a subclass of Scrapy's base `Spider` class, designed specifically for crawling websites by following links. `Rule` is used to define rules for extracting and following links.

- `from scrapy.linkextractors import LinkExtractor`: This line imports the `LinkExtractor` class from the `scrapy.linkextractors` module. `LinkExtractor` is a utility class that extracts links from web pages based on specified rules and patterns.

Then we want to create a new class which is going to be our custom spider class. I’m going to call this class CrawlingSpider and this is going to inherit from the CrawlSpider class.

```python
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor


class CrawlingSpider(CrawlSpider):
    name = "mycrawler"
    allowed_domains = ["toscrape.com"]
    start_urls = ["https://books.toscrape.com/"]

    rules = (
        Rule(LinkExtractor(allow="catalogue/category")),
    )
```

name = "mycrawler": This attribute specifies the name of the spider. The name is used to uniquely identify the spider when running Scrapy commands.

allowed_domains = ["toscrape.com"]: This attribute defines a list of domain names that the spider is allowed to crawl. In this case, it specifies that the spider should only crawl within the domain “toscrape.com”.

start_urls = ["https://books.toscrape.com/"]: This attribute provides a list of starting URLs for the spider. The spider will begin crawling from these URLs.

Rule(LinkExtractor(allow="catalogue/category")): This line defines a rule using the Rule class. It uses a LinkExtractor to extract links, and the allow parameter is a regular expression matched against each URL; here it selects URLs containing the text "catalogue/category". Because this rule has no callback, Scrapy follows the matched links by default and applies the rules again on the pages it finds.

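To run the spider, use the scrapy crawl command with the spider's name from inside the project directory:

```bash
scrapy crawl mycrawler
```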

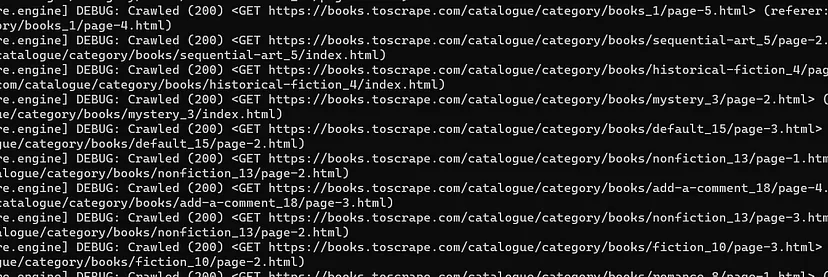

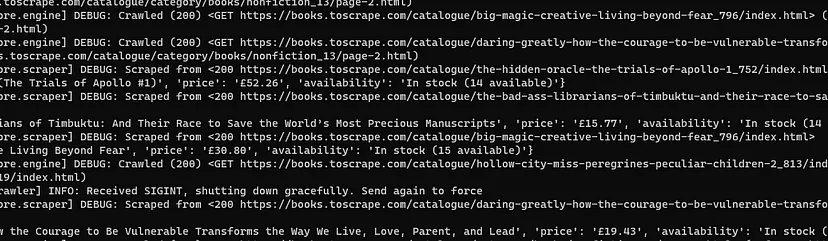

Once it starts running, you will see something like this in your terminal.

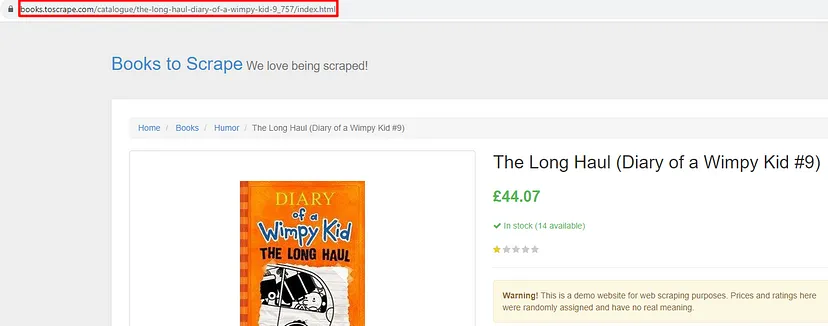

Our crawler is finding all these URLs. But what if we also want to find the individual book pages and scrape certain information from them?

To do that, we will extract some data from each individual book page. To find the book pages, I will define a new rule.

```python
Rule(LinkExtractor(allow="catalogue", deny="category"), callback="parse_item")
```

This rule matches every URL containing catalogue but rejects those containing category. The response for each matched page is passed to the parse_item callback function, which handles the web scraping part.
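To see which URLs each rule picks up, here is a plain-Python approximation of the allow/deny matching, run on sample Books to Scrape URLs. It is a sketch for intuition, not Scrapy's actual implementation; the `matches()` and `classify()` helpers are hypothetical. It relies on two documented behaviors: `allow`/`deny` are regular expressions tested against the absolute URL (with `deny` taking precedence), and when several rules match a link, `CrawlSpider` uses the first one.

```python
import re

# Plain-Python approximation of the two rules (not Scrapy's code).
CATEGORY_RULE = {"allow": [re.compile("catalogue/category")], "deny": []}
BOOK_RULE = {"allow": [re.compile("catalogue")], "deny": [re.compile("category")]}


def matches(url, rule):
    """True if an allow pattern is found in url and no deny pattern is."""
    allowed = any(p.search(url) for p in rule["allow"])
    denied = any(p.search(url) for p in rule["deny"])
    return allowed and not denied


def classify(url):
    """Mimic CrawlSpider: the first rule that matches a link handles it."""
    if matches(url, CATEGORY_RULE):
        return "follow"      # rule 1: no callback, so its links are followed
    if matches(url, BOOK_RULE):
        return "parse_item"  # rule 2: the response goes to the callback
    return "ignored"


urls = [
    "https://books.toscrape.com/catalogue/category/books/travel_2/index.html",
    "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html",
    "https://books.toscrape.com/catalogue/page-2.html",
    "https://books.toscrape.com/index.html",
]
for url in urls:
    print(f"{classify(url):<10} {url}")
```

Note the third URL: a pagination link of the form catalogue/page-2.html also passes the book rule, so parse_item would likely receive a listing page and yield an item without a title. Adding a deny pattern such as `r"page-\d+"` to the second rule is one possible fix, worth verifying against the site.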

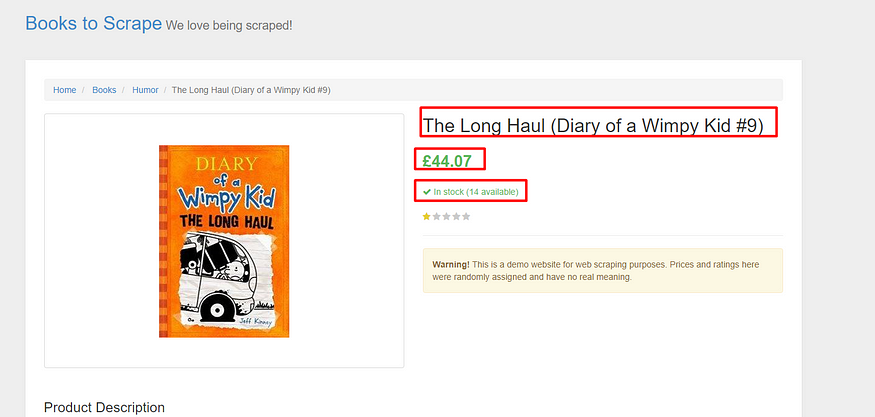

What Will We Scrape

We are going to scrape:

- Title of the book

- Price of the book

- Availability

Let’s find out their DOM locations one by one.

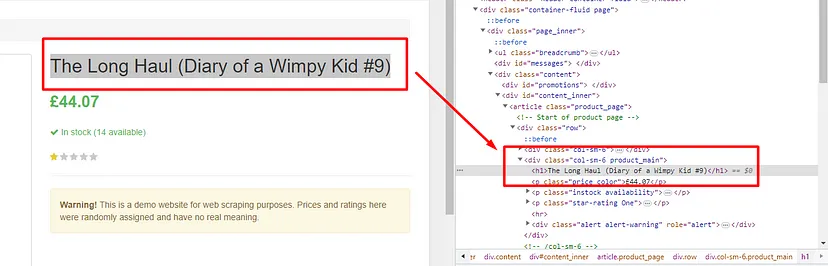

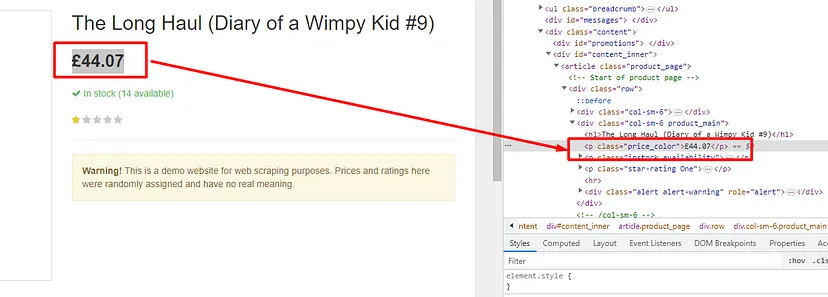

The title is in the h1 tag inside the element with the class product_main.

Pricing can be seen under the p tag with class price_color.

Availability can be seen under the p tag with class availability.

Let’s put all of this into the parse_item() method.

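```python
# parse_item() is a method of the CrawlingSpider class
def parse_item(self, response):
    yield {
        "title": response.css(".product_main h1::text").get(),
        "price": response.css(".price_color::text").get(),
        # [1] skips the whitespace-only text node before the stock icon
        "availability": response.css(".availability::text")[1].get().strip(),
    }
```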

- `yield { ... }`: This yields a dictionary (a plain dict literal, not a dictionary comprehension) as the output of the method, passing the extracted data on to Scrapy's item pipelines and feed exports.

- `"title": response.css(".product_main h1::text").get()`: This extracts the text of the `<h1>` element inside the element with the `product_main` class using a CSS selector. The `::text` pseudo-element selects the text content, and `.get()` returns the first match as a string (or `None` if nothing matched).

- `"price": response.css(".price_color::text").get()`: This extracts the text of the element with the `price_color` class, in the same way as the previous line.

- `"availability": response.css(".availability::text")[1].get().strip()`: The element with the `availability` class contains several text nodes. On these pages the first one is only whitespace before the stock icon, so `[1]` selects the second text node (indexing is zero-based), which holds text like "In stock (22 available)". `.get()` returns that text, and `.strip()` removes the surrounding whitespace.

Our spider is now ready and we can run it from our terminal. Once again we are going to use the same command.

```bash
scrapy crawl mycrawler
```

Let’s run it and see the results. I am pretty excited.

Our spider goes through all the book pages it can find and scrapes each one. It will probably take some time, but at the end you will see all the extracted data. You will also notice the dictionaries printed in the log; each one contains the data we wanted to scrape.

What if I want to save this data?

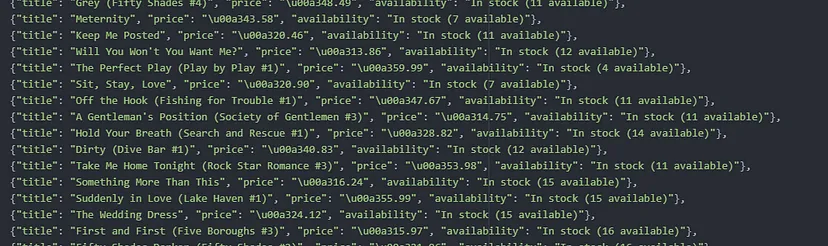

Saving the data in a JSON file

You can save this data into a JSON file very easily, directly from the command line, without making any changes to the code.

```bash
scrapy crawl mycrawler -o results.json
```

This will then take all the scraped information and save it in a JSON file.
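If you prefer to keep the export configuration in the project, Scrapy's `FEEDS` setting does the same job from settings.py. A sketch, with the file name and options as illustrative choices. One thing to watch: `-o` appends to an existing file, and for a JSON file that leaves two arrays back to back, which is no longer valid JSON. Scrapy 2.4 and later offer `-O` (capital O) to overwrite instead, which corresponds to `"overwrite": True` below.

```python
# settings.py (sketch): write every scraped item to results.json on each run.
FEEDS = {
    "results.json": {
        "format": "json",
        "encoding": "utf8",
        "indent": 4,
        "overwrite": True,  # replace the file instead of appending to it
    },
}
```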

We have got the title, price, and availability. You can of course refine this further, for example by extracting the integer from the availability string with a regex. But my purpose here was to show you how this can be done quickly and smoothly.
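For example, a small regex helper could turn the availability string into a number. This is a sketch assuming the text looks like "In stock (22 available)", as on the book pages; the `stock_count()` name and the "Out of stock" sample are hypothetical.

```python
import re


def stock_count(availability):
    """Extract N from a string like 'In stock (N available)'; 0 if absent."""
    match = re.search(r"\((\d+) available\)", availability)
    return int(match.group(1)) if match else 0


print(stock_count("In stock (22 available)"))  # 22
print(stock_count("Out of stock"))             # 0
```

Inside `parse_item()` you could then yield something like `"stock": stock_count(...)` next to the raw availability string.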

Web Crawling with Proxy

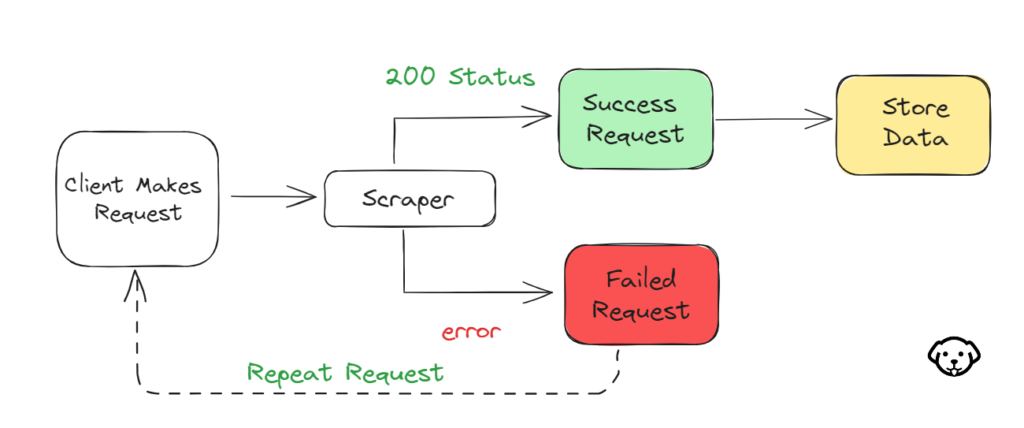

One problem you might face on your web crawling journey is getting blocked from the website. This happens because you may be sending too many requests, which can get your IP banned. That breaks your data pipeline.

To prevent this, you can use a proxy service that handles IP rotation and retries, and even passes appropriate headers to the website so your requests look like they come from a legitimate user rather than a data-hungry bot (which, of course, we are).

You can sign up for Scrapingdog's free datacenter proxy, which gives you 1,000 free credits to run a small crawler. Let's see how to integrate this proxy into your Scrapy project. It takes three steps.

1. Define a constant PROXY_SERVER in your crawling_spider.py file.

```python
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor


class CrawlingSpider(CrawlSpider):
    name = "mycrawler"
    allowed_domains = ["toscrape.com"]
    start_urls = ["https://books.toscrape.com/"]
    # Replace Your-API-Key with your own Scrapingdog API key
    PROXY_SERVER = "http://scrapingdog:Your-API-Key@proxy.scrapingdog.com:8081"

    rules = (
        Rule(LinkExtractor(allow="catalogue/category")),
        Rule(LinkExtractor(allow="catalogue", deny="category"), callback="parse_item"),
    )

    def parse_item(self, response):
        yield {
            "title": response.css(".product_main h1::text").get(),
            "price": response.css(".price_color::text").get(),
            "availability": response.css(".availability::text")[1].get().strip(),
        }
```

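2. Next, open the settings.py file. Find the DOWNLOADER_MIDDLEWARES section, uncomment it, and edit it like this:

```python
DOWNLOADER_MIDDLEWARES = {
    # Our middleware (step 3) sets request.meta["proxy"] on every request.
    "learncrawling.middlewares.LearncrawlingDownloaderMiddleware": 543,
    # HttpProxyMiddleware must run after it (higher number) so it can read that
    # proxy URL and turn its credentials into a Proxy-Authorization header.
    # 750 is its default position.
    "scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware": 750,
}
```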


This will enable the use of a proxy.

3. The final step is to make changes in the middlewares.py file. In this file, you will find a class named LearncrawlingDownloaderMiddleware. This is where we can control how requests are sent by attaching the proxy server to them.

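Inside this class, find the process_request() method and make it set the proxy on each request:

```python
def process_request(self, request, spider):
    # Route every request through the proxy defined on the spider in step 1
    request.meta["proxy"] = spider.PROXY_SERVER
    # Returning None lets Scrapy continue processing the request normally
    return None
```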

Now every request goes through the proxy, which makes it much less likely that your data pipeline gets blocked.

Of course, changing the IP alone will not get you past the anti-scraping defenses of every website, so this proxy also passes custom headers to help you get through them.

Scrapy also has practical limits on crawling speed. For example, when crawling a website like Amazon with Scrapy, I was able to scrape around 350 pages per minute in my own experiment. That works out to about 504,000 pages per day (350 × 60 × 24). This may not be enough if you want to scrape many millions of pages in just a few days. I came across an article in which the author scraped more than 250 million pages within 40 hours; I would recommend reading it.
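The arithmetic behind these figures is easy to check. A quick sketch using the 350 pages-per-minute measurement above and a hypothetical 10-million-page target:

```python
def pages_per_day(pages_per_minute):
    """Pages crawled in 24 hours at a constant rate."""
    return pages_per_minute * 60 * 24


def days_needed(total_pages, pages_per_minute):
    """Days needed to crawl total_pages at a constant rate."""
    return total_pages / pages_per_day(pages_per_minute)


print(pages_per_day(350))                      # 504000
print(round(days_needed(10_000_000, 350), 1))  # 19.8
# Rate implied by 250 million pages in 40 hours, in pages per minute
print(round(250_000_000 / (40 * 60)))          # 104167
```

At 350 pages per minute, 10 million pages would take close to three weeks, while 250 million pages in 40 hours works out to roughly 104,000 pages per minute, about 300 times faster.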

Some websites, such as zoominfo.com, may require you to wait between requests. For that, you can use DOWNLOAD_DELAY to give your crawler a little rest. You can read more about it here. This is how you can add it to your spider:

```python
import scrapy


class MySpider(scrapy.Spider):
    name = "my_spider"
    start_urls = ["https://example.com"]
    download_delay = 1  # Wait about 1 second between requests to the same site
```

You can also use CONCURRENT_REQUESTS to control how many requests Scrapy sends at a time. You can read more about it here.
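Both the delay and the concurrency limits can also be set project-wide in settings.py instead of on each spider. A sketch with illustrative values, not tuned for any particular site; the defaults noted in the comments are Scrapy's documented defaults.

```python
# settings.py (sketch with illustrative values)
CONCURRENT_REQUESTS = 16            # max requests in flight overall (default: 16)
CONCURRENT_REQUESTS_PER_DOMAIN = 8  # max requests in flight per domain (default: 8)
DOWNLOAD_DELAY = 1                  # seconds to wait between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True     # actual wait varies from 0.5x to 1.5x DOWNLOAD_DELAY
```

Lowering concurrency and raising the delay trades speed for politeness; the right balance depends on what the target site tolerates.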

You can also use ROBOTSTXT_OBEY to make your crawler obey the rules that domain owners set for data collection in their robots.txt file. As a data collector, you should respect their boundaries. You can read more about it here.
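With `ROBOTSTXT_OBEY = True` (which the settings.py generated by `scrapy startproject` already sets), Scrapy checks robots.txt for you. The requests-based crawler from earlier has no such check; one option is the standard library's `urllib.robotparser`. A minimal sketch, parsing hypothetical rules from a list of lines rather than fetching a real robots.txt (for a live site you would call `set_url()` and `read()` instead):

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, parsed from a list instead of fetched.
ROBOTS_LINES = [
    "User-agent: *",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(ROBOTS_LINES)

print(parser.can_fetch("mycrawler", "https://example.com/catalogue/page-2.html"))  # True
print(parser.can_fetch("mycrawler", "https://example.com/private/data.html"))      # False
```

In the requests crawler, a check like this before calling `crawl_page()` would skip disallowed URLs.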

Complete Code

There are multiple data points available on this website which can also be scraped. But for now, the complete code for this tutorial will look like this.

```python
# crawling_spider.py
from scrapy.spiders import CrawlSpider, Rule
from scrapy.linkextractors import LinkExtractor


class CrawlingSpider(CrawlSpider):
    name = "mycrawler"
    allowed_domains = ["toscrape.com"]
    start_urls = ["https://books.toscrape.com/"]

    rules = (
        # Follow category pages (no callback, so their links are followed)
        Rule(LinkExtractor(allow="catalogue/category")),
        # Send catalogue URLs that are not category pages to parse_item
        Rule(LinkExtractor(allow="catalogue", deny="category"), callback="parse_item"),
    )

    def parse_item(self, response):
        yield {
            "title": response.css(".product_main h1::text").get(),
            "price": response.css(".price_color::text").get(),
            # [1] skips the whitespace-only text node before the stock icon
            "availability": response.css(".availability::text")[1].get().strip(),
        }
```

Conclusion

In this blog, we created one crawler with requests and BeautifulSoup and another with Scrapy. Both can get the job done, but Scrapy lets you finish it faster and gives you the flexibility to crawl many websites efficiently. Beginners might find Scrapy a little intimidating, but once you get the hang of it, you will be able to crawl websites very easily.

I hope now you clearly understand the difference between web scraping and web crawling. The parse_item() function is doing web scraping once the URLs are crawled.

I think you are now capable of crawling websites whose data matters. You can start with crawling Amazon and see how it goes. You can start by reading this guide on web scraping Amazon with Python.

I hope you like this tutorial and if you do then please do not forget to share it with your friends and on your social media.